Research News

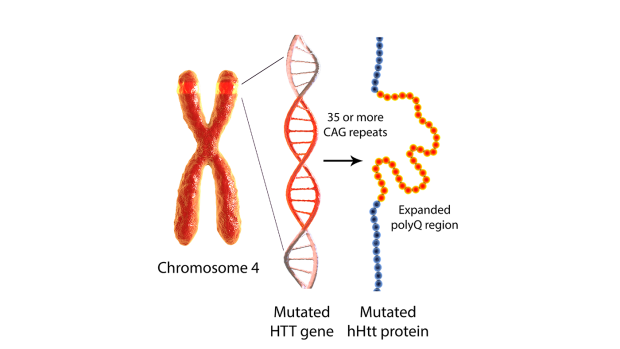

Tracking Wildlife Trafficking in the Age of Online Marketplaces

Wildlife trafficking is one of the world’s most widespread illegal trades, contributing to biodiversity loss, organized crime, and public health risks. Once concentrated in physical markets, much of this activity has moved online. Today, animals and animal products are advertised on large e-commerce platforms alongside ordinary consumer goods. This shift makes enforcement harder — but it also creates a valuable source of data.

Every online advertisement leaves behind digital information: text descriptions, prices, images, seller details, and timestamps. If collected and analyzed at scale, these traces can help researchers understand how wildlife trafficking operates online. The problem is volume. Online marketplaces contain millions of listings, and most searches for animal names return irrelevant results such as toys, artwork, or souvenirs. Distinguishing illegal wildlife ads from harmless products is difficult to do manually and challenging to automate.

Institute Professor of Computer Science Juliana Freire is part of a team taking on the problem head-on by building a scalable system. They developed a flexible data collection pipeline that automatically gathers wildlife-related advertisements from the web and filters them using modern machine learning techniques. The goal is not to focus on one species or one website, but to enable broad, systematic monitoring across many platforms, regions, and languages, as well as to develop strategies to disrupt illegal markets.

The multidisciplinary team includes Gohar Petrossian, Professor of Criminal Justice at John Jay College of Criminal Justice; Jennifer Jacquet, Professor of Environmental Science and Policy at the University of Miami; and Sunandan Chakraborty, Professor of Data Science at Indiana University.

The pipeline begins with web crawling. The researchers generate tens of thousands of search URLs by combining endangered species names with the search structures of major e-commerce websites. A specialized crawler then follows these links, downloading product pages while limiting requests to avoid overwhelming servers. Over just 34 days, the system retrieved more than 11 million ads.
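The URL-generation step can be pictured with a minimal sketch. Everything named here is an assumption made for illustration: the species list, the product terms, the `.example` URL templates, and the two-second delay do not come from the paper, and no network requests are made.

```python
import itertools
from urllib.parse import quote_plus

# Hypothetical inputs: neither the species list nor the URL templates come from the paper.
SPECIES = ["tiger shark", "nile crocodile"]
PRODUCT_TERMS = ["", "leather", "trophy"]  # "" means the bare species name
SEARCH_TEMPLATES = [
    "https://marketplace-a.example/search?q={query}",
    "https://marketplace-b.example/s/{query}?page={page}",
]


def build_search_urls(species, product_terms, templates, pages=2):
    """Combine every species, product term, template, and page into one URL."""
    urls = []
    for name, term, template, page in itertools.product(
        species, product_terms, templates, range(1, pages + 1)
    ):
        query = quote_plus(f"{name} {term}".strip())
        urls.append(template.format(query=query, page=page))
    # A template without {page} yields the same URL for every page: dedupe, keep order.
    return list(dict.fromkeys(urls))


def crawl_schedule(urls, delay_seconds=2.0):
    """Pair each URL with a start offset so that requests are spaced out."""
    return [(i * delay_seconds, url) for i, url in enumerate(urls)]


urls = build_search_urls(SPECIES, PRODUCT_TERMS, SEARCH_TEMPLATES)
for offset, url in crawl_schedule(urls)[:3]:
    print(f"t+{offset:>4.1f}s  {url}")
```

With thousands of species names and many site templates, the same cross product would yield the tens of thousands of search URLs described above. A real crawler would also need per-site rate limits and robots.txt handling, which this sketch leaves out.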

Next comes information extraction. Product pages are messy and inconsistent, varying widely across websites. The pipeline uses a combination of HTML parsing tools and automated scrapers to extract useful details such as titles, descriptions, prices, images, and seller information. These data are stored in structured formats that allow large-scale analysis.
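As a rough illustration of the extraction step, the sketch below pulls a title, a price, and image URLs out of a single page using Python's standard `html.parser` module. The page markup, the `price` class name, and the `ListingParser` class are all hypothetical. Real product pages differ from site to site, which is why the pipeline combines several tools.

```python
from html.parser import HTMLParser


class ListingParser(HTMLParser):
    """Collect a title, a price, and image URLs from one product page."""

    def __init__(self):
        super().__init__()
        self.fields = {"images": []}
        self._pending = None  # name of the field whose text is expected next

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if tag == "h1":
            self._pending = "title"
        elif "price" in classes:
            self._pending = "price"
        elif tag == "img" and attrs.get("src"):
            self.fields["images"].append(attrs["src"])

    def handle_data(self, data):
        text = data.strip()
        if self._pending and text:
            self.fields[self._pending] = text
            self._pending = None

    def handle_endtag(self, tag):
        self._pending = None


# Hypothetical markup standing in for a downloaded product page.
SAMPLE_PAGE = """
<html><body>
  <h1> Crocodile leather bag </h1>
  <span class="price">$120.00</span>
  <img src="/img/1.jpg"><img src="/img/2.jpg">
</body></html>
"""

parser = ListingParser()
parser.feed(SAMPLE_PAGE)
record = parser.fields
print(record)
```

Each page becomes one structured record, which is the form needed for the large-scale analysis that follows.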

The most critical step is filtering. While machine learning classifiers can be used for this filtering, training specialized classifiers for multiple collection tasks is both time-consuming and expensive, requiring experts to create training data for each task. Freire’s group developed a new approach that uses large language models (LLMs) to label data and then uses the labeled data to automatically create specialized classifiers, which can perform data triage at low cost and at scale.
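The label-then-train idea can be sketched in a few lines. This is an illustration under stated assumptions, not the team's implementation: `llm_label` is a hypothetical stand-in that uses a keyword rule instead of calling a real LLM, and the classifier is a small Naive Bayes model chosen only because it fits in pure Python.

```python
import math
from collections import Counter, defaultdict


def llm_label(ad_title):
    """Stand-in for an LLM call (hypothetical); a keyword rule keeps the sketch runnable."""
    decoys = ("toy", "plush", "print", "poster", "costume")
    return "irrelevant" if any(w in ad_title.lower() for w in decoys) else "relevant"


def tokenize(text):
    return text.lower().split()


def train_naive_bayes(labeled_ads):
    """Fit a multinomial Naive Bayes model on (title, label) pairs."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for title, label in labeled_ads:
        label_counts[label] += 1
        word_counts[label].update(tokenize(title))
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, label_counts, vocab


def predict(model, title):
    """Return the label with the highest log-probability for this title."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    scores = {}
    for label, n in label_counts.items():
        denom = sum(word_counts[label].values()) + len(vocab)
        score = math.log(n / total)
        for word in tokenize(title):
            if word in vocab:  # ignore words never seen in training
                # Laplace (add-one) smoothing
                score += math.log((word_counts[label][word] + 1) / denom)
        scores[label] = score
    return max(scores, key=scores.get)


unlabeled = [
    "genuine crocodile leather bag",
    "alligator skin watch strap",
    "python leather wallet",
    "shark jaw trophy real",
    "crocodile plush toy for kids",
    "shark poster print",
    "alligator costume halloween",
    "python toy snake",
]
# Step 1: the (stubbed) LLM labels a small sample once.
labeled = [(title, llm_label(title)) for title in unlabeled]
# Step 2: a cheap classifier is trained on those labels and reused at scale.
model = train_naive_bayes(labeled)
print(predict(model, "real crocodile leather belt"))
print(predict(model, "crocodile toy"))
```

The design point is cost: the expensive labeler runs on a sample, while the small classifier handles the millions of remaining ads.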

This research has enabled large-scale data collection to answer different scientific questions and shed light on different aspects of wildlife trafficking. One analysis of 14,000 reptile leather product listings on eBay showed that crocodile, alligator, and python skins dominated the market. Only about 10 animal-product combinations (such as ‘crocodile bags’, ‘alligator bags’ and ‘alligator watches’) made up about 72 percent of all listings, indicating that the trade focuses heavily on a few luxury items. The analysis of all of the listings from these sites showed that while small leather products were shipped from 65 countries, 93 percent came from 10 countries, with the United States, United Kingdom, and Australia collectively accounting for over three-quarters of this market.

Similar data from eBay on shark and ray trophies reveal that, although the platform has introduced policies to restrict threatened or endangered species, their derivatives still circulate widely on the platform. Tiger shark trophies accounted for one-fifth of such listings, with asking prices up to $3,000. Over 85 percent of listings were linked to sellers in the United States, suggesting a pipeline from deep sea commercial fishing vessels to the US trophy trade.

This research is also being used to determine what would be the most effective way to disrupt this market. For example, the researchers found that targeting key sellers is effective, but targeting key product types — “alligator watch,” for example — breaks the market of reptile leather products equally effectively, and is much easier to enact at a broad scale.

The authors emphasize that this system is a starting point, not a finished solution. The pipeline is designed to be extensible, allowing future researchers to incorporate better classifiers, image-based analysis, or new data sources. By making the code openly available, they aim to support broader collaboration.

As wildlife trade continues to move online, understanding its digital footprint will be increasingly important. Scalable data collection tools like this one offer a way to transform scattered online listings into actionable knowledge, an essential step toward disrupting illegal wildlife trade in the digital era.

Juliana Silva Barbosa, Ulhas Gondhali, Gohar Petrossian, Kinshuk Sharma, Sunandan Chakraborty, Jennifer Jacquet, and Juliana Freire. 2025. A Cost-Effective LLM-based Approach to Identify Wildlife Trafficking in Online Marketplaces. Proc. ACM Manag. Data 3, 3, Article 119 (June 2025), 23 pages. https://doi.org/10.1145/3725256

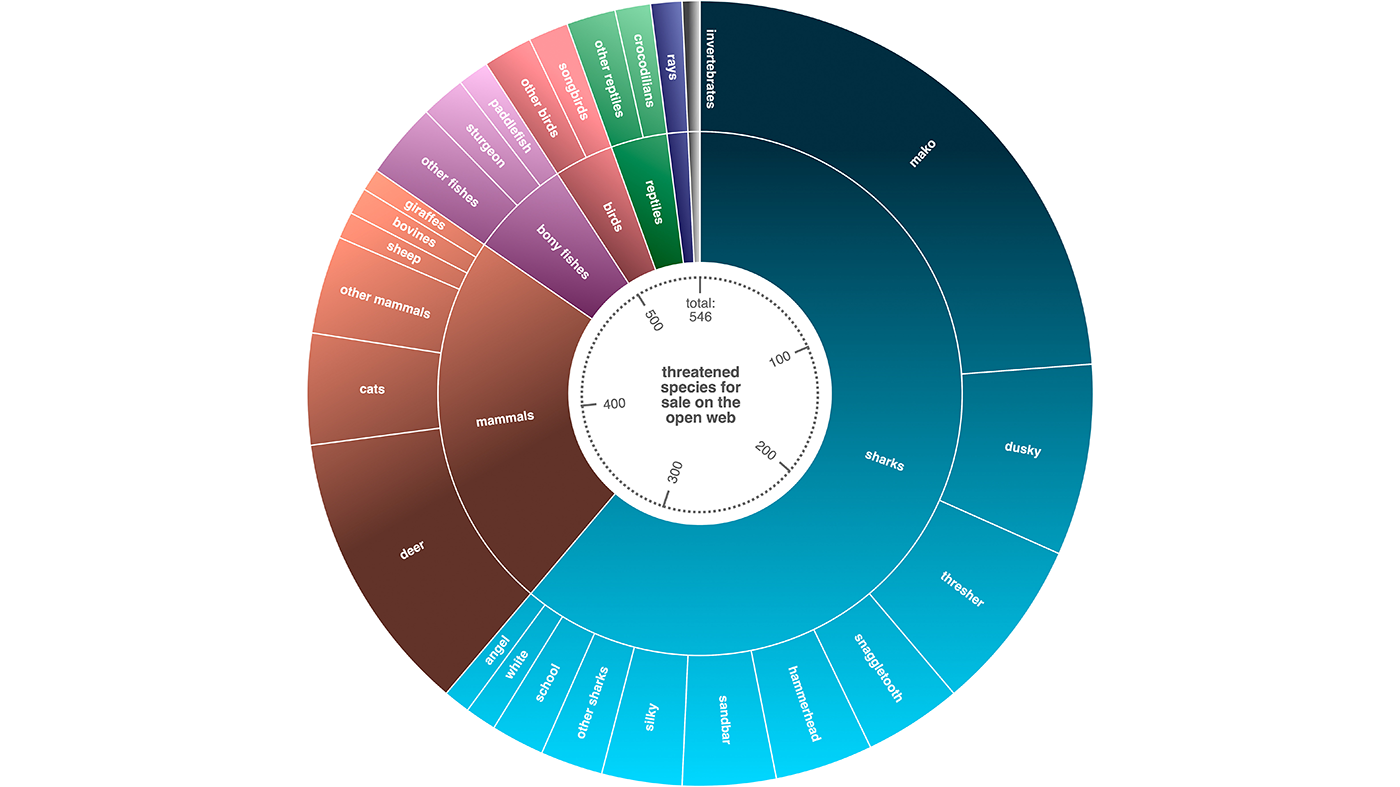

Huntington’s Disease is Neuroscience’s Clearest Test Case

Neuroscience rarely enjoys clean experiments. Most brain disorders are mosaics of risk genes, aging, lifestyle and chance that leave their origins obscured. Huntington’s disease (HD) is different. It begins with a single genetic expansion — a repeated stretch of DNA letters in the HTT gene — that is both measurable and decisive. If you inherit a sufficiently long repeat, you will develop the disease. That stark clarity makes HD scientifically invaluable.

That is the argument put forward by Roy Maimon in a new essay in Trends in Molecular Medicine. Maimon, Assistant Professor of Biomedical Engineering, is an expert in endogenous neural stem cells and their altered regenerative potential in brain diseases like HD. In the piece, he argues that HD’s clear cause and effects offer the ideal starting point to uncover parts of the brain, and the diseases that affect it, that are far less understood.

But it is not only the unique biological properties of HD that make it such a potentially productive topic — it’s the people too. “The HD world is unusually united,” Maimon writes. “It is among the few fields in biomedicine where patients, scientists, and clinicians share the same space. We meet families regularly. We see their courage and humor. We celebrate together and we grieve together.”

“It is this culture that makes HD research not just productive but deeply personal, too. You become part of something when you join this community that truly matters.”

The HTT mutation acts like a molecular clock. The longer the repeat, the earlier symptoms tend to appear. Decades before the first involuntary movement or subtle cognitive shift, a blood test can reveal who carries the expansion. Few neurological diseases offer such foresight. That predictability allows researchers to ask a question that is nearly impossible elsewhere: What happens if we intervene before neurons begin to die?

The brain changes in HD follow a surprisingly consistent pattern. Early damage centers on a deep brain structure called the striatum, which helps control movement and decision-making. Over time, connected regions of the cortex also become involved. Even within the striatum, certain neurons are especially vulnerable, while their neighbors remain relatively resilient. Why some cells are fragile and others hardy is a major puzzle — and HD offers a controlled setting to investigate it.

Because the genetic cause is so precise, HD has become a testing ground for cutting-edge therapies. Drugs called antisense oligonucleotides are designed to lower production of the harmful huntingtin protein. Gene therapies aim to deliver long-lasting genetic instructions that reduce or modify the faulty gene’s output. Other approaches target the DNA repair machinery thought to drive repeat expansion. Not every clinical trial has succeeded, but each has sharpened our understanding of how to measure brain changes, track biomarkers in blood and spinal fluid, and choose the best moment to intervene.

Researchers are also exploring ways to repair or replace damaged cells. The striatum lies near a region of the brain that can generate new neurons, at least in small numbers. In animal studies, boosting this process — or transplanting healthy cells — has shown promise in rebuilding parts of the damaged circuit. While such strategies remain experimental, HD provides a uniquely measurable proving ground: a known mutation, defined target cells and a predictable timeline.

“Every dollar invested in HD yields methods, models, and biomarkers that accelerate discoveries throughout the entire field,” Maimon writes. “For students, it's the quickest path to learning real translational neuroscience. For investors and funders, it’s the most efficient place to deploy resources: high-quality trials, engaged patients, measurable outcomes, and clear readthrough to other diseases. For scientists, it is a bridge between basic biology and the clinic.”

“New York University (NYU) is uniquely positioned to serve as a national hub for Huntington’s disease research,” Maimon adds. “The university bridges engineering, neuroscience, clinical medicine, and data science within a single ecosystem, allowing discoveries to move rapidly from molecular insight to patient-facing trials. With strong programs in gene therapy, RNA therapeutics, biomarker development, and computational modeling, NYU offers the interdisciplinary infrastructure required to tackle a disease that demands precision at every level. Equally important, its proximity to major clinical centers and patient communities enables sustained engagement with families living with HD, ensuring that translational efforts remain both scientifically rigorous and deeply human.”

Maimon, R. (2026). Huntington’s disease is the best investment in Neuroscience Today. Trends in Molecular Medicine. https://doi.org/10.1016/j.molmed.2026.01.001

Wildfire Prevention Models Miss Key Factor: How Forests Will Change Over Decades

Eucalyptus trees, laden with flammable oils, could spread into Portugal's south-central region by 2060 if changing climate conditions make the area more hospitable to their growth, creating wildfire hotspots that would evade detection by conventional prevention approaches.

The gap exists because most wildfire models account for climate change but treat forests as static, missing how vegetation itself will evolve and alter fire risk.

A new study from NYU Tandon School of Engineering fills this gap by modeling both climate and vegetation changes together. Published in the International Journal of Wildland Fire, the research projects how forests will evolve through 2060 and reveals that ignoring vegetation dynamics produces fundamentally incomplete fire risk projections.

"If you only consider the impact of climate but ignore vegetation, you're going to miss wildfire patterns that will happen," said Augustin Guibaud, the NYU Tandon assistant professor who led the research team. "Vegetation works on a timescale that's different from climate or weather."

Testing the model in Portugal revealed a striking paradox: local fire risk doesn't always track with global warming trends. Some higher-emission scenarios actually showed decreased fire risk in Portugal, with medium emissions projecting a 12% decrease when vegetation responses were included. In the low-emission scenario, projections without vegetation changes predicted a 59% increase in burned area by 2060, but including how forests would actually adapt reduced that to just 3%.

"The climate scenario which is more drastic from a temperature perspective may not be the one associated with highest risk at the local level," Guibaud explained. The counterintuitive results underscore that local climate conditions and vegetation responses can diverge significantly from global patterns.

The findings matter beyond Portugal. Wildfires are increasing in frequency, intensity and geographic scope across Mediterranean climates and western North America, with regions like California experiencing recurring large fires. Climate projections indicate these trends will continue, making long-term planning increasingly important. Guibaud anticipates working with federal agencies to apply the methodology in the United States, where the same dynamics of shifting vegetation and fire risk are playing out.

The team developed their approach using machine learning to analyze Portugal's wildfire patterns, correctly identifying 84% of historical wildfire locations in validation tests. They modeled how wildfires would change under three climate futures through 2060 — from low to high emissions — incorporating how seven dominant ecosystems characterized by the tree species would shift in response to changing temperature and precipitation.

The model has immediate practical implications. Planting strategies aimed at reducing wildfire risk can backfire if they don't account for future climate. Species that won't survive future conditions waste resources, while fire-prone species that will thrive "lock in elevated risk for decades," Guibaud said. Because forest ecosystems take about a century to fully restore, those mistakes reverberate for generations.

The team's model integrates data from NASA satellite systems, Portugal's National Forest Inventory, and IPCC climate projections, using Maximum Entropy modeling to project species shifts and a Graph Convolutional Network to assess fire risk based on surrounding vegetation and terrain. The researchers developed a method to decouple climate and vegetation effects by running projections twice: once holding vegetation constant and once allowing it to evolve.
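The two-run comparison reduces to simple arithmetic once both projections exist. The sketch below applies it to the low-emission figures quoted earlier (a 59% increase in burned area with vegetation held constant, 3% with vegetation allowed to evolve). The additive split and the `decouple` function are assumptions made for illustration. The article does not spell out how the paper attributes the difference.

```python
def decouple(change_static_veg_pct, change_evolving_veg_pct):
    """Split a projected change in burned area into climate and vegetation parts.

    Assumes a simple additive split (an illustration, not the paper's stated
    method): the static-vegetation run isolates climate, and the difference
    between the two runs is attributed to vegetation change.
    """
    return {
        "climate_only": change_static_veg_pct,
        "vegetation": change_evolving_veg_pct - change_static_veg_pct,
        "combined": change_evolving_veg_pct,
    }


# Low-emission scenario figures quoted in the article, in percent change by 2060.
low_emission = decouple(59.0, 3.0)
print(low_emission)
```

Under that assumption, vegetation change offsets 56 percentage points of the climate-only increase in the low-emission scenario.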

The team plans to refine the vegetation modeling to include shrubs and grasses, not just tree species. In addition to Guibaud, who sits in Tandon's Mechanical and Aerospace Engineering Department and its Center for Urban Science + Progress, the paper's authors are Feiyang Ren, now at the University of Leeds; Noah Tobinsky, who worked on the project as a master's student at NYU Tandon; and Trisung Dorji of University College London.

Ren F, Tobinsky N, Dorji T, Guibaud A. (2025) On the importance of both climate and vegetation evolution when predicting long-term wildfire susceptibility. International Journal of Wildland Fire 34, WF25092. https://doi.org/10.1071/WF25092

New Mathematical Model Shows How Economic Inequalities Affect Migration Patterns

For as long as there have been humans, there have been migrations — some driven by the promise of a better life, others by the desperate need to survive. But while the world has changed dramatically, the mathematical models used to explain how people move have often lagged behind reality. A new study in PNAS Nexus from a team led by Institute Professor Maurizio Porfiri argues that the patterns of human movement can’t be fully understood without reckoning with inequality.

For decades, researchers have relied on models that treat all cities and regions as if they were equal. The “radiation” and “gravity” models, the workhorses of mobility science, describe migration as a function of population size and distance: how many people live in one place, and how far they have to go to reach another. These equations have been useful for predicting broad commuting and migration trends, but they share a blind spot: they assume that opportunities and living conditions are evenly distributed. In a world where climate change, war, and widening economic divides are shaping the way people move, that assumption no longer makes sense.
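For readers who want to see the blind spot concretely, here is a minimal sketch of both classic models in their standard textbook forms. The three places, their coordinates, and the constants `k` and `gamma` are made up for illustration. Note that the only inputs are population and distance: nothing describes how attractive or livable a place is.

```python
import math

# Toy data (hypothetical): name -> (population, x_km, y_km)
PLACES = {
    "A": (100_000, 0.0, 0.0),
    "B": (50_000, 30.0, 0.0),
    "C": (200_000, 0.0, 40.0),
}


def distance(a, b):
    (_, xa, ya), (_, xb, yb) = PLACES[a], PLACES[b]
    return math.hypot(xa - xb, ya - yb)


def gravity_flow(origin, dest, k=1e-6, gamma=2.0):
    """Gravity model: flow grows with both populations and decays with distance."""
    m_i, m_j = PLACES[origin][0], PLACES[dest][0]
    return k * m_i * m_j / distance(origin, dest) ** gamma


def radiation_flow(origin, dest, total_leaving):
    """Radiation model: T_ij = T_i * m_i * n_j / ((m_i + s_ij) * (m_i + n_j + s_ij)).

    s_ij is the population living no farther from the origin than the
    destination is, excluding the origin and destination themselves.
    """
    m_i, n_j = PLACES[origin][0], PLACES[dest][0]
    r = distance(origin, dest)
    s_ij = sum(
        pop
        for name, (pop, _, _) in PLACES.items()
        if name not in (origin, dest) and distance(origin, name) <= r
    )
    return total_leaving * m_i * n_j / ((m_i + s_ij) * (m_i + n_j + s_ij))


for dest in ("B", "C"):
    print(dest, round(gravity_flow("A", dest), 2), round(radiation_flow("A", dest, 1000), 2))
```

An inequality-aware variant would have to add some per-location term to these formulas. The sketch deliberately stops short of that, since the article does not give the new model's equations.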

Porfiri and his colleagues built a new model that explicitly incorporates inequality. It assigns each location a different “opportunity distribution,” a measure of how attractive it is based on social, economic, or environmental conditions. Cities or towns suffering from war, poverty, or environmental disasters are penalized in the model; their residents are more likely to leave, and outsiders are less likely to move in. The result is a mathematical system that behaves more like the real world.

The team tested their model in two settings: South Sudan and the United States—places that could hardly be more different, yet both marked by deep disparities. In South Sudan, years of civil conflict and catastrophic flooding have displaced millions. The researchers assembled a new dataset that tracked these internal movements across the country’s counties between 2020 and 2021. When they compared their inequality-aware model to the traditional one, the difference was stark. The new approach captured how people fled not just from areas of violence but also from those hit hardest by floods, revealing the powerful influence of environmental stress on migration. In fact, flooding alone explained more of the observed migration patterns than conflict did.

In the United States, the researchers turned their attention to a more familiar form of movement: the daily commute. Using data from the American Community Survey, they explored how factors like income inequality, poverty, and housing costs shape commuting flows between counties. Once again, inequality mattered. The model showed that places where rent consumed a larger share of income, or where poverty was more widespread, had distinctive commuting patterns — ones that standard models could not explain.

What the study suggests is that mobility is as much a story of inequality as it is of geography. People do not simply move because of distance or population pressure; they move because some places have become unlivable, unaffordable, or unsafe. “Mobility reflects human aspiration, but also human constraint,” said Porfiri, who serves as Director of the Center for Urban Science + Progress, Interim Chair of the Department of Civil and Urban Engineering, as well as Director of NYU’s Urban Institute. “Understanding both sides of that equation is crucial if we want to plan for the future.”

The implications are far-reaching. As climate change intensifies floods, droughts, and heat waves, and as economic gaps widen within and between nations, migration pressures are likely to grow. Models like this one could help policymakers anticipate where displaced people will go, and what stresses those movements might place on cities and infrastructure. They could also inform strategies to reduce inequality itself — by identifying which regions are most vulnerable to losing their populations, and which are absorbing more than they can sustain.

Alongside Porfiri, contributing authors include Alain Boldini of the New York Institute of Technology; Manuel Heitor of Instituto Superior Técnico, Lisbon; Salvatore Imperatore and Pietro De Lellis of the University of Naples; Rishita Das of the Indian Institute of Science; and Luis Ceferino of the University of California, Berkeley. This study was funded in part by the National Science Foundation.

Alain Boldini, Pietro De Lellis, Salvatore Imperatore, Rishita Das, Luis Ceferino, Manuel Heitor, Maurizio Porfiri, Predicting the role of inequalities on human mobility patterns, PNAS Nexus, Volume 5, Issue 1, January 2026, pgaf407, https://doi.org/10.1093/pnasnexus/pgaf407

How Policy, People, and Power Interact to Determine the Future of the Electric Grid

When energy researchers talk about the future of the grid, they often focus on individual pieces: solar panels, batteries, nuclear plants, or new transmission lines. But in a recent study, urban systems researcher Anton Rozhkov takes a different approach — treating the energy system itself as a complex, evolving organism shaped as much by policy and human behavior as by technology.

Rozhkov’s research, recently published in PLOS Complex Systems, models the electricity system of Northern Illinois, focusing on the service territory of Commonwealth Edison (ComEd). Rather than trying to predict exactly how much electricity the region will generate or consume decades from now, the model explores how the system’s overall behavior changes under different long-term scenarios.

“This work is not intended as a deterministic prediction,” Rozhkov, Industry Assistant Professor in the Center for Urban Science + Progress, explains. “What’s important for complex systems is the general trend, the system’s trajectory, whether something is increasing or decreasing under various scenarios, and how steep and fast that change is.”

The study uses a system dynamics framework, a method designed to capture feedback loops and interactions over time. Rozhkov modeled both electricity generation — reflecting Illinois’s current distinctive mix, including a significant share of nuclear power — and demand, then explored how the balance shifts across a 50-year horizon. Illinois is an especially interesting test case, he notes, because few U.S. states rely as heavily on nuclear energy, making the transition to low-carbon systems more nuanced than a simple fossil-fuel-to-renewables swap.
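A system dynamics model is built from stocks, flows, and feedback loops. The toy below shows the mechanics with a single stock, the share of demand met by distributed generation, and a policy incentive that speeds up adoption. It is not Rozhkov's model: the structure, the `simulate` function, and every parameter value are hypothetical placeholders.

```python
def simulate(years=50, incentive=0.0, dt=1.0):
    """Euler-integrate one stock: the share of demand met by distributed generation.

    All parameter values are hypothetical placeholders, not calibrated to ComEd data.
    """
    adoption = 0.02        # stock: fraction of demand served by distributed energy
    total_demand = 100.0   # arbitrary units, held constant for simplicity
    base_rate = 0.05       # baseline adoption rate per year
    history = []
    for year in range(years + 1):
        central_demand = total_demand * (1.0 - adoption)
        history.append((year, adoption, central_demand))
        # Reinforcing loop: more adopters speed up adoption.
        # Balancing loop: the remaining pool of non-adopters shrinks.
        flow = (base_rate + incentive) * adoption * (1.0 - adoption)
        adoption += flow * dt
    return history


baseline = simulate()
with_policy = simulate(incentive=0.05)
print(f"central demand after 50 years, no incentive:   {baseline[-1][2]:.1f}")
print(f"central demand after 50 years, with incentive: {with_policy[-1][2]:.1f}")
```

As in the study, the useful output is the direction and steepness of the trajectory under each scenario rather than any single number.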

From this baseline, Rozhkov examined five broad scenarios. Some focused on technology, such as a future dominated by renewable energy, with or without the development of large-scale battery storage. Others reflected policy goals, including a scenario aligned with Illinois’s Climate and Equitable Jobs Act, which sets a target of economy-wide climate neutrality by 2040. Still others explored changes in how people live and consume energy, including denser urban development and widespread adoption of distributed energy resources by households and neighborhoods.

Across these scenarios, the model tracked economic costs and environmental outcomes, particularly greenhouse gas emissions. One striking pattern emerged when decentralized energy production (such as rooftop solar paired with the ability to sell excess power back to the grid) became widespread. In that case, demand for centralized electricity generation steadily declined, and policies were needed to support that shift: a clear example of an integrated approach. “We can clearly see that utilities would be needed to produce energy differently in the future, and the energy market should be ready for it,” Rozhkov says.

The central concept in the paper is what Rozhkov calls a “policy-driven transition.” Policy, in this context, is not a physical component of the grid but a force that shapes decisions. Incentives, tax credits, and regulations can push households and businesses toward clean energy even when natural conditions are less than ideal. He points to the northeastern states as an example: despite limited sunlight, strong solar policies have made rooftop installations attractive. “Policy can move someone from being on the fence about installing solar to actually doing it,” he says.

The research also highlights the growing role of decentralization, in which individual buildings or districts generate much of their own power. In Illinois’s deregulated electricity market, customers can sell excess energy back to the grid, blurring the line between consumer and producer. Beyond cost savings, decentralization can improve resilience during outages or extreme events, allowing communities to maintain power independently when the main grid fails.

Importantly, Rozhkov’s findings suggest that no single lever — technology, policy, or individual motivation — can drive a successful energy transition on its own. “Isolated single-solution approaches (technology-only, policy-only, planning-only) are rarely enough. The transition emerges from the interactions across all of them, and that’s something we need to consider as urban scientists,” he says. The scenarios that performed best combined strong policy frameworks, technological change, and shifts in behavior and urban design.

Although the model was developed for Northern Illinois, it is designed to be adaptable. With sufficient data, it could be applied to other regions, from New York City to Texas, allowing researchers to explore how different regulatory environments shape energy futures. Rozhkov’s next steps include comparing states with contrasting policies, examining whether policies or natural conditions are driving the transition to a more renewable-based energy profile, and digging deeper into the behavioral factors that lead people to adopt distributed energy in the first place.

Rozhkov, A. (2025). Decentralized Renewable Energy Integration in the urban energy markets: A system dynamics approach. PLOS Complex Systems, 2(12). https://doi.org/10.1371/journal.pcsy.0000083

Predicting the Peak: New AI Model Prepares NYC’s Power Grid for a Warmer Future

Buildings produce a large share of New York's greenhouse gas emissions, but predicting future energy demand — essential for reducing those emissions — has been hampered by missing data on how buildings currently use energy.

Semiha Ergan, of NYU Tandon’s Civil and Urban Engineering (CUE) Department, is pursuing research that addresses the problem from two directions. Both projects, conducted with CUE Ph.D. student Heng Quan, apply machine learning to forecast building energy use, including short-term (day-ahead) predictions to support grid peak management and longer-term (monthly) projections of how climate change may affect energy demand and building-grid interactions.

First, Ergan and Quan introduced STARS (Synthetic-to-real Transfer for At-scale Robust Short-term forecasting), which predicts 24-hour-ahead electricity use across buildings in New York State. The practical problem, Ergan said, is that most buildings lack the historical submetered sensor data that conventional forecasting models require.

“In reality, most buildings only have monthly electricity use data,” said Ergan, noting that detailed sensor data remains rare even in buildings subject to energy reporting requirements like New York City’s Local Laws 84 and 87.

STARS sidesteps that limitation by training on thousands of simulated building profiles from the U.S. Department of Energy's ComStock library, then transferring what it learns to real buildings. Tested against actual consumption data from 101 New York State buildings, the model achieved 12.07 percent error in summer and 11.44 percent in winter, below the roughly 30 percent threshold that industry guidelines consider well-calibrated.
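The article does not name the error metric. A common choice for calibrating building energy models is the coefficient of variation of the root-mean-square error, CV(RMSE), so the sketch below assumes that metric and uses one common definition of it. The hourly readings are invented for the example.

```python
import math


def cv_rmse(actual, predicted):
    """Coefficient of variation of RMSE, in percent: 100 * RMSE / mean(actual)."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)
    return 100.0 * math.sqrt(mse) / (sum(actual) / len(actual))


# Hypothetical hourly readings and day-ahead forecasts, in kWh.
actual_kwh = [100.0, 120.0, 80.0, 100.0]
forecast_kwh = [110.0, 110.0, 90.0, 90.0]

error = cv_rmse(actual_kwh, forecast_kwh)
print(f"CV(RMSE) = {error:.2f}%")
```

Because the error is normalized by mean consumption, buildings of very different sizes can be compared on the same percentage scale.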

The 24-hour forecast window is designed for demand response programs. During heat waves, grid operators need day-ahead predictions to coordinate building energy use (pre-cooling spaces before peak hours, for example) to prevent blackouts. “Eventually, this will help advance grid efficiency and citizen comfort,” said Ergan.

In complementary work with Quan, Ergan is examining how climate change will impact New York City buildings’ energy use over longer timeframes. They developed a physics-based machine learning model to address the poor extrapolation performance of purely data-driven methods.

The model is trained on real building energy consumption data from NYC Local Law 84 covering over 1,000 buildings and projects monthly energy use under warming scenarios of 2 to 4 degrees Fahrenheit. By incorporating physics-based knowledge into the machine learning framework, it enables robust projections even without historical data from the future climate conditions buildings will face.

Their methodology revealed, for example, that a 4-degree increase could raise summer energy use by an average of 7.6 percent.

Together, the two projects address building energy use from complementary time scales: short-term forecasting enables day-ahead grid coordination during peak demand events, while long-term climate projections inform infrastructure planning and policy. Both ultimately support greenhouse gas reduction goals, as operational energy use converts directly to emissions.

The research was funded by NYU Tandon's IDC Innovation Hub.

Heng Quan and Semiha Ergan. 2025. Sim-to-Real Transfer Learning for Large-Scale Short-Term Building Energy Forecasting in Sustainable Cities. In Proceedings of the 12th ACM International Conference on Systems for Energy-Efficient Buildings, Cities, and Transportation (BuildSys '25). Association for Computing Machinery, New York, NY, USA, 86–95. https://doi.org/10.1145/3736425.3772006

Mass Shootings Trigger Starkly Different Congressional Responses on Social Media Along Party Lines, NYU Tandon Study Finds

After mass shootings, Democrats are nearly four times more likely than Republicans to post about guns on social media, but the disparity goes deeper than volume, according to research from NYU Tandon School of Engineering.

The study analyzed the full two-year term of the 117th Congress using computational methods designed to distinguish true cause-and-effect relationships from mere coincidence. The analysis reveals that mass shootings directly trigger Democratic posts within roughly two days, while Republicans show no such causal response.

Topic analysis of gun-related posts, moreover, reveals strikingly different foci. Democrats are far more likely to zero in on legislation, communities, families, and victims, while Republicans more often center on Second Amendment rights, law enforcement, and crime.

The study, in PLOS Global Public Health, analyzed 785,881 total posts from 513 members of the 117th Congress on X over two years, and identified 12,274 gun-related posts using keyword-based filtering. The team tracked how legislators’ posting related to 1,338 mass shooting incidents that occurred between January 2021 and January 2023.
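Keyword-based filtering of this kind can be sketched briefly. The keyword list below is hypothetical, since the article does not give the study's list, and the sample posts are invented. The last lines compute the share of gun-related posts from the two counts reported above.

```python
import re

# Hypothetical keyword list; the study's actual list is not given in the article.
GUN_KEYWORDS = ["gun", "guns", "firearm", "firearms", "shooting", "second amendment"]

# Word boundaries keep "gun" from matching inside unrelated words such as "begun".
PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(k) for k in GUN_KEYWORDS) + r")\b",
    re.IGNORECASE,
)


def is_gun_related(post):
    return PATTERN.search(post) is not None


posts = [
    "We must pass gun safety legislation now.",
    "Proud to defend the Second Amendment.",
    "Congratulations to the team that has begun construction!",
    "Infrastructure week is here.",
]
flagged = [p for p in posts if is_gun_related(p)]
print(flagged)

# Share of gun-related posts, from the counts reported in the study.
share = 12_274 / 785_881
print(f"{share:.2%} of all posts were gun-related")
```

By those counts, gun-related posts made up roughly 1.6 percent of everything the legislators posted over the two-year term.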

"In this research, we tested a long-running concern, namely that when it comes to gun violence, Americans too often talk past each other instead of with each other,” said Institute Professor Maurizio Porfiri, the paper’s senior author. Porfiri is director of NYU Tandon’s Center for Urban Science + Progress (CUSP) and of its newly formed Urban Institute. “Our findings expose a fundamental difference in the way we mourn and react in the aftermath of a mass shooting. Building consensus with these stark differences across the political aisle becomes exceptionally difficult under these conditions.”

The research team employed the PCMCI+ causal discovery algorithm, which uses statistical methods to identify whether one event genuinely causes another or whether they simply occur around the same time. Combined with mixed-effects logistic regression and topic modeling, this approach revealed that Democrats respond causally to shooting severity — particularly the number of fatalities — both immediately and the day after incidents. Posting likelihood rose especially when incidents occurred in legislators' home states.

"The findings matter because they expose a structural problem in how Americans address gun violence as a nation," said CUSP Assistant Research Scientist Dmytro Bukhanevych, the paper’s lead author. "When Democrats surge onto social media after mass shootings while Republicans have a comparatively smaller level of response, there's no shared moment of attention. The asymmetry itself becomes a barrier to meaningful exchange."

The research may help advocates and policymakers time interventions more strategically. With congressional attention peaking immediately and declining within roughly 48 hours, sustained public pressure needs to extend well beyond the immediate aftermath. And understanding the distinct frames each party uses could help communicators craft messages that bridge ideological divides rather than reinforcing them.

Along with Porfiri and Bukhanevych, CUSP Ph.D. candidate Rayan Succar served as the PLOS paper’s co-author.

This study contributes to Porfiri’s ongoing research related to U.S. gun prevalence and violence, which he and colleagues are pursuing under a National Science Foundation grant to study the “firearm ecosystem” in the United States. Prior published research under the grant explores:

- the degree to which political views, rather than race, shape reactions to mass shooting data;

- the role that cities’ population size plays in the incidence of gun homicides, gun ownership and licensed gun sellers;

- motivations of fame-seeking mass shooters;

- factors that prompt gun purchases;

- state-by-state gun ownership trends; and

- forecasting monthly gun homicide rates.

Buffalo's Deadly Blizzard Revealed When Travel Bans Lose Their Power Over Time

When Buffalo, New York’s devastating December 2022 blizzard claimed more than 30 lives, it exposed a hard reality: even life-saving travel bans can lose their force over time, especially when residents face situations where compliance becomes difficult. The disruption stretched on for days, straining households' ability to stay supplied without venturing out.

Researchers at NYU Tandon School of Engineering and Rochester Institute of Technology (RIT) have now developed a way to help authorities anticipate when these breakdowns may begin.

Published in Transport Policy, the study introduces a predictive framework using weather indicators — snowfall, temperature, and snow depth — to estimate how quickly a travel ban may start to lose effectiveness.

"Agencies have the option to implement travel bans during life-threatening storms," said Professor Kaan Ozbay, the paper's senior author and founding Director of NYU Tandon's C2SMART transportation research center. "But a ban that works for a 24-hour storm may not hold for a week-long event. This framework helps officials understand those differences and plan accordingly."

The research compared two Buffalo storms weeks apart in late 2022. Both involved travel restrictions, but travel patterns diverged sharply. Analyzing travel-time and speed estimates from vehicles and navigation systems, researchers tracked how movement changed around the period when restrictions were in effect, identifying statistical "turning points" when travel began shifting back toward normal.
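As a rough illustration of what locating a statistical turning point can involve, the Python sketch below splits a series into two segments and picks the split that minimizes the within-segment squared error. This is a generic single change-point heuristic and not necessarily the method used in the paper. The function name `find_turning_point` and the hourly `activity` values are hypothetical.

```python
def find_turning_point(series):
    """Return the index k that best splits `series` into two constant-mean segments.

    The chosen k minimizes the summed squared error of series[:k] and series[k:].
    Generic illustration only; the paper's own procedure may differ.
    """
    n = len(series)
    if n < 2:
        raise ValueError("need at least two observations")

    def sse(values):
        # Sum of squared deviations from the segment mean.
        mean = sum(values) / len(values)
        return sum((v - mean) ** 2 for v in values)

    best_k, best_cost = None, float("inf")
    for k in range(1, n):
        cost = sse(series[:k]) + sse(series[k:])
        if cost < best_cost:
            best_k, best_cost = k, cost
    return best_k


# Hypothetical hourly travel-activity index during a ban (1.0 = normal traffic).
activity = [0.30, 0.28, 0.31, 0.29, 0.30, 0.55, 0.58, 0.60, 0.57, 0.59]

turning_point = find_turning_point(activity)
print(turning_point)  # 5: activity starts rebounding at the sixth hour
```

In this made-up series the rebound begins while the ban would still be in force, which is the kind of early recovery the study describes for the December storm.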

During December 2022's blizzard, travel patterns rebounded before officials lifted the ban. November 2022's storm, with more frequent updates and neighborhood-specific adjustments for South Buffalo, showed stronger sustained travel suppression. The contrast suggests policy durability is shaped not only by storm conditions, but also by how long restrictions must be maintained and how well responses adapt to local realities.

“Location-specific modifications, including those made for South Buffalo during the November event, may be associated with improved compliance of the policy compared to the December storm,” explained Eren Kaval, a C2SMART Ph.D. candidate and the paper's first author.

The framework introduces a metric called "Loss of Resilience of Policy," which quantifies how a policy's ability to limit travel deteriorates over time. Regression modeling indicates that weather forecast information can help anticipate that trajectory. Harsher conditions, namely heavier snowfall and greater snow depth, are associated with larger losses of policy resilience, a relationship officials could use during planning.
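The article does not give the formula behind the metric. One way to picture the idea, assuming (hypothetically) that a ban's effect is recorded as an hourly "suppression" value between 0 and 1, is to average the shortfall from full suppression over the life of the ban. The function `loss_of_resilience` and both sample series below are illustrative assumptions, not the paper's definition or data.

```python
def loss_of_resilience(suppression, dt_hours=1.0):
    """Average shortfall from full suppression (1.0) over the ban period.

    `suppression` holds equally spaced readings in [0, 1]; the area under the
    shortfall curve is estimated with the trapezoidal rule and divided by the
    ban duration. Hypothetical formulation for illustration only.
    """
    if len(suppression) < 2:
        raise ValueError("need at least two readings")
    shortfall = [1.0 - s for s in suppression]
    area = sum((a + b) / 2 * dt_hours for a, b in zip(shortfall, shortfall[1:]))
    duration = dt_hours * (len(suppression) - 1)
    return area / duration


# Hypothetical readings: a short ban that mostly holds, and a long ban that erodes.
short_ban = [1.0, 1.0, 0.9, 0.9]
long_ban = [1.0, 0.9, 0.7, 0.5, 0.3]

print(loss_of_resilience(short_ban))
print(loss_of_resilience(long_ban))
```

Under this reading, a larger value means the ban lost more of its hold on travel. A regression of such values on forecast snowfall and snow depth would be one way to anticipate the trajectory in advance.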

"If forecasts predict heavy snow over five days, officials can anticipate a blanket ban may not hold," Kaval said. "They might design a different approach from the start, such as targeted restrictions for hardest-hit areas, planned food distribution, or phased restrictions acknowledging people will need to venture out."

The researchers found that the breakdown varied across the city. Some neighborhoods exhibited larger shifts than others, with patterns discussed alongside socioeconomic factors like income and education.

"Some communities had fewer options," Kaval said. "If you can't stockpile a week's supplies, staying home that long becomes impossible." This helps explain why blanket bans can falter. They implicitly assume equal capacity to comply when that capacity varies. The framework can help identify where compliance may be hardest to sustain and inform targeted interventions before storms hit.

"The aim isn't to blame residents or agencies," Ozbay said. "It's to help officials design realistic policies from the beginning. If forecasts show a storm will push beyond what most can prepare for, you can build that into your emergency plan by arranging food deliveries, opening warming centers strategically, or implementing rolling restrictions rather than week-long bans."

The alternative — maintaining restrictions residents cannot realistically follow — can erode trust and weaken future emergency orders. Understanding these dynamics could help preserve emergency measures' legitimacy while keeping people safer.

The approach could apply to other prolonged emergencies like hurricanes or floods, where officials must balance safety with what people can sustain.

The Transport Policy paper was inspired by initial findings from a C2SMART joint research project with NYU Wagner led by Sarah Kaufman, Director of the NYU Rudin Center for Transportation & Assistant Clinical Professor of Public Service, examining lessons learned from the 2022 Buffalo blizzard. It also builds on Professor Ozbay's previous work with Zilin Bian, a co-author of the current paper and NYU Tandon Ph.D. graduate, now an assistant professor at RIT, and Jingqin (Jannie) Gao, Assistant Research Director of C2SMART, on using AI and Big Data to quantify the time lag effect in transportation systems when authorities took action in response to the COVID-19 pandemic.

Eren Kaval, Zilin Bian, Kaan Ozbay, Data-driven quantification of the resilience of enforcement policies under emergency conditions: A comparative study of two major winter storms in Buffalo, New York, Transport Policy, Volume 176, 2026, https://doi.org/10.1016/j.tranpol.2025.103893.

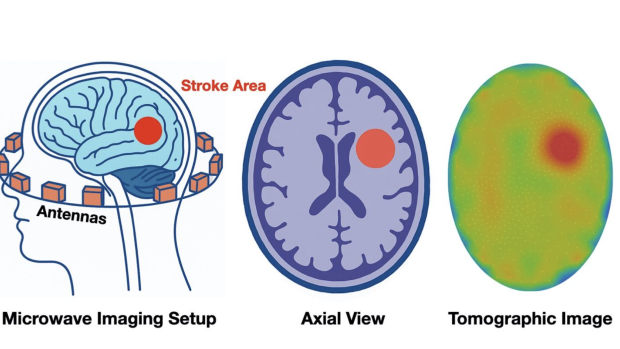

New Algorithm Dramatically Speeds Up Stroke Detection Scans

When someone walks into an emergency room with symptoms of a stroke, every second matters. But today, diagnosing the type of stroke, the life-or-death distinction between a clot and a bleed, requires large, stationary machines like CT scanners that may not be available everywhere. In ambulances, rural clinics, and many hospitals worldwide, doctors often have no way to make this determination in time.

For years, scientists have imagined a different world, one in which a lightweight microwave imaging device, no bigger than a bike helmet, could allow clinicians to look inside the head without radiation, without a shielded room, and without waiting. That idea isn’t far-fetched. Microwave imaging technology already exists and can detect changes in the electrical properties of tissues — changes that happen when stroke, swelling, or tumors disrupt the brain’s normal structure.

The real obstacle has always been speed. “The hardware can be portable,” said Stephen Kim, a Research Professor in the Department of Biomedical Engineering at NYU Tandon. “But the computations needed to turn the raw microwave data into an actual image have been far too slow. You can’t wait up to an hour to know if someone is having a hemorrhagic stroke.”

Kim, along with BME Ph.D. student Lara Pinar and Department Chair Andreas Hielscher, believes that barrier may now be disappearing. In a new study published in IEEE Transactions on Computational Imaging, the team describes an innovative algorithm that reconstructs microwave images 10 to 30 times faster than the best existing methods, a leap that could bring real-time microwave imaging from theory into practice.

It’s a breakthrough that didn’t come from building new devices or designing faster hardware, but from rethinking the mathematics behind the imaging itself. Kim recalls spending long nights in the lab watching microwave reconstructions crawl along frame by frame. “You could almost hear the computer groan,” he said. “It was like trying to push a boulder uphill. We knew there had to be a better way.”

At the heart of the problem is how traditional algorithms work. They repeatedly try to “guess” the electrical properties of the tissue, check whether that guess explains the measured microwave signals, and adjust the guess again. This is a tedious process that can require solving large electromagnetic equations hundreds of times.

The team’s new method takes a different path. Instead of demanding a perfectly accurate intermediate solution at every iteration, their algorithm allows quick, imperfect approximations early on and tightens the accuracy only as needed. This shift, simple in concept but powerful in practice, dramatically reduces the number of heavy computations.
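The general idea of accepting rough intermediate solutions and tightening them only as needed can be shown on a toy problem. The Python sketch below is not the team's PDE-constrained algorithm. It is a small inexact Newton iteration on a made-up 2x2 nonlinear system, where each inner linear solve uses Jacobi sweeps. All names (`newton`, `jacobi_solve`, the matrix `A`, the tolerances) are assumptions chosen for illustration.

```python
import math

# Toy system F(x) = A x + 0.1 * x^3 - b = 0 (made-up numbers, not imaging data).
A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]


def residual(x):
    return [
        sum(A[i][j] * x[j] for j in range(2)) + 0.1 * x[i] ** 3 - b[i]
        for i in range(2)
    ]


def jacobian(x):
    J = [row[:] for row in A]
    for i in range(2):
        J[i][i] += 0.3 * x[i] ** 2  # derivative of 0.1 * x_i^3
    return J


def norm(v):
    return math.sqrt(sum(c * c for c in v))


def jacobi_solve(J, rhs, rel_tol, max_iter=200):
    """Approximately solve J d = rhs; stop once the linear residual is small enough."""
    d = [0.0, 0.0]
    target = rel_tol * norm(rhs)
    for sweep in range(1, max_iter + 1):
        # Jacobi update: every component uses the previous iterate.
        d = [
            (rhs[i] - sum(J[i][j] * d[j] for j in range(2) if j != i)) / J[i][i]
            for i in range(2)
        ]
        lin_res = [rhs[i] - sum(J[i][j] * d[j] for j in range(2)) for i in range(2)]
        if norm(lin_res) <= target:
            return d, sweep
    return d, max_iter


def newton(adaptive, outer_tol=1e-10, max_outer=50):
    """Newton iteration; returns the solution and the total inner sweeps used."""
    x, total_sweeps = [0.0, 0.0], 0
    for _ in range(max_outer):
        F = residual(x)
        if norm(F) < outer_tol:
            break
        # Adaptive: loose inner tolerance early, tighter as the residual shrinks.
        # Fixed: demand a near-exact inner solve at every outer step.
        rel_tol = min(0.1, norm(F)) if adaptive else 1e-10
        d, sweeps = jacobi_solve(jacobian(x), [-f for f in F], rel_tol)
        x = [x[i] + d[i] for i in range(2)]
        total_sweeps += sweeps
    return x, total_sweeps


x_fixed, sweeps_fixed = newton(adaptive=False)
x_adaptive, sweeps_adaptive = newton(adaptive=True)
print("fixed tolerance sweeps:", sweeps_fixed)
print("adaptive tolerance sweeps:", sweeps_adaptive)
```

Both runs reach the same solution, but the adaptive run spends fewer inner sweeps in total because it does not polish early guesses that will be revised anyway. In a real imaging problem each inner solve is a large electromagnetic computation, so the savings matter far more than in this toy.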

To make the process even more efficient, the team incorporated several clever tricks: using a compact mathematical representation to shrink the size of the problem, streamlining how updates are computed, and using a modeling approach that remains stable even for complex head shapes.

The results are striking. Reconstructions that once took nearly an hour now appear in under 40 seconds. In tests with real experimental data, including cylindrical targets imaged using a microwave scanner from the University of Manitoba, the method consistently delivered high-quality results in seconds instead of minutes.

For Kim and Hielscher, who have worked collaboratively for decades on optical and microwave imaging techniques, the speed improvement feels like a long-awaited turning point. “We always knew microwave imaging had the potential to be portable and affordable. But without rapid reconstruction, the technology couldn’t make the leap into real clinical settings,” Hielscher said. “Now we’re finally closing that gap.”

The promise extends far beyond stroke detection. Portable microwave devices could one day provide an accessible alternative to mammography in low-resource settings, monitor brain swelling in intensive care units without repeated CT scans, or track tumor responses to therapy by observing subtle changes in tissue composition.

The team is now focused on extending the algorithm to full 3D imaging, a step that would bring microwave tomography even closer to practical deployment. The momentum is palpable. “We’re taking a technology that has been stuck in the lab for years and giving it the speed it needs to matter clinically,” Kim said. “That’s the part that excites us: imagining how many patients someday might benefit from this.”

Stephen H. Kim, Lara Pinar, Andreas H. Hielscher, Accelerated Microwave Tomographic Imaging with a PDE-Constrained Optimization Method, IEEE Transactions on Computational Imaging, Volume 11, 2025, pp. 1614–1629.

New AI Language-Vision Models Transform Traffic Video Analysis to Improve Road Safety

New York City's thousands of traffic cameras capture endless hours of footage each day, but analyzing that video to identify safety problems and implement improvements typically requires resources that most transportation agencies don't have.

Now, researchers at NYU Tandon School of Engineering have developed an artificial intelligence system that can automatically identify collisions and near-misses in existing traffic video by combining language reasoning and visual intelligence, potentially transforming how cities improve road safety without major new investments.

Published in the journal Accident Analysis and Prevention, the research won New York City's Vision Zero Research Award, an annual recognition of work that aligns with the City's road safety priorities and offers actionable insights. Professor Kaan Ozbay, the paper's senior author, presented the study at the eighth annual Research on the Road symposium on November 19.

The work exemplifies cross-disciplinary collaboration between computer vision experts from NYU's new Center for Robotics and Embodied Intelligence and transportation safety researchers at NYU Tandon's C2SMART center, where Ozbay serves as Director.

By automatically identifying where and when collisions and near-misses occur, the team’s system — called SeeUnsafe — can help transportation agencies pinpoint dangerous intersections and road conditions that need intervention before more serious accidents happen. It leverages pre-trained AI models that can understand both images and text, representing one of the first applications of multimodal large language models to analyze long-form traffic videos.

"You have a thousand cameras running 24/7 in New York City. Having people examine and analyze all that footage manually is untenable," Ozbay said. "SeeUnsafe gives city officials a highly effective way to take full advantage of that existing investment."

"Agencies don't need to be computer vision experts. They can use this technology without the need to collect and label their own data to train an AI-based video analysis model," added NYU Tandon Associate Professor Chen Feng, a co-founding director of the Center for Robotics and Embodied Intelligence, and paper co-author.

Tested on the Toyota Woven Traffic Safety dataset, SeeUnsafe outperformed other models, correctly classifying videos as collisions, near-misses, or normal traffic 76.71% of the time. The system can also identify which specific road users were involved in critical events, with success rates reaching up to 87.5%.

Traditionally, traffic safety interventions are implemented only after accidents occur. By analyzing patterns of near-misses — such as vehicles passing too close to pedestrians or performing risky maneuvers at intersections — agencies can proactively identify danger zones. This approach enables the implementation of preventive measures like improved signage, optimized signal timing, and redesigned road layouts before serious accidents take place.

The system generates “road safety reports” — natural language explanations for its decisions, describing factors like weather conditions, traffic volume, and the specific movements that led to near-misses or collisions.

While the system has limitations, including sensitivity to object tracking accuracy and challenges with low-light conditions, it establishes a foundation for using AI to “understand” road safety context from vast amounts of traffic footage. The researchers suggest the approach could extend to in-vehicle dash cameras, potentially enabling real-time risk assessment from a driver's perspective.

The research adds to a growing body of work from C2SMART that can improve New York City's transportation systems. Recent projects include studying how heavy electric trucks could strain the city's roads and bridges, analyzing how speed cameras change driver behavior across different neighborhoods, developing a “digital twin” that can find smarter routing to reduce FDNY response times, and a multi-year collaboration with the City to monitor the Brooklyn-Queens Expressway for damage-causing overweight vehicles.

In addition to Ozbay and Feng, the paper's authors are lead author Ruixuan Zhang, a Ph.D. student in transportation engineering at NYU Tandon; Beichen Wang and Juexiao Zhang, both graduate students from NYU's Courant Institute of Mathematical Sciences; and Zilin Bian, a recent NYU Tandon Ph.D. graduate now an assistant professor at Rochester Institute of Technology.

Funding for the research came from the National Science Foundation and the U.S. Department of Transportation's University Transportation Centers Program.

Ruixuan Zhang, Beichen Wang, Juexiao Zhang, Zilin Bian, Chen Feng, Kaan Ozbay, When language and vision meet road safety: Leveraging multimodal large language models for video-based traffic accident analysis, Accident Analysis & Prevention, Volume 219, 2025, 108077, ISSN 0001-4575, https://doi.org/10.1016/j.aap.2025.108077.