Research News

A COVID-19 emergency response for remote control of a dialysis machine with mobile HRI

This research is led by Vikram Kapila, professor of mechanical and aerospace engineering. Principal authors are Ph.D. students Hassam Khan Wazir and Christian Lourido, and Sonia Mary Chacko, a researcher and recent Ph.D. graduate under Kapila.

Healthcare workers, at risk of contracting COVID-19 when in close proximity to infected patients, may transmit the virus to other hospital-bound patients, including those on dialysis. In order to circumvent this risk, the researchers proposed a remote control system for dialysis machines. The proposed setup uses dialysis machines fitted with robotic manipulators connected wirelessly to tablets allowing remote control by workers outside of patients’ rooms.

The system comprises an off-the-shelf four-degree-of-freedom (DoF) robotic manipulator equipped with a USB camera. The robot base and camera stand are fixed on a common platform, which keeps installation and operation simple: the user only needs to position the robot in front of its workspace and point the camera at a touchscreen (standing in for a dialysis machine's instrument control panel) with which the manipulator must interact. The user interface, a mobile app, runs on a device connected to the same wireless network as the robot. To identify the plane in which the robot acts, the app uses the camera's video feed, which includes a 2D image marker placed in the plane of the control panel touchscreen, in front of the manipulator. The robotic arm can carry out complicated sequences of button presses and slider adjustments, aided by a series of algorithms that automate some of the machine's functions.
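The article does not say how the app turns the marker into a model of the touchscreen plane. A common technique for a planar marker is to estimate a homography, a 3×3 projective mapping between image pixels and coordinates on the plane, and then map each tap on the live video to a target point for the robot. The sketch below assumes that approach; the corner coordinates, the 50 mm marker size, and the function names (`solve_linear`, `homography_from_4_points`, `map_point`) are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n  # back substitution
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography_from_4_points(src: Sequence[Point], dst: Sequence[Point]) -> List[List[float]]:
    """Direct linear transform: find H (with h33 = 1) mapping each src point onto its dst point."""
    a: List[List[float]] = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def map_point(h: List[List[float]], p: Point) -> Point:
    """Apply H to a pixel and divide by the projective scale factor."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


if __name__ == "__main__":
    # Hypothetical pixel positions of the marker's corners in the video frame...
    marker_px = [(320.0, 240.0), (420.0, 250.0), (410.0, 350.0), (310.0, 340.0)]
    # ...and the same corners in millimetres on the touchscreen plane.
    marker_mm = [(0.0, 0.0), (50.0, 0.0), (50.0, 50.0), (0.0, 50.0)]
    H = homography_from_4_points(marker_px, marker_mm)
    tap = (365.0, 295.0)  # where the remote user tapped on the video feed
    print(map_point(H, tap))  # target point on the panel plane, in mm
```

A homography needs only four non-collinear correspondences, which a square marker supplies; a production system would more likely rely on a tested vision library and refine the estimate over many frames.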

The robotic system attached to the in-use dialysis machine livestreams data and results directly to a tablet or computer operated by a remote user in another room. Users reported somewhat more consistent performance when interacting through a computer than through a tablet, although no significant difference was found between the two modes of operation.

One of the most significant features of this technology is that no custom user interface has to be created to operate it, because the user interacts directly with the video feed of the instrument control panel touchscreen. Thus, the system works with any device that has a touchscreen. The proposed system can be deployed almost immediately, which could make it useful in an emergency. In the future, the researchers may explore adding augmented reality (AR) features to improve the user experience and efficiency.

This work is supported in part by the National Science Foundation under ITEST grant DRL-1614085, RET Site grant EEC-1542286, and DRK-12 grant DRL-1417769.

Examining the effectiveness of a professional development program: Integration of educational robotics into science and mathematics curricula

This research was based on a program developed by Vikram Kapila, professor of mechanical and aerospace engineering.

Sparking interest in STEM education is a critical step toward creating a diverse workforce that can give any country key advantages in the global tech world. The number of STEM jobs in the US has grown by nearly 34% over the past decade, yet the number of students choosing STEM as a major and career is declining. Many educators, researchers, and funding agencies have devoted significant effort to promoting students’ motivation, interest in learning, and academic performance at all levels of the STEM ecosystem, in order to steer students onto pathways toward STEM professions.

For students, robotics appears to be one of the best entryways into engineering and STEM education in general. For example, students using a LEGO robot kit in the classroom can have a joyful, entertaining experience because it feels like playing with toys, which can encourage them to take part in robotics-based learning activities. Teachers, however, can be reluctant. These programs can require specialized knowledge, and teachers often lack models of, or an understanding of, pedagogical approaches for implementing technology-integrated courses in general and robotics-integrated courses in particular.

A new study from NYU Tandon researchers describes a professional development (PD) program designed to help middle school teachers effectively integrate robotics into science and mathematics classrooms. The PD program encouraged the teachers to develop their own science and mathematics lessons, infused with robotics activities and aligned with national standards.

The study is based on a program developed by Vikram Kapila, professor of mechanical and aerospace engineering, and involves Sonia Mary Chacko, a recent doctoral graduate of NYU Tandon. The lead author, Hye Sun You, served as a research associate in the program and is now an assistant professor of science education at Arkansas Tech University. The study proposed that a multi-week summer PD, combined with sustained follow-up during the academic year, would give teachers the technical knowledge and skills of robotics as well as an understanding of when and how to use robotics in science and mathematics teaching.

The study’s 41 participants were 20 mathematics teachers, 20 science teachers, and one teacher who teaches both subjects. Three instruments were administered to the teachers during the PD, and follow-up interviews were conducted to further examine the benefits of the PD and its possible impacts on their teaching. The data were analyzed with both statistical and qualitative methods to assess the effectiveness of the PD.

The study found that integrating LEGO robotics tools has the potential to enhance teaching and learning, and that thoughtful PD programs with ongoing support for teachers can offer specific, practical ways to lower the barriers to embedding technology in educational curricula. The authors expect that PD focused on improving teachers’ knowledge, confidence, and attitudes toward technology will help teachers overcome the barriers that make it difficult to integrate technology into their instruction and, ultimately, will transform student performance through effective use of technology.

This work is supported in part by the National Science Foundation under ITEST grant DRL-1614085, RET Site grant EEC-1542286, and DRK-12 grant DRL-1417769, and by NY Space Grant Consortium grant 76156-10488.

Solvation-Driven Electrochemical Actuation

In a new study led by Institute Professor Maurizio Porfiri at NYU Tandon, researchers demonstrated a novel principle of actuation, that is, of transforming electrical energy into motion. The mechanism is based on solvation, the interaction between solute and solvent molecules in a solution. Solvation is particularly important in water because its molecules are polar: oxygen attracts electrons more strongly than hydrogen does, so oxygen carries a slight negative charge and hydrogen a slight positive one. As a result, water molecules are attracted to charged ions in solution and form shells around them. This microscopic phenomenon plays a critical role in the properties of solutions and in essential biological processes such as protein folding, but before this study there was no evidence that solvation could have macroscopic mechanical consequences.

The group of researchers, which also included Alain Boldini, a Ph.D. candidate in the Department of Mechanical and Aerospace Engineering at NYU Tandon, and Dr. Youngsu Cha of the Korea Institute of Science and Technology, proposed that solvation could be exploited to produce macroscopic deformations in materials. To this end, Porfiri and his group used ionomer membranes, polymeric materials whose negative charges are fixed in place and cannot move. Positive ions can easily enter these membranes, while negative ions are repelled by them. To demonstrate actuation, ionomer membranes were immersed in a solution of water and salt between two electrodes. Applying a voltage across the electrodes caused the membrane to bend. The paper, "Solvation-Driven Electrochemical Actuation," is published in the American Physical Society's Physical Review Letters.

According to the model developed by Porfiri and his group, the voltage caused a current of positive ions toward the negative electrode. These ions entered the membrane from one side, along with the water molecules in their solvation shells. On the other side of the membrane, positive ions and their solvation shells were dragged out. The membrane responded like a sponge: the side full of water expanded, while the side with less water shrank. This differential swelling produced the macroscopic bending of the membrane. Studying actuation with different ions helps to elucidate the phenomenon, because different ions attract different numbers of water molecules.
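How differential swelling turns into bending can be made concrete with Timoshenko's classical bilayer (bimetal-strip) formula, which gives the curvature of a strip whose two bonded layers try to expand by different amounts. This is a textbook approximation introduced here only for illustration, not the model developed by Porfiri and his group; treating the swollen and shrunken sides of the membrane as two elastic layers, and the 200 µm thickness and 1% strain mismatch, are assumptions.

```python
def bilayer_curvature(delta_strain: float, total_thickness: float,
                      thickness_ratio: float = 1.0, modulus_ratio: float = 1.0) -> float:
    """Timoshenko bilayer curvature (1/m) caused by a mismatch in free swelling strain.

    delta_strain:    difference in unconstrained swelling strain between the two layers
    total_thickness: combined thickness h of both layers, in metres
    thickness_ratio: m = t1 / t2
    modulus_ratio:   n = E1 / E2
    """
    m, n, h = thickness_ratio, modulus_ratio, total_thickness
    numerator = 6.0 * delta_strain * (1.0 + m) ** 2
    denominator = h * (3.0 * (1.0 + m) ** 2 + (1.0 + m * n) * (m ** 2 + 1.0 / (m * n)))
    return numerator / denominator


if __name__ == "__main__":
    # Hypothetical membrane: 200 um thick, the wet side swelling 1% more than the dry side.
    kappa = bilayer_curvature(delta_strain=0.01, total_thickness=200e-6)
    # roughly 75 1/m, i.e. a bend radius of about 13 mm
    print(f"curvature {kappa:.1f} 1/m, bend radius {1000 / kappa:.1f} mm")
```

For equal layer thicknesses and stiffnesses the formula reduces to 3Δε/(2h), so in this picture a thinner membrane or a larger swelling mismatch bends more sharply.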

The discovery of macroscopic mechanical consequences of solvation paves the way for further research on membranes. The group expects applications in electrochemical cells (batteries, fuel cells, and electrolyzers), which often rely on the type of membrane used in this study. These membranes also share similarities with natural membranes, such as cell membranes, for which the mechanical effects of solvation are largely unknown.

The work was supported by the National Science Foundation under grant No. OISE-1545857 and the National Research Foundation of Korea (NRF) funded by the Korea government (MSIT) under grant No. 2020R1A2C2005252.

Subverting Privacy-Preserving GANs: Hiding Secrets in Sanitized Images

This research was led by Siddharth Garg, Institute Associate Professor of electrical and computer engineering, and included Benjamin Tan, a research assistant professor of electrical and computer engineering, and Kang Liu, a Ph.D. student.

Machine learning (ML) systems are being proposed for use in domains that affect our day-to-day lives, including facial expression recognition. Because of privacy concerns, users will look for privacy-preservation tools, typically produced by a third party. To this end, generative adversarial networks (GANs), which generate or manipulate images, have been proposed. Versions of these systems called “privacy-preserving GANs” (PP-GANs) are designed to sanitize sensitive data (e.g., images of human faces) so that only application-critical information is retained while private attributes, such as the identity of a subject, are removed, for example by preserving facial expressions while replacing other identifying information.

Such ML-based privacy tools have potential applications in other privacy-sensitive domains, for example to remove location-relevant information from vehicular camera data, to obfuscate the identity of the person who produced a handwriting sample, or to remove barcodes from images. In the case of GANs, the complexity of training such models makes it likely that PP-GAN training will be outsourced to third parties.

To measure the privacy-preserving performance of PP-GANs, researchers typically use empirical metrics of information leakage to demonstrate the (in)ability of deep learning (DL)-based discriminators to identify secret information from sanitized images. Noting that empirical metrics depend on the discriminators’ learning capacities and training budgets, Garg and his collaborators argue that such privacy checks lack the rigor needed to guarantee privacy.

In the paper “Subverting Privacy-Preserving GANs: Hiding Secrets in Sanitized Images,” the team formulated an adversarial setting to "stress-test" whether empirical privacy checks are sufficient to guarantee protection against private data recovery from data that has been “sanitized” by a PP-GAN. In doing so, they showed that PP-GAN designs can, in fact, be subverted to pass privacy checks, while still allowing secret information to be extracted from sanitized images.

While the team’s adversarial PP-GAN passed all existing privacy checks, it actually hid secret data pertaining to the sensitive attributes, even allowing reconstruction of the original private image. The researchers conclude that the results have both foundational and practical implications, and that stronger privacy checks are needed before PP-GANs can be deployed in the real world.

“From a practical standpoint, our results sound a note of caution against the use of data sanitization tools, and specifically PP-GANs, designed by third parties,” explained Garg.

The study, which will be presented at the virtual 35th AAAI Conference on Artificial Intelligence, provides background on PP-GANs and associated empirical privacy checks; formulates an attack scenario to ask if empirical privacy checks can be subverted, and outlines an approach for circumventing empirical privacy checks.

- The team provides the first comprehensive security analysis of privacy-preserving GANs and demonstrates that existing privacy checks are inadequate to detect leakage of sensitive information.

- Using a novel steganographic approach, they adversarially modify a state-of-the-art PP-GAN to hide a secret (the user ID) in purportedly sanitized face images.

- They show that their proposed adversarial PP-GAN can successfully hide sensitive attributes in “sanitized” output images that pass privacy checks, with 100% secret recovery rate.
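The team's adversarial PP-GAN learns its own steganographic encoding, which the article does not describe. As a much simpler stand-in, the classical least-significant-bit (LSB) technique below shows why a check that looks only at image content can miss hidden data: each pixel changes by at most one intensity level, which is visually imperceptible, yet a decoder that knows the scheme recovers the secret exactly. The flattened 8-bit "image", the 16-bit user ID, and the function names are hypothetical.

```python
from typing import List, Sequence


def embed_bits(pixels: Sequence[int], secret: int, n_bits: int) -> List[int]:
    """Hide the n_bits lowest bits of `secret` in the LSBs of the first n_bits pixels (0-255)."""
    if n_bits > len(pixels):
        raise ValueError("image too small for the secret")
    out = list(pixels)
    for i in range(n_bits):
        bit = (secret >> i) & 1
        out[i] = (out[i] & ~1) | bit  # overwrite only the least-significant bit
    return out


def extract_bits(pixels: Sequence[int], n_bits: int) -> int:
    """Read the secret back from the LSBs of the first n_bits pixels."""
    secret = 0
    for i in range(n_bits):
        secret |= (pixels[i] & 1) << i
    return secret


if __name__ == "__main__":
    sanitized = [(37 * k) % 256 for k in range(64)]  # stand-in for a sanitized image
    user_id = 0xBEEF  # hypothetical 16-bit identity label
    stego = embed_bits(sanitized, user_id, 16)
    print(extract_bits(stego, 16) == user_id,
          max(abs(a - b) for a, b in zip(sanitized, stego)))
```

LSB hiding is easy to detect with targeted statistical tests; the point of the adversarial PP-GAN result is that a learned encoding can evade the generic, discriminator-based checks that are currently used.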

“Our experimental results highlighted the insufficiency of existing DL-based privacy checks, and potential risks of using untrusted third-party PP-GAN tools,” said Garg, in the study.

RAPID: High-resolution agent-based modeling of COVID-19 spreading in a small town

COVID-19 has wreaked havoc across the planet. As of January 1, 2021, the WHO had reported nearly 82 million cases globally, with over 1.8 million deaths. In the face of this upheaval, public health authorities and the general population are striving to strike a balance between safety and normalcy. The uncertainty and novelty of the current conditions call for theory and simulation tools that offer fine resolution across multiple strata of society while supporting the evaluation of “what-if” scenarios.

The research team led by Maurizio Porfiri proposes an agent-based modeling platform to simulate the spread of COVID-19 in small towns and cities. The platform works at the resolution of a single individual and is demonstrated for the city of New Rochelle, NY, the site of one of the first outbreaks registered in the United States. The researchers chose New Rochelle not only because of its place in the COVID-19 timeline, but also because agent-based modeling of mid-size towns is relatively unexplored, even though the U.S. is largely composed of small towns.

Supported by expert knowledge and informed by officially reported COVID-19 data, the model captures detailed elements of the spread within a statistically realistic population. Along with pertinent functionality such as testing, treatment, and vaccination options, the model accounts for the burden of other illnesses with symptoms similar to COVID-19. Unique to the model is the ability to explore different testing approaches (in hospitals or at drive-through facilities) and vaccination strategies that prioritize vulnerable groups. Decision making by public authorities could benefit from the model because of its fine-grained resolution, open-source nature, and wide range of features.
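At its core, an agent-based epidemic model tracks each individual's state and updates it through random contacts. The toy sketch below is far simpler than the New Rochelle platform (no households, schools, workplaces, testing, hospitals, or other illnesses) and is not the team's open-source code; it only shows the basic loop: susceptible agents may be infected through contacts, infected agents recover after a fixed number of days, and a chosen fraction is vaccinated at the start and assumed fully protected. All parameter values and the function name `simulate` are hypothetical.

```python
import random
from typing import Dict


def simulate(n_agents: int = 2000, contacts_per_day: int = 8, p_transmit: float = 0.03,
             infectious_days: int = 7, vaccinated_fraction: float = 0.0,
             initial_infected: int = 10, days: int = 120, seed: int = 1) -> Dict[str, int]:
    """Toy agent-based SIR model with random mixing and up-front vaccination."""
    rng = random.Random(seed)
    # States: "S" susceptible, "I" infected, "R" recovered, "V" vaccinated (assumed immune).
    state = ["S"] * n_agents
    days_left = [0] * n_agents
    for i in rng.sample(range(n_agents), int(vaccinated_fraction * n_agents)):
        state[i] = "V"
    susceptible = [i for i in range(n_agents) if state[i] == "S"]
    for i in rng.sample(susceptible, min(initial_infected, len(susceptible))):
        state[i], days_left[i] = "I", infectious_days
    ever_infected = state.count("I")
    for _ in range(days):
        infected = [i for i in range(n_agents) if state[i] == "I"]
        if not infected:
            break
        newly = set()
        for i in infected:
            for _ in range(contacts_per_day):
                j = rng.randrange(n_agents)  # random mixing: anyone can be met
                if state[j] == "S" and rng.random() < p_transmit:
                    newly.add(j)
        for i in infected:  # progress existing infections
            days_left[i] -= 1
            if days_left[i] == 0:
                state[i] = "R"
        for j in newly:  # start new infections the next day
            state[j], days_left[j] = "I", infectious_days
        ever_infected += len(newly)
    return {"ever_infected": ever_infected,
            "vaccinated": state.count("V"),
            "never_infected": state.count("S")}


if __name__ == "__main__":
    for fraction in (0.0, 0.3, 0.7):
        print(fraction, simulate(vaccinated_fraction=fraction))
```

Varying `vaccinated_fraction` across runs is the kind of what-if experiment the article describes, but a toy like this cannot reproduce or validate the study's findings.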

The study reached some stark conclusions. For example, the results suggest that prioritizing vaccination of high-risk individuals has only a marginal effect on the number of COVID-19 deaths; significant improvements require vaccinating a very large fraction of the town's population. Importantly, the benefits of the restrictive measures in place during the first wave greatly surpass those of any of these selective vaccination scenarios. Even with a vaccine available, social distancing, protective measures, and mobility restrictions will remain key tools for fighting COVID-19.

The research team included Zhong-Ping Jiang, professor of electrical and computer engineering; postdocs Agnieszka Truszkowska, who led the implementation of the computational framework for the project, and Brandon Behring; graduate student Jalil Hasanyan; as well as Lorenzo Zino from the University of Groningen, Sachit Butail from Southern Illinois University, Emanuele Caroppo from the Università Cattolica del Sacro Cuore, and Alessandro Rizzo from Turin Polytechnic. The work was partially supported by the National Science Foundation (CMMI-1561134 and CMMI-2027990), Compagnia di San Paolo, MAECI (“Mac2Mic”), the European Research Council, and the Netherlands Organisation for Scientific Research.

Detection of Hardware Trojans Using Controlled Short-Term Aging

This research project is led by Department of Electrical and Computer Engineering Professors Farshad Khorrami and Ramesh Karri, who is co-founder and co-chair of the NYU Center for Cybersecurity, and Prashanth Krishnamurthy, a research scientist at NYU Tandon; and Jörg Henkel and Hussam Amrouch of the Computer Science Department of the Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany.

The project builds on ongoing research, funded by a $1.3 million grant from the Office of Naval Research, to create algorithms for detecting Trojans (deliberate flaws inserted into chips during fabrication) based on short-term aging phenomena in transistors.

The project will focus on short-term aging as a route to detecting hardware Trojans. The efficacy of short-term-aging-based hardware Trojan detection has so far been demonstrated in simulations of integrated circuits (ICs) containing several types of hardware Trojans, with stochastic perturbations injected into the simulation studies. This Defense University Research Instrumentation Program (DURIP) project seeks to demonstrate hardware Trojan detection in actual physical ICs.

Khorrami explained that the new $359,000 grant will support the design and fabrication of 28 nm chips with and without built-in Trojans.

"The supply chain in manufacturing chips is complex and most foundries are overseas. Once a chip is fabricated and returned to the customer, the question is if additional hardware has been included on the chip die for most likely malicious purposes," he said.

For this purpose, this DURIP project is proposing a novel experimental testbed consisting of:

• A specifically designed 3 mm × 3 mm IC that contains Trojan-free and Trojan-infected variants of multiple circuits (e.g., cryptographic accelerators and microcontrollers). The team will use this IC to validate the Trojan detection methodology and to evaluate the efficacy and accuracy of the short-term-aging-based Trojan detection methods.

• An FPGA-based interface module to apply clock signal and inputs to the fabricated IC and collect outputs.

• A fast-switching programmable power supply for precisely applying supply-voltage changes to the ICs being tested. The unit will apply patterns of supply voltages to the test chips to induce controllable and repeatable levels of short-term aging.

• Finally, a data analysis software module on a host computer for machine learning based device evaluation and anomaly detection (i.e., detection of hardware Trojans).
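The article does not specify which machine learning methods the data analysis module will use. As a deliberately simple stand-in for that anomaly-detection step, the sketch below flags a chip under test when its response to each aging stress pattern (here, a hypothetical path-delay shift in picoseconds) departs from a population of known Trojan-free reference chips by more than a z-score threshold. The measurements, the threshold of 3, and the function name are assumptions for illustration.

```python
from statistics import mean, stdev
from typing import List, Sequence


def flag_anomalies(reference: Sequence[Sequence[float]], test: Sequence[float],
                   threshold: float = 3.0) -> List[int]:
    """Return indices of stress patterns where the test chip deviates from the reference chips.

    reference[k][p]: delay shift of Trojan-free chip k under stress pattern p
                     (at least two reference chips are needed for a standard deviation)
    test[p]:         delay shift of the chip under test under the same pattern p
    """
    flagged = []
    for p, value in enumerate(test):
        column = [chip[p] for chip in reference]
        mu, sigma = mean(column), stdev(column)
        if sigma > 0:
            z = (value - mu) / sigma
        else:
            z = 0.0 if value == mu else float("inf")
        if abs(z) > threshold:
            flagged.append(p)
    return flagged


if __name__ == "__main__":
    # Hypothetical delay shifts (ps) of four Trojan-free chips under three stress patterns.
    golden = [[10.1, 20.3, 15.0],
              [9.9, 19.8, 15.2],
              [10.0, 20.1, 14.9],
              [10.2, 20.0, 15.1]]
    suspect = [10.0, 24.5, 15.0]  # pattern 1 ages much more than expected
    print(flag_anomalies(golden, suspect))  # -> [1]
```

A per-pattern z-score ignores correlations between patterns and process variation across wafers, which is why learned models are attractive here; the sketch only shows the shape of the decision.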

This testbed, a vital resource for the physical validation of the proposed NYU-KIT hardware Trojan detection methodology, will also be valuable for evaluating and validating other hardware Trojan detection techniques developed at NYU and by hardware security researchers elsewhere. The testbed will therefore be a unique experimental facility for the hardware security community, providing access to (i) physical ICs with Trojan-free and Trojan-infected variants of circuits ranging from moderate-sized cryptographic circuits to complex microprocessors, and (ii) a generic FPGA-based interface for interrogating and testing these ICs for Trojans with each researcher's own detection method.

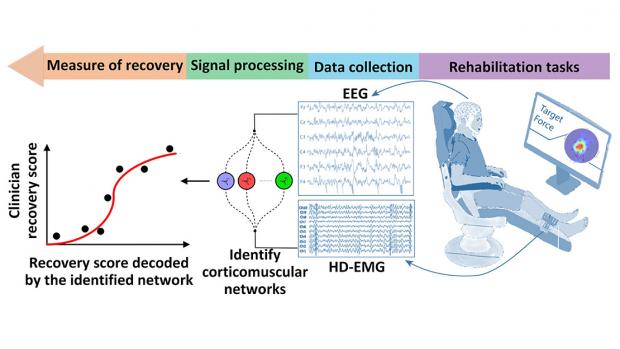

Brain-Muscle Connectivity Network for Assessing Stroke NeuroRehabilitation

This project is led by S. Farokh Atashzar, assistant professor of electrical and computer engineering at NYU Tandon; and John-Ross Rizzo, assistant professor in the Departments of Rehabilitation Medicine and Neurology at NYU Langone Health, and of mechanical and aerospace and biomedical engineering at NYU Tandon.

Stroke, the leading cause of motor disabilities, is putting tremendous pressure on healthcare infrastructure because of an imbalance between an aging society and available neurorehabilitation resources. This has led to a surge in novel rehabilitative technologies for accelerating recovery. Despite the successful development of such devices, the lack of objective standards, beyond clinical investigations that rely on subjective measures, has led to controversial recommendations regarding several devices, including robots.

This NSF/FDA Scholar-in-Residence project, designed to address the need for effective rehabilitative technologies, focuses on the design, implementation, and evaluation of a novel, objective, and robust algorithmic biomarker of recovery. Called Delta CorticoMuscular Information-based Connectivity (D-CMiC), the proposed algorithm-based protocol quantifies the connectivity between the central nervous system (CNS) and the peripheral nervous system (PNS) by simultaneously measuring electrical activity from the brain and from an ankle muscle on the affected side of post-stroke patients in recovery. The system will quantify spectrotemporal neurophysiological connectivity between the CNS, recorded with electroencephalography (EEG), and the PNS, recorded with high-density surface electromyography (HD-sEMG).
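Beyond calling it information-based, the article does not describe how D-CMiC computes connectivity. One generic way to quantify how much information two recordings share is mutual information, estimated below with a simple histogram (plug-in) estimator. A real pipeline would band-pass filter the EEG and HD-sEMG to the delta band and handle many channels and time windows, all of which this sketch omits; the synthetic signals, the assumed 500 Hz sampling rate, and the bin count are illustrative assumptions.

```python
import math
import random
from collections import Counter
from typing import List, Sequence


def discretize(x: Sequence[float], bins: int) -> List[int]:
    """Map each sample to one of `bins` equal-width bins spanning the signal's range."""
    lo, hi = min(x), max(x)
    width = (hi - lo) / bins if hi > lo else 1.0
    return [min(int((v - lo) / width), bins - 1) for v in x]


def mutual_information(x: Sequence[float], y: Sequence[float], bins: int = 8) -> float:
    """Plug-in histogram estimate of I(X;Y) in bits."""
    if len(x) != len(y):
        raise ValueError("signals must have equal length")
    n = len(x)
    xs, ys = discretize(x, bins), discretize(y, bins)
    px, py, pxy = Counter(xs), Counter(ys), Counter(zip(xs, ys))
    mi = 0.0
    for (a, b), c in pxy.items():
        # p(a,b) * log2(p(a,b) / (p(a) p(b))), written with raw counts
        mi += (c / n) * math.log2(c * n / (px[a] * py[b]))
    return mi


if __name__ == "__main__":
    rng = random.Random(0)
    fs = 500  # assumed sampling rate, Hz
    # Synthetic 2 Hz (delta-band) "EEG" rhythm plus noise.
    eeg = [math.sin(2 * math.pi * 2 * k / fs) + 0.3 * rng.gauss(0, 1) for k in range(2000)]
    emg_coupled = [0.8 * v + 0.3 * rng.gauss(0, 1) for v in eeg]  # partly follows the EEG
    emg_unrelated = [rng.gauss(0, 1) for _ in range(2000)]
    print(mutual_information(eeg, emg_coupled), mutual_information(eeg, emg_unrelated))
```

Unlike coherence, mutual information also captures nonlinear dependence, but the plug-in estimate is biased upward for short recordings, so the unrelated pair still scores slightly above zero.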

The goal of the collaborative project, beyond clarifying the neurophysiology of recovery, is to speed up the availability of more effective rehabilitation devices to patients with a range of neurological disorders beyond stroke (such as Parkinson's disease, essential tremor, and ataxia). For educational impact, the project will create a unique transdisciplinary educational environment by conducting workshops on emerging brain-computer interface (BCI) technologies in medicine and undergraduate team projects on human-machine interfacing, with a focus on promoting STEM activities among underrepresented groups.

The predictive capability, precision, and efficiency of the D-CMiC metric will be analyzed using data collected from both recovering stroke patients and healthy subjects. Unique features of D-CMiC include: (1) accurate and objective tracking of corticomuscular functional connectivity in the delta (low-frequency) band; (2) computational modeling of corticomuscular connectivity; and (3) laying the groundwork for the first medical device development tool for the systematic, objective, and transparent evaluation of pre-market rehabilitation devices, in line with the FDA's mission.

RAPID: Visualizing Epidemical Uncertainty for Personal Risk Assessment

The National Science Foundation RAPID grant for this research was obtained by Rumi Chunara and Enrico Bertini, professors in the Department of Computer Science and Engineering.

The virus that causes COVID-19 is one of the deadliest and fastest-spreading in modern history. In response to this pandemic, news agencies, government organizations, citizen scientists, and many others have released hundreds of visualizations of pandemic forecast data. While providing people with accurate information is essential, it is unclear how the average person interprets these widely distributed depictions of pandemic data. Prior research on uncertainty communication shows that even common visualizations can be confusing. One possible source of inappropriate responses to COVID-19 is a lack of knowledge about personal risk and the nature of pandemic uncertainty.

The goal of this research is to test how people understand currently available COVID-19 data visualizations and create communication guidelines based on these findings. Further, the researchers will develop an application to help people understand the factors that contribute to their risk. Users are able to interact with the application to learn about the impact of their actions on their risk. This research provides immediate solutions for teaching people about their personal risk associated with COVID-19 and how their actions influence the risks of others, which could improve the public's response and decrease fatalities. Additionally, this work supports decision making for future pandemics and any subsequent outbreaks of COVID-19 or other viruses.

Specifically, the research team, in an effort to understand how people respond to uncertainty about the nature of the pandemic, is testing the effects of currently available visualizations on personal risk judgments and behavior. By studying how changes in factors influence risk perceptions, the research can contribute to understanding how people conceptualize compound uncertainties from different sources (e.g., uncertainties associated with location, time, demographics and risk behaviors). The researchers are using this information to produce a visualization application that allows people to change the parameters of a simulation to see how the resulting changes affect their risk judgments. For example, users in one city are able to see the pandemic risk to individuals of their age in their zip code and then see how that risk would change if the infection rate increased or decreased.
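A minimal example of the kind of what-if calculation such an application could expose: if a fraction p of people in a zip code are currently infectious, each close contact transmits with probability q, and a person has n independent contacts, a simple model gives the chance of at least one infection as 1 - (1 - p·q)^n. This formula, its independence assumption, the parameter values, and the function name are illustrative assumptions, not the researchers' model.

```python
def infection_risk(prevalence: float, contacts: int, p_transmit: float) -> float:
    """Probability of at least one infection from `contacts` independent encounters.

    prevalence: fraction of people met who are currently infectious (assumed)
    p_transmit: probability that a contact with an infectious person transmits (assumed)
    """
    if not (0.0 <= prevalence <= 1.0 and 0.0 <= p_transmit <= 1.0) or contacts < 0:
        raise ValueError("probabilities must be in [0, 1] and contacts non-negative")
    per_contact = prevalence * p_transmit
    return 1.0 - (1.0 - per_contact) ** contacts


if __name__ == "__main__":
    # What-if: how does a week's risk change if local prevalence halves or doubles?
    for prevalence in (0.005, 0.01, 0.02):
        risk = infection_risk(prevalence, contacts=30, p_transmit=0.1)
        print(f"prevalence {prevalence:.3f}: weekly risk {risk:.2%}")
```

Because every input is uncertain, an interactive tool would show how the result moves as users drag the prevalence or contact sliders, rather than presenting a single number.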

The aim is to promote intuitive understanding of the epidemiological uncertainty in the forecast through participants’ experimentation with the application. While in line with current recommendations for intrinsic uncertainty visualization, this work is the first of its kind to test the effect of user interaction to convey uncertainty through visualization.

This award reflects NSF's statutory mission and has been deemed worthy of support through evaluation using the Foundation's intellectual merit and broader impacts review criteria.

Resource constrained mobile data analytics assisted by the wireless edge

The National Science Foundation grant for this research was obtained by Siddharth Garg and Elza Erkip, professors of electrical and computer engineering, and Yao Wang, professor of computer science and engineering and biomedical engineering. Wang and Erkip are also members of the NYU WIRELESS research center.

Increasing amounts of data are being collected on mobile and internet-of-things (IoT) devices. Users are interested in analyzing this data to extract actionable information, for example identifying objects of interest in high-resolution mobile phone pictures. The state-of-the-art technique for such analysis is deep learning, which uses sophisticated software algorithms loosely inspired by the functioning of the human brain. Deep learning algorithms, however, are too complex to run on small, battery-constrained mobile devices. The alternative, transmitting data to the mobile base station where the deep learning algorithm can run on a powerful server, consumes too much bandwidth.

The project supported by this NSF funding seeks to devise new methods to compress data before transmission, reducing bandwidth costs while still allowing the data to be analyzed at the base station. Departing from existing data compression methods optimized for reproducing the original images, the team will use deep learning itself to compress the data so that only the parts critical for subsequent analysis are kept. The resulting deep learning based compression algorithms will be simple enough to run on mobile devices while drastically reducing the amount of data that must be transmitted to mobile base stations for analysis, without significantly compromising analysis performance.

The proposed research will provide greater capability and functionality to mobile device users, extend battery life, and enable more efficient sharing of the wireless spectrum for analytics tasks. The project also envisions a multi-pronged outreach effort to communities of interest, educating and training the next generation of machine learning and wireless professionals at the K-12, undergraduate, and graduate levels, and broadening the participation of under-represented minority groups.

The project seeks to learn "analytics-aware" compression schemes from data, by training low-complexity deep neural networks (DNNs) for data compression that execute on mobile devices and achieve a range of transmission rate and analytics accuracy targets. As a first step, efficient DNN pruning techniques will be developed to minimize the DNN complexity, while maintaining the rate-accuracy efficiency for one or a collection of analytics tasks.
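The article does not say which pruning techniques the team will develop. A standard baseline is magnitude pruning: zero out the weights with the smallest absolute values so the network needs fewer multiply-accumulate operations on the device. The sketch applies it to a flat list of weights; the weights, the 50% sparsity target, and the function name are hypothetical, and practical pipelines usually fine-tune the network afterwards to recover accuracy.

```python
from typing import List, Sequence


def magnitude_prune(weights: Sequence[float], sparsity: float) -> List[float]:
    """Zero the fraction `sparsity` of weights that have the smallest magnitudes."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(round(sparsity * len(weights)))
    # Indices sorted by |w|, smallest first; ties broken by position for determinism.
    order = sorted(range(len(weights)), key=lambda i: (abs(weights[i]), i))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    w = [0.8, -0.05, 0.3, -0.6, 0.01, 0.2]
    print(magnitude_prune(w, 0.5))  # -> [0.8, 0.0, 0.3, -0.6, 0.0, 0.0]
```

Unstructured zeros like these save computation only with sparse kernels; structured variants that remove whole channels or filters are often preferred on mobile hardware.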

Next, to efficiently adapt to varying wireless channel conditions, the project will seek to design adaptive DNN architectures that can operate at variable transmission rates and computational complexities. For instance, when the wireless channel quality drops, the proposed compression scheme will be able to quickly reduce transmission rate in response while ensuring the same analytics accuracy, but at the cost of greater computational power on the mobile device.
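One simple way to picture this adaptive behavior is as a choice among operating points of a variable-rate encoder, each trading transmission rate against on-device computation at a measured analytics accuracy. When the channel rate drops, the controller picks, among points that still fit the channel and meet the accuracy target, the one needing the least computation. The operating points, their numbers, the selection rule, and the names below are hypothetical; the project may design this very differently.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class OperatingPoint:
    rate_kbps: float  # bits the encoder sends per second
    gflops: float     # on-device computation per second of data (hypothetical)
    accuracy: float   # analytics accuracy measured offline at this point


def choose_point(points: Sequence[OperatingPoint], channel_kbps: float,
                 min_accuracy: float) -> Optional[OperatingPoint]:
    """Cheapest operating point that fits the channel and meets the accuracy target."""
    feasible = [p for p in points
                if p.rate_kbps <= channel_kbps and p.accuracy >= min_accuracy]
    if not feasible:
        return None  # caller must relax the accuracy target or wait for a better channel
    return min(feasible, key=lambda p: (p.gflops, p.rate_kbps))


POINTS = [
    OperatingPoint(rate_kbps=800, gflops=1.0, accuracy=0.91),
    OperatingPoint(rate_kbps=400, gflops=2.5, accuracy=0.91),
    OperatingPoint(rate_kbps=200, gflops=5.0, accuracy=0.90),
    OperatingPoint(rate_kbps=100, gflops=8.0, accuracy=0.85),
]

if __name__ == "__main__":
    print(choose_point(POINTS, channel_kbps=1000, min_accuracy=0.90))  # good channel: light encoder
    print(choose_point(POINTS, channel_kbps=300, min_accuracy=0.90))   # degraded: more on-device work
```

This mirrors the trade-off stated above: holding accuracy fixed while the channel degrades shifts the cost from bandwidth to computation on the mobile device.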

Further, wireless channel allocation and scheduling policies that leverage the proposed adaptive DNN architectures will be developed to optimize the overall analytics accuracy at the server. The benefits of the proposed approach in terms of total battery life savings for the mobile device will be demonstrated using detailed simulation studies of various wireless protocols including those used for LTE (Long Term Evolution) and mmWave channels.

This award reflects NSF's statutory mission and has been deemed worthy of support through evaluation using the Foundation's intellectual merit and broader impacts review criteria.

A survey of cybersecurity of digital manufacturing

This survey was led by Nikhil Gupta, professor of mechanical and aerospace engineering and a member of the NYU Center for Cybersecurity; and Ramesh Karri, professor of electrical and computer engineering and co-founder and co-chair of the NYU Center for Cybersecurity.

The Industry 4.0 concept promotes a digital manufacturing (DM) paradigm that can enhance quality and productivity and reduce inventory and the lead time for delivering custom, batch-of-one products. It rests on the convergence of additive, subtractive, and hybrid manufacturing machines; automation and robotic systems; sensors; computing and communication networks; artificial intelligence; and big data. A DM system consists of embedded electronics, sensors, actuators, control software, and interconnectivity that enable the machines and their components to exchange data with other machines and their components, the plant operators, the inventory managers, and customers.

Digitalization of manufacturing aided by advances in sensors, artificial intelligence, robotics, and networking technology is revolutionizing the traditional manufacturing industry by rethinking manufacturing as a service.

Concurrently, demand is shifting from high-volume manufacturing to custom, batch-of-one manufacturing of products. While large manufacturing enterprises can reallocate resources and transform themselves to seize these opportunities, medium-scale enterprises (MSEs) and small-scale enterprises with limited resources need to federate and deal proactively with digitalization. Many MSEs essentially consist of general-purpose machines that give them the flexibility to execute a variety of process plans and workflows to create one-off products with complex shapes, textures, properties, and functionalities. One way MSEs can stay relevant in the next-generation DM environment is to become fully interconnected with other MSEs through the digital thread and join a larger cyber-manufacturing business network. This allows MSEs to make their resources visible to the market and to continue serving as suppliers to OEMs and other parts of the manufacturing supply networks.

This article, whose authors include researchers from NYU Tandon and Texas A&M, explores the cybersecurity risks in the emerging DM context, assesses their impact on manufacturing, and identifies approaches to secure DM. It presents a hybrid-manufacturing cell, a building block of DM, and uses it to discuss vulnerabilities; discusses a taxonomy of threats for DM; explores attack case studies; surveys existing taxonomies in DM systems; and demonstrates how novel manufacturing-unique defenses can mitigate the attacks.

The team's research is supported, in part, by the National Science Foundation.