Siddharth Garg


Professor

Siddharth Garg is currently a Professor of ECE at NYU Tandon, where he leads the EnSuRe Research group. Prior to that, he was an Assistant Professor, also in ECE, from 2014 to 2020, and an Assistant Professor of ECE at the University of Waterloo from 2010 to 2014. His research interests are in machine learning, cyber-security and computer hardware design.

He received his Ph.D. degree in Electrical and Computer Engineering from Carnegie Mellon University in 2009, and a B.Tech. degree in Electrical Engineering from the Indian Institute of Technology Madras. In 2016, Siddharth was listed in Popular Science Magazine's annual list of "Brilliant 10" researchers. Siddharth has received the NSF CAREER Award (2015), and paper awards at the IEEE Symposium on Security and Privacy (S&P) 2016, the USENIX Security Symposium 2013, the Semiconductor Research Consortium TECHCON in 2010, and the International Symposium on Quality in Electronic Design (ISQED) in 2009. Siddharth also received the Angel G. Jordan Award from the ECE department of Carnegie Mellon University for outstanding thesis contributions and service to the community. He serves on the technical program committees of several top conferences in the area of computer engineering and computer hardware, and has served as a reviewer for several IEEE and ACM journals.

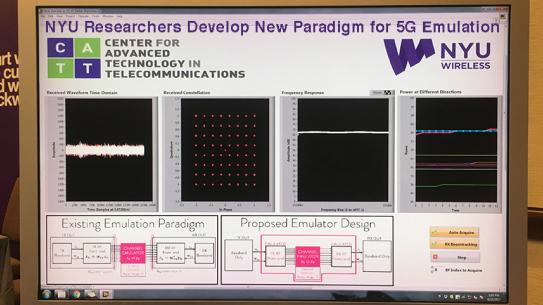

His research is supported in part by NYU WIRELESS.

Education

Stanford University, 2005

M.S., Electrical Engineering

Indian Institute of Technology Madras, 2004

B.Tech., Electrical Engineering

Carnegie Mellon University, 2009

Ph.D., Electrical and Computer Engineering

Experience

Carnegie Mellon University

Post-doctoral Fellow

From: October 2009 to August 2010

University of Waterloo

Assistant Professor of Electrical and Computer Engineering

From: September 2010 to August 2014

Publications

Journal Articles

A nearly complete list of my publications can be found on my personal website or via my Google Scholar page.

Awards

- Popular Science Magazine's "Brilliant 10" of 2016

- Distinguished Student Paper Award, IEEE Symp. on Security & Privacy (Oakland) 2016

- NSF CAREER Award 2015

- Best Student Paper Award, USENIX Security Symposium 2013

- Angel G. Jordan Award for thesis contributions, CMU 2010

- Best Paper Award, International Symposium on Quality in Electronic Design (ISQED) 2009

Research News

New NYU Tandon-led project will accelerate privacy-preserving computing

Today's most advanced cryptographic computing technologies — which enable privacy-preserving computation — are trapped in research labs by one critical barrier: they're thousands of times too slow for everyday use.

NYU Tandon, helming a research team that includes Stanford University and the City University of New York, just received funding from a $3.8 million grant from the National Science Foundation to build the missing infrastructure that could make those technologies practical, via a new design platform and library that allows researchers to develop and share chip designs.

The problem is stark. Running a simple AI model on encrypted data takes over 10 minutes instead of milliseconds, a performance gap of four orders of magnitude that impedes many real-world use cases.

Current approaches to speeding up cryptographic computing have hit a wall, however. "The normal tricks that we have to get over this performance bottleneck won’t scale much further, so we have to do something different," said Brandon Reagen, the project's lead investigator. Reagen is an NYU Tandon assistant professor with appointments in the Electrical and Computer Engineering (ECE) Department and in the Computer Science and Engineering (CSE) Department. He is also on the faculty of NYU's Center for Advanced Technology in Telecommunications (CATT) and the NYU Center for Cybersecurity (CCS).

The team's solution is a new platform called "Cryptolets."

Currently, researchers working on privacy chips must build everything from scratch. Cryptolets will provide three things: a library where researchers can share and access pre-built, optimized hardware designs for privacy computing; tools that allow multiple smaller chips to work together as one powerful system; and automated testing to ensure contributed designs work correctly and securely.

This chiplet approach — using multiple small, specialized chips working together — is a departure from traditional single, monolithic chip optimization, potentially breaking through performance barriers.

For Reagen, this project represents the next stage of his research approach. "For years, most of our academic research has been working in simulation and modeling," he said. "I want to pivot to building. I’d like to see real-world encrypted data run through machine learning workloads in the cloud without the cloud ever seeing your data. You could, for example, prove you are who you say you are without actually revealing your driver's license, social security number, or birth certificate."

What sets this project apart is its community-building approach. The researchers are creating competitions where students and other researchers use Cryptolets to compete in designing the best chip components. The project plans to organize annual challenges at major cybersecurity and computer architecture conferences. The first workshop, which focuses on hardware for zero-knowledge proofs, will take place in October 2025 at MICRO 2025.

"We want to build a community, too, so everyone's not working in their own silos," Reagen said. The project will support fabrication opportunities for competition winners, with plans to assist tapeouts of smaller designs initially and larger full-system tapeouts in the later phases, helping participants who lack chip fabrication resources at their home institutions.

"With Cryptolets, we are not just funding a new hardware platform; we are enabling a community-wide leap in how privacy-preserving computation can move from theory to practice," said Deep Medhi, program director in the Computer & Information Sciences & Engineering Directorate at the U.S. National Science Foundation. "By lowering barriers for researchers and students to design, share and test cryptographic chips, this project aligns with NSF's mission to advance secure, trustworthy and accessible technologies that benefit society at large."

If the project succeeds, it could enable a future where strong digital privacy isn't just theoretically possible, but practically deployable at scale, from protecting personal health data to securing financial transactions to enabling private AI assistants that never see people's actual queries.

Along with Reagen, the team is led by NYU Tandon co-investigators Ramesh Karri, ECE Professor and Department Chair, and faculty member of CATT and CCS; Siddharth Garg, Professor in ECE and faculty member of NYU WIRELESS and CCS; Austin Rovinski, Assistant Professor in ECE; the City College of New York’s Rosario Gennaro and Tushar Jois; and Stanford's Thierry Tambe and Caroline Trippel, with Warren Savage serving as project manager. The team also includes industry advisors from companies working on cryptographic technologies.

NYU Tandon engineers create first AI model specialized for chip design language, earning top journal honor

Researchers at NYU Tandon School of Engineering have created VeriGen, the first specialized artificial intelligence model successfully trained to generate Verilog code, the programming language that describes how a chip's circuitry functions.

The research just earned the ACM Transactions on Design Automation of Electronic Systems 2024 Best Paper Award, affirming it as a major advance in automating the creation of hardware description languages that have traditionally required deep technical expertise.

"General purpose AI models are not very good at generating Verilog code, because there's very little Verilog code on the Internet available for training," said lead author Institute Professor Siddharth Garg, who sits in NYU Tandon’s Department of Electrical and Computer Engineering (ECE) and serves on the faculty of NYU WIRELESS and NYU Center for Cybersecurity (CCS). "These models tend to do well on programming languages that are well represented on GitHub, like C and Python, but tend to do a lot worse on poorly represented languages like Verilog."

Along with Garg, a team of NYU Tandon Ph.D. students, postdoctoral researchers, and faculty members Ramesh Karri and Brendan Dolan-Gavitt tackled this challenge by creating and distributing the largest AI training dataset of Verilog code ever assembled. They scoured GitHub to gather approximately 50,000 Verilog files from public repositories, and supplemented this with content from 70 Verilog textbooks. This data collection process required careful filtering and de-duplication to create a high-quality training corpus.

For their most powerful model, the researchers then fine-tuned Salesforce's open-source CodeGen-16B language model, which contains 16 billion parameters and was originally pre-trained on both natural language and programming code.

The computational demands were substantial. Training required three NVIDIA A100 GPUs working in parallel, with the model parameters alone consuming 30 GB of memory and the full training process requiring approximately 250 GB of GPU memory.
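The parameter-memory figure can be sanity-checked with a short calculation. This is a minimal sketch that assumes each of the 16 billion parameters is stored in a 16-bit format (2 bytes); the article does not state the precision, so that value and the helper name `param_memory_bytes` are illustrative assumptions.

```python
def param_memory_bytes(num_params: int, bytes_per_param: int) -> int:
    """Memory needed to hold the model weights alone, in bytes."""
    return num_params * bytes_per_param


# 16 billion parameters, as stated for CodeGen-16B in the article.
NUM_PARAMS = 16_000_000_000

# Assumption: 16-bit weights (2 bytes each). Not stated in the article.
BYTES_PER_PARAM = 2

weights_bytes = param_memory_bytes(NUM_PARAMS, BYTES_PER_PARAM)
weights_gb = weights_bytes / 1e9        # decimal gigabytes
weights_gib = weights_bytes / 2**30     # binary gibibytes

print(f"weights: {weights_gb:.1f} GB ({weights_gib:.1f} GiB)")
```

Under that assumption the weights come to about 32 GB, or roughly 30 GiB, which is in line with the quoted 30 GB. The much larger training footprint would additionally cover items such as gradients, optimizer state, and activations, which this sketch does not model.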

This fine-tuned model performed impressively in testing, outperforming commercial state-of-the-art models while being an order of magnitude smaller and fully open-source. In their evaluation, the fine-tuned CodeGen-16B achieved a 41.9% rate of functionally correct code versus 35.4% for the commercial code-davinci-002 model — with fine-tuning boosting accuracy from just 1.09% to 27%, demonstrating the significant advantage of domain-specific training.

"We've shown that by fine-tuning a model on that specific task you care about, you can get orders of magnitude reduction in the size of the model," Garg noted, highlighting how their approach improved both accuracy and efficiency. The smaller size enables the model to run on standard laptops rather than requiring specialized hardware.

The team evaluated VeriGen's capabilities across a range of increasingly complex hardware design tasks, from basic digital components to advanced finite state machines. While still not perfect — particularly on the most complex challenges — VeriGen demonstrated remarkable improvements over general-purpose models, especially in generating syntactically correct code.

The significance of this work has been recognized in the field, with subsequent research by NVIDIA in 2025 acknowledging VeriGen as one of the earliest and most important benchmarks for LLM-based Verilog generation, helping establish foundations for rapid advancements in AI-assisted hardware design.

The project's open-source nature has already sparked significant interest in the field. While VeriGen was the team's first model presented in the ACM paper, they've since developed an improved family of models called 'CL Verilog' that perform even better.

These newer models have been provided to hardware companies including Qualcomm and NXP for evaluation of potential commercial applications. The work builds upon earlier NYU Tandon efforts including the 2020 DAVE (Deriving Automatically Verilog from English) project, advancing the field by creating a more comprehensive solution through large-scale fine-tuning of language models.

VeriGen complements other AI-assisted chip design initiatives from NYU Tandon aimed at democratizing hardware: their Chip Chat project created the first functional microchip designed through natural language conversations with GPT-4; Chips4All, supported by the National Science Foundation's (NSF’s) Research Traineeship program, trains diverse STEM graduate students in chip design; and BASICS, funded through NSF's Experiential Learning for Emerging and Novel Technologies initiative, teaches chip design to non-STEM professionals.

In addition to Garg, the VeriGen paper authors are Shailja Thakur (former NYU Tandon), Baleegh Ahmad (NYU Tandon Ph.D. '25), Hammond Pearce (former NYU Tandon; currently University of New South Wales), Benjamin Tan (University of Calgary), Dolan-Gavitt (NYU Tandon Associate Professor of Computer Science and Engineering (CSE) and CCS faculty), and Karri (NYU Tandon Professor of ECE and CCS faculty).

Funding for the VeriGen research came from the National Science Foundation and the Army Research Office.

Shailja Thakur, Baleegh Ahmad, Hammond Pearce, Benjamin Tan, Brendan Dolan-Gavitt, Ramesh Karri, and Siddharth Garg. 2024. VeriGen: A Large Language Model for Verilog Code Generation. ACM Trans. Des. Autom. Electron. Syst. 29, 3, Article 46 (May 2024), 31 pages.

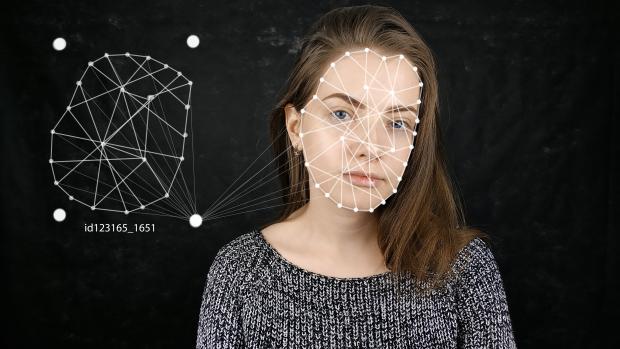

Studying the online deepfake community

In the evolving landscape of digital manipulation and misinformation, deepfake technology has emerged as a dual-use technology. While the technology has diverse applications in art, science, and industry, its potential for malicious use in areas such as disinformation, identity fraud, and harassment has raised concerns about its dangerous implications. Consequently, a number of deepfake creation communities, including the pioneering r/deepfakes on Reddit, have faced deplatforming measures to mitigate risks.

A noteworthy development unfolded in February 2018, just over a week after the removal of r/deepfakes, as MrDeepFakes (MDF) made its entrance into the online realm. Functioning as a privately owned platform, MDF positioned itself as a community hub, boasting to be the largest online space dedicated to deepfake creation and discussion. Notably, this purported communal role sharply contrasts with the platform's primary function — serving as a host for nonconsensual deepfake pornography.

Researchers at NYU Tandon led by Rachel Greenstadt, Professor of Computer Science and Engineering and a member of the NYU Center for Cybersecurity, undertook an exploration of these two key deepfake communities utilizing a mixed methods approach, combining quantitative and qualitative analysis. The study aimed to uncover patterns of utilization by community members, the prevailing opinions of deepfake creators regarding the technology and its societal perception, and attitudes toward deepfakes as potential vectors of disinformation.

Their analysis, presented in a paper written by lead author and Ph.D. candidate Brian Timmerman, revealed a nuanced understanding of the community dynamics on these boards. Within both MDF and r/deepfakes, the predominant discussions lean towards technical intricacies, with many members expressing a commitment to lawful and ethical practices. However, the primary content produced or requested within these forums was nonconsensual and pornographic deepfakes. Adding to the complexity are facesets that raise concerns, hinting at potential mis- and disinformation implications, with politicians, business leaders, religious figures, and news anchors comprising 22.3% of all faceset listings.

In addition to Greenstadt and Timmerman, the research team includes Pulak Mehta, Progga Deb, Kevin Gallagher, Brendan Dolan-Gavitt, and Siddharth Garg.

Timmerman, B., Mehta, P., Deb, P., Gallagher, K., Dolan-Gavitt, B., Garg, S., Greenstadt, R. (2023). Studying the online Deepfake Community. Journal of Online Trust and Safety, 2(1). https://doi.org/10.54501/jots.v2i1.126

DeepReDuce: ReLU Reduction for Fast Private Inference

This research was led by Brandon Reagen, assistant professor of computer science and electrical and computer engineering, with Nandan Kumar Jha, a Ph.D. student under Reagen, and Zahra Ghodsi, who obtained her Ph.D. at NYU Tandon under Siddharth Garg, Institute associate professor of electrical and computer engineering.

Concerns about data privacy are changing how companies use and store users’ data. Additionally, lawmakers are passing legislation to improve users’ privacy rights. Deep learning is the core driver of many applications impacted by privacy concerns. It provides high utility in classifying, recommending, and interpreting user data to build user experiences and requires large amounts of private user data to do so. Private inference (PI) is a solution that provides strong privacy guarantees while preserving the utility of neural networks to power applications.

Homomorphic data encryption, which allows inferences to be made directly on encrypted data, is a solution that addresses the rise of privacy concerns for personal, medical, military, government and other sensitive information. However, the primary challenge facing private inference is that computing on encrypted data levies an impractically high penalty on latency, stemming mostly from non-linear operators like ReLU (rectified linear activation function).

Solving this challenge requires new optimization methods that minimize network ReLU counts while preserving accuracy. One approach is minimizing the use of ReLU by eliminating uses of this function that do little to contribute to the accuracy of inferences.

“What we are trying to do there is rethink how neural nets are designed in the first place,” said Reagen. “You can skip a lot of these time and computationally-expensive ReLU operations and still get high performing networks at 2 to 4 times faster run time.”

The team proposed DeepReDuce, a set of optimizations for the judicious removal of ReLUs to reduce private inference latency. The researchers tested this by dropping ReLUs from classic networks to significantly reduce inference latency while maintaining high accuracy.
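The idea of dropping ReLUs can be illustrated with a toy example. The sketch below is not the DeepReDuce algorithm, which decides judiciously which ReLUs to remove; it only shows, under assumed toy weights and a hypothetical `forward` helper, how replacing a layer's ReLU with the identity lowers the number of ReLU operations a private-inference protocol would have to evaluate.

```python
from typing import List, Sequence, Tuple

# A layer is (weight rows, bias vector); all values here are toy assumptions.
Layer = Tuple[List[List[float]], List[float]]


def relu(x: float) -> float:
    """Rectified linear unit: max(x, 0)."""
    return x if x > 0.0 else 0.0


def dense(weights: List[List[float]], bias: List[float],
          inputs: Sequence[float]) -> List[float]:
    """Plain fully connected layer: weights @ inputs + bias."""
    return [sum(w * v for w, v in zip(row, inputs)) + b
            for row, b in zip(weights, bias)]


def forward(layers: List[Layer], inputs: Sequence[float],
            keep_relu: List[bool]) -> Tuple[List[float], int]:
    """Run the network, applying ReLU only where keep_relu is True.

    Returns the output and the number of ReLU evaluations performed,
    which is the cost that dominates private-inference latency.
    """
    relu_count = 0
    x = list(inputs)
    for (weights, bias), keep in zip(layers, keep_relu):
        x = dense(weights, bias, x)
        if keep:
            x = [relu(v) for v in x]
            relu_count += len(x)
        # When keep is False the layer stays linear (identity activation).
    return x, relu_count


# Hypothetical two-layer network and input, chosen only for illustration.
toy_layers: List[Layer] = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.0]),
]
toy_input = [2.0, 1.0]

full_out, full_count = forward(toy_layers, toy_input, [True, True])
pruned_out, pruned_count = forward(toy_layers, toy_input, [True, False])

print(full_out, full_count)
print(pruned_out, pruned_count)
```

On this particular input the final ReLU acts on a positive value, so removing it leaves the output unchanged while cutting the ReLU count from 3 to 2. In a real network the accuracy impact has to be measured, which is why the choice of which ReLUs to drop matters.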

The team found that, compared to the state-of-the-art for private inference, DeepReDuce improved accuracy and reduced ReLU count by up to 3.5% (iso-ReLU count) and 3.5× (iso-accuracy), respectively.

The work extends an innovation called CryptoNAS. Described in an earlier paper whose authors include Ghodsi and a third Ph.D. student, Akshaj Veldanda, CryptoNAS optimizes the use of ReLUs as one might rearrange rocks in a stream to optimize the flow of water: it rebalances the distribution of ReLUs in the network and removes redundant ReLUs.

The investigators will present their work on DeepReDuce at the 2021 International Conference on Machine Learning (ICML) from July 18-24, 2021.