Brendan Dolan-Gavitt

Associate Professor

Brendan Dolan-Gavitt is an Associate Professor in the Computer Science and Engineering Department at NYU Tandon. He holds a Ph.D. in computer science from Georgia Tech (2014) and a BA in Math and Computer Science from Wesleyan University (2006).

Dolan-Gavitt's research interests span many areas of cybersecurity, including program analysis, virtualization security, memory forensics, and embedded and cyber-physical systems. His research focuses on developing techniques to ease or automate the understanding of large, real-world software systems in order to develop novel defenses against attacks, typically by subjecting those systems to static and dynamic analyses that reveal hidden and undocumented assumptions about their design and behavior.

His work has been presented at top security conferences such as USENIX Security, the ACM Conference on Computer and Communications Security (CCS), and the IEEE Symposium on Security and Privacy. He also led the development of PANDA, an open-source platform for architecture-neutral dynamic analysis, which has users around the world and has been featured in technical press such as The Register. Prior to joining NYU, he was a postdoctoral researcher at Columbia University.

Education

Georgia Institute of Technology 2014

Doctor of Philosophy, Computer Science

Wesleyan University 2006

Bachelor of Arts, Computer Science and Mathematics

Publications

Journal Articles

Brendan Dolan-Gavitt, Patrick Hulin, Engin Kirda, Tim Leek, Andrea Mambretti, Wil Robertson, Frederick Ulrich, and Ryan Whelan. LAVA: Large-scale Automated Vulnerability Addition. Proceedings of the IEEE Symposium on Security and Privacy (Oakland), May 2016.

Brendan Dolan-Gavitt, Josh Hodosh, Patrick Hulin, Tim Leek, and Ryan Whelan. Repeatable Reverse Engineering with PANDA. Program Protection and Reverse Engineering Workshop (PPREW), December 2015.

Brendan Dolan-Gavitt, Tim Leek, Josh Hodosh, and Wenke Lee. Tappan Zee (North) Bridge: Mining Memory Accesses for Introspection. Proceedings of the ACM Conference on Computer and Communications Security (CCS), November 2013.

Brendan Dolan-Gavitt, Tim Leek, Michael Zhivich, Jonathon Giffin, and Wenke Lee. Virtuoso: Narrowing the Semantic Gap in Virtual Machine Introspection. Proceedings of the IEEE Symposium on Security and Privacy (Oakland), May 2011.

Brendan Dolan-Gavitt, Abhinav Srivastava, Patrick Traynor, and Jonathon Giffin. Robust Signatures for Kernel Data Structures. Proceedings of the ACM Conference on Computer and Communications Security (CCS), November 2009.

Brendan Dolan-Gavitt. The VAD tree: A process-eye view of physical memory. Digital Investigation, Volume 4, Supplement 1, September 2007, Pages 62-64.

Brendan Dolan-Gavitt. Forensic analysis of the Windows registry in memory. Digital Investigation, Volume 5, Supplement 1, September 2008, Pages S26-S32.

Other Publications

Brendan Dolan-Gavitt, Bryan Payne, and Wenke Lee. Leveraging Forensic Tools for Virtual Machine Introspection. Technical Report GT-CS-11-05, May 2011.

Brendan Dolan-Gavitt and Yacin Nadji. See No Evil: Evasions in Honeymonkey Systems. May 2010.

Brendan Dolan-Gavitt and Patrick Traynor. Using Kernel Type Graphs to Detect Dummy Structures. December 2008.

Research News

NYU Tandon engineers create first AI model specialized for chip design language, earning top journal honor

Researchers at NYU Tandon School of Engineering have created VeriGen, the first specialized artificial intelligence model successfully trained to generate Verilog code, the programming language that describes how a chip's circuitry functions.

The research just earned the ACM Transactions on Design Automation of Electronic Systems 2024 Best Paper Award, affirming it as a major advance in automating the creation of hardware description languages that have traditionally required deep technical expertise.

"General purpose AI models are not very good at generating Verilog code, because there's very little Verilog code on the Internet available for training," said lead author Institute Professor Siddharth Garg, who sits in NYU Tandon’s Department of Electrical and Computer Engineering (ECE) and serves on the faculty of NYU WIRELESS and NYU Center for Cybersecurity (CCS). "These models tend to do well on programming languages that are well represented on GitHub, like C and Python, but tend to do a lot worse on poorly represented languages like Verilog."

Along with Garg, a team of NYU Tandon Ph.D. students, postdoctoral researchers, and faculty members Ramesh Karri and Brendan Dolan-Gavitt tackled this challenge by creating and distributing the largest AI training dataset of Verilog code ever assembled. They scoured GitHub to gather approximately 50,000 Verilog files from public repositories, and supplemented this with content from 70 Verilog textbooks. This data collection process required careful filtering and de-duplication to create a high-quality training corpus.

For their most powerful model, the researchers then fine-tuned Salesforce's open-source CodeGen-16B language model, which contains 16 billion parameters and was originally pre-trained on both natural language and programming code.

The computational demands were substantial. Training required three NVIDIA A100 GPUs working in parallel, with the model parameters alone consuming 30 GB of memory and the full training process requiring approximately 250 GB of GPU memory.
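The 30 GB figure for the parameters is roughly what a back-of-the-envelope estimate gives. The sketch below multiplies the 16 billion parameters stated above by an assumed 2 bytes per parameter (16-bit weights). The article does not state the numeric precision used, so `BYTES_PER_PARAMETER` and the helper name are illustrative assumptions, not details from the paper.

```python
def parameter_memory_bytes(num_parameters: int, bytes_per_parameter: int) -> int:
    """Return the memory needed to hold the model weights alone."""
    return num_parameters * bytes_per_parameter


# Parameter count stated in the article for CodeGen-16B.
NUM_PARAMETERS = 16_000_000_000

# Assumption (not from the article): weights stored as 16-bit values.
BYTES_PER_PARAMETER = 2

total_bytes = parameter_memory_bytes(NUM_PARAMETERS, BYTES_PER_PARAMETER)

# Report the result in decimal gigabytes and binary gibibytes.
print(f"{total_bytes / 1e9:.1f} GB")
print(f"{total_bytes / 2**30:.1f} GiB")
```

Under that assumption the weights alone come to 32 GB in decimal units, or about 29.8 GiB, which is in line with the reported 30 GB. The estimate does not attempt to reproduce the approximately 250 GB needed for training, which would typically also include gradients, optimizer state, and activations.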

This fine-tuned model performed impressively in testing, outperforming commercial state-of-the-art models while being an order of magnitude smaller and fully open-source. In their evaluation, the fine-tuned CodeGen-16B achieved a 41.9% rate of functionally correct code versus 35.4% for the commercial code-davinci-002 model — with fine-tuning boosting accuracy from just 1.09% to 27%, demonstrating the significant advantage of domain-specific training.

"We've shown that by fine-tuning a model on that specific task you care about, you can get orders of magnitude reduction in the size of the model," Garg noted, highlighting how their approach improved both accuracy and efficiency. The smaller size enables the model to run on standard laptops rather than requiring specialized hardware.

The team evaluated VeriGen's capabilities across a range of increasingly complex hardware design tasks, from basic digital components to advanced finite state machines. While still not perfect — particularly on the most complex challenges — VeriGen demonstrated remarkable improvements over general-purpose models, especially in generating syntactically correct code.

The significance of this work has been recognized in the field, with subsequent research by NVIDIA in 2025 acknowledging VeriGen as one of the earliest and most important benchmarks for LLM-based Verilog generation, helping establish foundations for rapid advancements in AI-assisted hardware design.

The project's open-source nature has already sparked significant interest in the field. While VeriGen was the team's first model presented in the ACM paper, they've since developed an improved family of models called 'CL Verilog' that perform even better.

These newer models have been provided to hardware companies including Qualcomm and NXP for evaluation of potential commercial applications. The work builds upon earlier NYU Tandon efforts including the 2020 DAVE (Deriving Automatically Verilog from English) project, advancing the field by creating a more comprehensive solution through large-scale fine-tuning of language models.

VeriGen complements other AI-assisted chip design initiatives from NYU Tandon aimed at democratizing hardware: their Chip Chat project created the first functional microchip designed through natural language conversations with GPT-4; Chips4All, supported by the National Science Foundation's (NSF’s) Research Traineeship program, trains diverse STEM graduate students in chip design; and BASICS, funded through NSF's Experiential Learning for Emerging and Novel Technologies initiative, teaches chip design to non-STEM professionals.

In addition to Garg, the VeriGen paper authors are Shailja Thakur (formerly NYU Tandon), Baleegh Ahmad (NYU Tandon PhD '25), Hammond Pearce (formerly NYU Tandon; currently University of New South Wales), Benjamin Tan (University of Calgary), Dolan-Gavitt (NYU Tandon Associate Professor of Computer Science and Engineering (CSE) and CCS faculty), and Karri (NYU Tandon Professor of ECE and CCS faculty).

Funding for the VeriGen research came from the National Science Foundation and the Army Research Office.

Shailja Thakur, Baleegh Ahmad, Hammond Pearce, Benjamin Tan, Brendan Dolan-Gavitt, Ramesh Karri, and Siddharth Garg. 2024. VeriGen: A Large Language Model for Verilog Code Generation. ACM Trans. Des. Autom. Electron. Syst. 29, 3, Article 46 (May 2024), 31 pages.

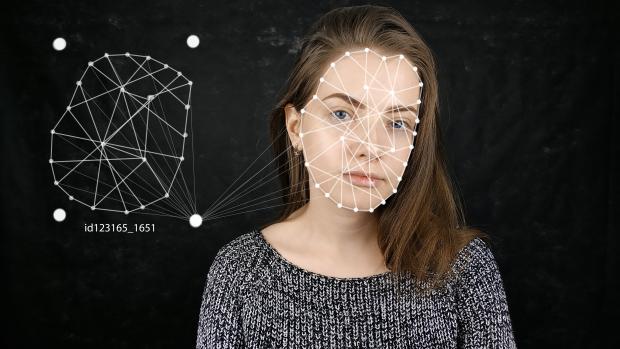

Studying the online deepfake community

In the evolving landscape of digital manipulation and misinformation, deepfake technology has emerged as a dual-use technology. While the technology has diverse applications in art, science, and industry, its potential for malicious use in areas such as disinformation, identity fraud, and harassment has raised concerns about its dangerous implications. Consequently, a number of deepfake creation communities, including the pioneering r/deepfakes on Reddit, have faced deplatforming measures to mitigate risks.

A noteworthy development unfolded in February 2018, just over a week after the removal of r/deepfakes, when MrDeepFakes (MDF) launched. Functioning as a privately owned platform, MDF positioned itself as a community hub, claiming to be the largest online space dedicated to deepfake creation and discussion. Notably, this purported communal role sharply contrasts with the platform's primary function: serving as a host for nonconsensual deepfake pornography.

Researchers at NYU Tandon led by Rachel Greenstadt, Professor of Computer Science and Engineering and a member of the NYU Center for Cybersecurity, undertook an exploration of these two key deepfake communities utilizing a mixed methods approach, combining quantitative and qualitative analysis. The study aimed to uncover patterns of utilization by community members, the prevailing opinions of deepfake creators regarding the technology and its societal perception, and attitudes toward deepfakes as potential vectors of disinformation.

Their analysis, presented in a paper written by lead author and Ph.D. candidate Brian Timmerman, revealed a nuanced understanding of the community dynamics on these boards. Within both MDF and r/deepfakes, the predominant discussions lean towards technical intricacies, with many members expressing a commitment to lawful and ethical practices. However, the primary content produced or requested within these forums was nonconsensual and pornographic deepfakes. Adding to the complexity are facesets that raise concerns about potential mis- and disinformation: politicians, business leaders, religious figures, and news anchors make up 22.3% of all faceset listings.

In addition to Greenstadt and Timmerman, the research team includes Pulak Mehta, Progga Deb, Kevin Gallagher, Brendan Dolan-Gavitt, and Siddharth Garg.

Timmerman, B., Mehta, P., Deb, P., Gallagher, K., Dolan-Gavitt, B., Garg, S., Greenstadt, R. (2023). Studying the online Deepfake Community. Journal of Online Trust and Safety, 2(1). https://doi.org/10.54501/jots.v2i1.126