Ramesh Karri

- Electrical and Computer Engineering Department Chair

- Professor of ECE

- Co-founded NYU CCS (2009)

- Co-Directed NYU CCS (2016-2024)

Ramesh Karri is a Professor of Electrical and Computer Engineering at New York University. He co-founded the NYU Center for Cyber Security (cyber.nyu.edu) in 2009 and co-directed the center from 2016 to 2024. He co-founded Trust-Hub (trust-hub.org) and founded and organizes the Embedded Security Challenge (csaw.engineering.nyu.edu/esc), an annual red-blue team event.

Ramesh Karri holds a Ph.D. in Computer Science and Engineering from the University of California at San Diego, and a B.E. in ECE from Andhra University. With a focus on hardware cybersecurity, his research and educational endeavors encompass trustworthy ICs, processors, and cyber-physical systems; security-aware computer-aided design, test, verification, validation, and reliability; nano meets security; hardware security competitions, benchmarks, and metrics; biochip security; and additive manufacturing security. Ramesh has published over 350 articles in prestigious journals and conferences.

Ramesh is a Fellow of IEEE. His work on hardware cybersecurity has earned numerous best paper award nominations (IEEE S&P 2022, ICCD 2015, and DFTS 2015) and awards (ITC 2014, CCS 2013, DFTS 2013, VLSI Design 2012, ACM Student Research Competition at DAC 2012, ICCAD 2013, DAC 2014, ACM Grand Finals 2013, Kaspersky Challenge, and Embedded Security Challenge). He received the Humboldt Fellowship and the National Science Foundation CAREER award. He is the Editor-in-Chief of the ACM Journal of Emerging Computing Technologies and an Associate Editor for IEEE and ACM journals. He has held leadership roles in various IEEE conferences, including ICCD, HOST, and DFTS. He served as an IEEE Computer Society Distinguished Visitor from 2013 to 2015 and was on the Executive Committee for Security@DAC from 2014 to 2017. Additionally, he has served on multiple program committees and delivered keynotes on Hardware Security and Trust at events such as ESRF, DAC, and MICRO.

Education

University of Hyderabad 1988

Master of Technology, Computer Science

Andhra University 1985

Bachelor of Engineering, Electronics and Communication Engineering

University of California, San Diego 1992

Master of Science, Computer Engineering

University of California, San Diego 1993

Doctor of Philosophy, Computer Science

Experience

NYU Tandon School of Engineering

Professor

Research and teaching in computer engineering. Current research focus is on trustworthy and secure hardware.

From: September 2011 to present

Polytechnic Institute of New York University

Associate Professor

Research and teaching in computer engineering. Current research focus is on trustworthy and secure hardware.

From: September 1998 to August 2011

Lucent Bell Labs Engineering Research Center, Princeton

Member of Technical Staff

On-line built-in self test of VLSICs

From: June 1997 to July 1998

University of Massachusetts, Amherst

Assistant Professor of Electrical and Computer Engineering

Research and teaching in computer engineering.

From: September 1993 to July 1998

University of California, San Diego

Graduate Teaching and Research Assistant

From: September 1989 to August 1993

Fifth Generation Computing Group, CMC Research and Development Ce

Research Engineer

Implemented multiprocessor cache consistency protocols and evaluated their performance and scalability.

From: May 1988 to June 1989

Research News

AI tools can help hackers plant hidden flaws in computer chips, study finds

Widely available artificial intelligence systems can be used to deliberately insert hard-to-detect security vulnerabilities into the code that defines computer chips, according to new research from the NYU Tandon School of Engineering, a warning about the potential weaponization of AI in hardware design.

In a study published by IEEE Security & Privacy, an NYU Tandon research team showed that large language models like ChatGPT could help both novices and experts create "hardware Trojans," malicious modifications hidden within chip designs that can leak sensitive information, disable systems or grant unauthorized access to attackers.

To test whether AI could facilitate malicious hardware modifications, the researchers organized a competition over two years called the AI Hardware Attack Challenge as part of CSAW, an annual student-run cybersecurity event held by the NYU Center for Cybersecurity.

Participants were challenged to use generative AI to insert exploitable vulnerabilities into open-source hardware designs, including RISC-V processors and cryptographic accelerators, then demonstrate working attacks.

"AI tools definitely simplify the process of adding these vulnerabilities," said Jason Blocklove, a Ph.D. candidate in NYU Tandon’s Electrical and Computer Engineering (ECE) Department and lead author of the study. "Some teams fully automated the process. Others interacted with large language models to understand the design better, identify where vulnerabilities could be inserted, and then write relatively simple malicious code."

The most effective submissions came from teams that created automated tools requiring minimal human oversight. These systems could analyze hardware code to identify vulnerable locations, then generate and insert custom trojans without direct human intervention. The AI-generated flaws included backdoors granting unauthorized memory access, mechanisms to leak encryption keys, and logic designed to crash systems under specific conditions.

Perhaps most concerning, several teams with little hardware expertise successfully created sophisticated attacks. Two submissions came from undergraduate teams with minimal prior knowledge of chip design or security, yet both produced vulnerabilities rated medium to high severity by standard scoring systems.

Most large language models include safeguards designed to prevent malicious use, but competition participants found these protections relatively easy to circumvent. One winning team crafted prompts framing malicious requests as academic scenarios, successfully inducing the AI to generate working hardware trojans. Other teams discovered that requesting responses in less common languages could bypass content filters entirely.

The permanence of hardware vulnerabilities amplifies the risk. Unlike software flaws that can be corrected through updates, errors in manufactured chips cannot be fixed without replacing the components entirely.

"Once a chip has been manufactured, there is no way to fix anything in it without replacing the components themselves," Blocklove said. "That's why researchers focus on hardware security. We’re getting ahead of problems that don't exist in the real world yet but could conceivably occur. If such an attack did happen, the consequences could be catastrophic."

The research follows earlier work by the same team demonstrating AI's potential benefits for chip design. In their "Chip Chat" project, the researchers showed that ChatGPT could help design a functioning microprocessor. The new study reveals the technology's dual nature. The same capabilities that could democratize chip design might also enable new forms of attack.

"This competition has highlighted both a need for improved LLM guardrails as well as a major need for improved verification and security analysis tools," the researchers wrote.

The researchers emphasized that commercially available AI models represent only the beginning of potential threats. More specialized open-source models, which remain largely unexplored for these purposes, could prove even more capable of generating sophisticated hardware attacks.

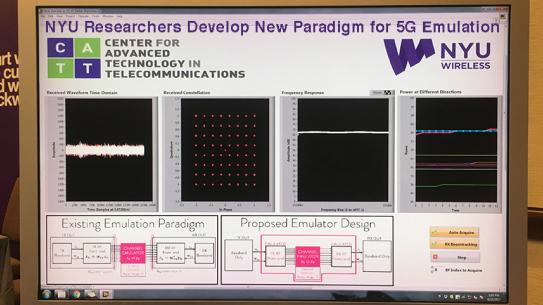

The paper’s senior author is NYU Tandon’s Ramesh Karri, Professor and Chair of ECE. Karri is also on the faculty of the Center for Advanced Technology in Telecommunications and co-founded and co-directed the NYU Center for Cybersecurity (CCS). Karri founded the Embedded Security Challenge (ESC), the first hardware security challenge worldwide. Hammond Pearce, Senior Lecturer at UNSW Sydney's School of Computer Science and Engineering and a former NYU Tandon research assistant professor in ECE and CCS, is the other co-author.

J. Blocklove, H. Pearce and R. Karri, "Lowering the Bar: How Large Language Models Can be Used as a Copilot by Hardware Hackers" in IEEE Security & Privacy, vol. , no. 01, pp. 2-12, PrePrints 5555, doi: 10.1109/MSEC.2025.3600140.

New NYU Tandon-led project will accelerate privacy-preserving computing

Today's most advanced cryptographic computing technologies — which enable privacy-preserving computation — are trapped in research labs by one critical barrier: they're thousands of times too slow for everyday use.

NYU Tandon, helming a research team that includes Stanford University and the City University of New York, just received funding from a $3.8 million grant from the National Science Foundation to build the missing infrastructure that could make those technologies practical, via a new design platform and library that allows researchers to develop and share chip designs.

The problem is stark. Running a simple AI model on encrypted data takes over 10 minutes instead of milliseconds, a four-order-of-magnitude performance gap that impedes many real-world use cases.
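The size of that gap can be checked with a quick calculation. The sketch below takes "over 10 minutes" as exactly 10 minutes and assumes a 60 ms plaintext baseline. That baseline is an illustrative figure, not one given in the announcement, which says only "milliseconds."

```python
import math

ENCRYPTED_MS = 10 * 60 * 1000  # "over 10 minutes", taken here as exactly 10 minutes
PLAINTEXT_MS = 60              # assumed baseline; the source says only "milliseconds"

# Ratio of encrypted to plaintext runtime, and its base-10 logarithm.
slowdown = ENCRYPTED_MS / PLAINTEXT_MS
orders_of_magnitude = math.log10(slowdown)

print(f"slowdown: {slowdown:,.0f}x")
print(f"orders of magnitude: {orders_of_magnitude:.1f}")
```

Under these assumptions the ratio is 10,000, that is, four orders of magnitude.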

Current approaches to speeding up cryptographic computing have hit a wall, however. "The normal tricks that we have to get over this performance bottleneck won’t scale much further, so we have to do something different," said Brandon Reagen, the project's lead investigator. Reagen is an NYU Tandon assistant professor with appointments in the Electrical and Computer Engineering (ECE) Department and in the Computer Science and Engineering (CSE) Department. He is also on the faculty of NYU's Center for Advanced Technology in Telecommunications (CATT) and the NYU Center for Cybersecurity (CCS).

The team's solution is a new platform called "Cryptolets."

Currently, researchers working on privacy chips must build everything from scratch. Cryptolets will provide three things: a library where researchers can share and access pre-built, optimized hardware designs for privacy computing; tools that allow multiple smaller chips to work together as one powerful system; and automated testing to ensure contributed designs work correctly and securely.

This chiplet approach — using multiple small, specialized chips working together — is a departure from traditional single, monolithic chip optimization, potentially breaking through performance barriers.

For Reagen, this project represents the next stage of his research approach. "For years, most of our academic research has been working in simulation and modeling," he said. "I want to pivot to building. I’d like to see real-world encrypted data run through machine learning workloads in the cloud without the cloud ever seeing your data. You could, for example, prove you are who you say you are without actually revealing your driver's license, social security number, or birth certificate."

What sets this project apart is its community-building approach. The researchers are creating competitions where students and other researchers use Cryptolets to compete in designing the best chip components. The project plans to organize annual challenges at major cybersecurity and computer architecture conferences. The first workshop, which focuses on hardware for zero-knowledge proofs, will take place in October 2025 at MICRO 2025.

"We want to build a community, too, so everyone's not working in their own silos," Reagen said. The project will support fabrication opportunities for competition winners, with plans to assist tapeouts of smaller designs initially and larger full-system tapeouts in the later phases, helping participants who lack chip fabrication resources at their home institutions.

"With Cryptolets, we are not just funding a new hardware platform—we are enabling a community-wide leap in how privacy-preserving computation can move from theory to practice,” said Deep Medhi, program director in the Computer & Information Sciences & Engineering Directorate at the U.S. National Science Foundation. “By lowering barriers for researchers and students to design, share and test cryptographic chips, this project aligns with NSF’s mission to advance secure, trustworthy and accessible technologies that benefit society at large."

If the project succeeds, it could enable a future where strong digital privacy isn't just theoretically possible, but practically deployable at scale, from protecting personal health data to securing financial transactions to enabling private AI assistants that never see people's actual queries.

Along with Reagen, the team is led by NYU Tandon co-investigators Ramesh Karri, ECE Professor and Department Chair, and faculty member of CATT and CCS; Siddharth Garg, Professor in ECE and faculty member of NYU WIRELESS and CCS; Austin Rovinski, Assistant Professor in ECE; The City College of New York’s Rosario Gennaro and Tushar Jois; and Stanford's Thierry Tambe and Caroline Trippel, with Warren Savage serving as project manager. The team also includes industry advisors from companies working on cryptographic technologies.

Large language models can execute complete ransomware attacks autonomously, NYU Tandon research shows

Criminals can use artificial intelligence, specifically large language models, to autonomously carry out ransomware attacks that steal personal files and demand payment, handling every step from breaking into computer systems to writing threatening messages to victims, according to new research from NYU Tandon School of Engineering.

The study serves as an early warning to help defenders prepare countermeasures before bad actors adopt these AI-powered techniques.

A simulated malicious AI system developed by the Tandon team carried out all four phases of a ransomware attack (mapping systems, identifying valuable files, stealing or encrypting data, and generating ransom notes) across personal computers, enterprise servers, and industrial control systems.

This system, which the researchers call "Ransomware 3.0," became widely known recently as "PromptLock," a name chosen by cybersecurity firm ESET when experts there discovered it on VirusTotal, an online platform where security researchers test whether files can be detected as malicious.

The Tandon researchers had uploaded their prototype to VirusTotal during testing procedures, and the files there appeared as functional ransomware code with no indication of their academic origin. ESET initially believed it had found the first AI-powered ransomware being developed by malicious actors. While it is the first to be AI-powered, the ransomware prototype is a proof-of-concept that is non-functional outside of the contained lab environment.

"The cybersecurity community's immediate concern when our prototype was discovered shows how seriously we must take AI-enabled threats," said Md Raz, a doctoral candidate in the Electrical and Computer Engineering Department who is the lead author on the Ransomware 3.0 paper the team published publicly. "While the initial alarm was based on an erroneous belief that our prototype was in-the-wild ransomware and not laboratory proof-of-concept research, it demonstrates that these systems are sophisticated enough to deceive security experts into thinking they're real malware from attack groups."

The research methodology involved embedding written instructions within computer programs rather than traditional pre-written attack code. When activated, the malware contacts AI language models to generate Lua scripts customized for each victim's specific computer setup, using open-source models that lack the safety restrictions of commercial AI services.

Each execution produces unique attack code despite identical starting prompts, creating a major challenge for cybersecurity defenses. Traditional security software relies on detecting known malware signatures or behavioral patterns, but AI-generated attacks produce variable code and execution behaviors that could evade these detection systems entirely.
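To see why exact-match signatures struggle with variable code, consider two harmless scripts that behave identically but differ textually. This is a minimal sketch that uses a SHA-256 hash as the "signature". The two snippets and the blocklist are hypothetical, and real security products combine hashes with heuristics and behavioral analysis.

```python
import hashlib

# Two harmless scripts (hypothetical) that both print the sum 0+1+2+3+4.
variant_a = "total = 0\nfor n in range(5):\n    total += n\nprint(total)\n"
variant_b = "print(sum(range(5)))\n"


def sha256_hex(text: str) -> str:
    """Return the SHA-256 digest of a script's text as a hex string."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


# A blocklist built only from the first sample that was observed.
known_signatures = {sha256_hex(variant_a)}


def matches_known_signature(text: str) -> bool:
    """Exact-match check: True only for byte-identical scripts."""
    return sha256_hex(text) in known_signatures


print(matches_known_signature(variant_a))
print(matches_known_signature(variant_b))
```

The first check matches and the second does not, even though both scripts do the same thing. That is the gap that freshly generated code exploits.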

Testing across three representative environments showed both AI models were highly effective at system mapping and correctly flagged 63-96% of sensitive files depending on environment type. The AI-generated scripts proved cross-platform compatible, operating on (desktop/server) Windows, Linux, and (embedded) Raspberry Pi systems without modification.

The economic implications reveal how AI could reshape ransomware operations. Traditional campaigns require skilled development teams, custom malware creation, and substantial infrastructure investments. The prototype consumed approximately 23,000 AI tokens per complete attack execution, equivalent to roughly $0.70 using commercial API services running flagship models. Open-source AI models eliminate these costs entirely.
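The two figures above imply a blended price per token, which the short calculation below works out. The constants are the approximate numbers reported here. Commercial APIs usually bill input and output tokens at different rates, so the single blended rate is a simplifying assumption.

```python
TOKENS_PER_EXECUTION = 23_000  # approximate figure reported above
COST_PER_EXECUTION_USD = 0.70  # approximate figure reported above

# Blended price implied by the two figures, expressed per million tokens.
usd_per_million_tokens = COST_PER_EXECUTION_USD / TOKENS_PER_EXECUTION * 1_000_000

print(f"implied blended rate: ${usd_per_million_tokens:.2f} per million tokens")
```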

This cost reduction could enable less sophisticated actors to conduct advanced campaigns previously requiring specialized technical skills. The system's ability to generate personalized extortion messages referencing discovered files could increase psychological pressure on victims compared to generic ransom demands.

The researchers conducted their work under institutional ethical guidelines within controlled laboratory environments. The published paper provides critical technical details that can help the broader cybersecurity community understand this emerging threat model and develop stronger defenses.

The researchers recommend monitoring sensitive file access patterns, controlling outbound AI service connections, and developing detection capabilities specifically designed for AI-generated attack behaviors.
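As one illustration of the first recommendation, the sketch below flags any process that touches many distinct sensitive files within a short time window. It is not taken from the paper: the `AccessEvent` record, the suffix list, and both thresholds are hypothetical, and a real deployment would read events from an operating system audit facility.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessEvent:
    timestamp: float  # seconds since some reference point
    process: str      # name of the process that opened the file
    path: str         # file that was opened


# Hypothetical policy values chosen only for this example.
SENSITIVE_SUFFIXES = (".pdf", ".docx", ".xlsx", ".key", ".pem")
WINDOW_SECONDS = 10.0
MAX_DISTINCT_FILES = 3


def find_bursts(events):
    """Return processes that open more than MAX_DISTINCT_FILES distinct
    sensitive files within any WINDOW_SECONDS span."""
    recent = defaultdict(deque)  # process -> deque of (timestamp, path)
    flagged = set()
    for event in sorted(events, key=lambda e: e.timestamp):
        if not event.path.lower().endswith(SENSITIVE_SUFFIXES):
            continue  # ignore files outside the sensitive set
        window = recent[event.process]
        window.append((event.timestamp, event.path))
        # Drop accesses that have fallen out of the sliding window.
        while window and event.timestamp - window[0][0] > WINDOW_SECONDS:
            window.popleft()
        if len({path for _, path in window}) > MAX_DISTINCT_FILES:
            flagged.add(event.process)
    return flagged


events = [
    AccessEvent(0.0, "backup", "/home/a/report.pdf"),
    AccessEvent(60.0, "backup", "/home/a/budget.xlsx"),
    AccessEvent(100.0, "unknown.bin", "/home/a/report.pdf"),
    AccessEvent(100.5, "unknown.bin", "/home/a/budget.xlsx"),
    AccessEvent(101.0, "unknown.bin", "/home/a/id.key"),
    AccessEvent(101.5, "unknown.bin", "/home/a/cert.pem"),
    AccessEvent(102.0, "unknown.bin", "/home/a/notes.txt"),
]
print(find_bursts(events))
```

A count-based rule like this looks at what a process does rather than what its code looks like, so it does not depend on the generated script being the same from run to run.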

The paper's senior authors are Ramesh Karri, ECE Professor and Department Chair and a faculty member of the Center for Advanced Technology in Telecommunications (CATT) and the NYU Center for Cybersecurity, and Farshad Khorrami, ECE Professor and CATT faculty member. In addition to lead author Raz, the other authors are ECE Ph.D. candidate Meet Udeshi, ECE Postdoctoral Scholar Venkata Sai Charan Putrevu, and ECE Senior Research Scientist Prashanth Krishnamurthy.

The work was supported by grants from the Department of Energy, the National Science Foundation, and the State of New York via Empire State Development's Division of Science, Technology and Innovation.

Raz, Md, et al. “Ransomware 3.0: Self-Composing and LLM-Orchestrated.” arXiv.Org, 28 Aug. 2025, doi.org/10.48550/arXiv.2508.20444.

NYU Tandon researchers develop AI agent that solves cybersecurity challenges autonomously

Artificial intelligence agents — AI systems that can work independently toward specific goals without constant human guidance — have demonstrated strong capabilities in software development and web navigation. Their effectiveness in cybersecurity has remained limited, however.

That may soon change, thanks to a research team from NYU Tandon School of Engineering, NYU Abu Dhabi and other universities that developed an AI agent capable of autonomously solving complex cybersecurity challenges.

The system, called EnIGMA, was presented this month at the International Conference on Machine Learning (ICML) 2025 in Vancouver, Canada.

"EnIGMA is about using Large Language Model agents for cybersecurity applications," said Meet Udeshi, an NYU Tandon Ph.D. student and co-author of the research. Udeshi is advised by Ramesh Karri, Chair of NYU Tandon's Electrical and Computer Engineering Department (ECE) and a faculty member of the NYU Center for Cybersecurity and NYU Center for Advanced Technology in Telecommunications (CATT), and by Farshad Khorrami, ECE professor and CATT faculty member. Both Karri and Khorrami are co-authors on the paper, with Karri serving as a senior author.

To build EnIGMA, the researchers started with an existing framework called SWE-agent, which was originally designed for software engineering tasks. However, cybersecurity challenges required specialized tools that didn't exist in previous AI systems. "We have to restructure those interfaces to feed it into an LLM properly. So we've done that for a couple of cybersecurity tools," Udeshi explained.

The key innovation was developing what they call "Interactive Agent Tools" that convert visual cybersecurity programs into text-based formats the AI can understand. Traditional cybersecurity tools like debuggers and network analyzers use graphical interfaces with clickable buttons, visual displays, and interactive elements that humans can see and manipulate.
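The general idea of exposing an interactive program as text can be sketched as a wrapper that keeps the program running and exchanges plain-text commands and observations with it. This is not EnIGMA's implementation: `TOY_TOOL`, `InteractiveSession`, and the `(toy)` prompt marker are hypothetical stand-ins, and the "tool" is a tiny line-oriented script rather than a real debugger.

```python
import subprocess
import sys

# Toy stand-in for an interactive tool (hypothetical): it reads one command
# per line, prints a reply, then prints a prompt marker.
TOY_TOOL = r'''
import sys
position = 0
for line in sys.stdin:
    command = line.strip()
    if command == "step":
        position += 1
        print(f"stepped to instruction {position}")
    elif command == "where":
        print(f"at instruction {position}")
    elif command == "quit":
        break
    else:
        print(f"unknown command: {command}")
    print("(toy)", flush=True)
'''


class InteractiveSession:
    """Keeps one tool process alive and exchanges plain text with it."""

    PROMPT = "(toy)"

    def __init__(self) -> None:
        self.process = subprocess.Popen(
            [sys.executable, "-u", "-c", TOY_TOOL],
            stdin=subprocess.PIPE,
            stdout=subprocess.PIPE,
            text=True,
        )

    def send(self, command: str) -> str:
        """Send one command; return the text printed before the next prompt."""
        self.process.stdin.write(command + "\n")
        self.process.stdin.flush()
        output = []
        while True:
            line = self.process.stdout.readline()
            if line == "" or line.rstrip("\n") == self.PROMPT:
                break  # end of stream, or the tool is waiting for input again
            output.append(line.rstrip("\n"))
        return "\n".join(output)

    def close(self) -> None:
        """Ask the tool to exit and release the pipes."""
        self.process.stdin.write("quit\n")
        self.process.stdin.flush()
        self.process.wait()
        self.process.stdin.close()
        self.process.stdout.close()


session = InteractiveSession()
print(session.send("step"))
print(session.send("where"))
session.close()
```

Because the session persists between calls, state such as the current position carries over from one command to the next, which a one-shot command invocation would lose.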

"Large language models process text only, but these interactive tools with graphical user interfaces work differently, so we had to restructure those interfaces to work with LLMs," Udeshi said.

The team built their own dataset by collecting and structuring Capture The Flag (CTF) challenges specifically for large language models. These gamified cybersecurity competitions simulate real-world vulnerabilities and have traditionally been used to train human cybersecurity professionals.

"CTFs are like a gamified version of cybersecurity used in academic competitions. They're not true cybersecurity problems that you would face in the real world, but they are very good simulations," Udeshi noted.

Paper co-author Minghao Shao, an NYU Tandon Ph.D. student and Global Ph.D. Fellow at NYU Abu Dhabi who is advised by Karri and Muhammad Shafique, Professor of Computer Engineering at NYU Abu Dhabi and ECE Global Network Professor at NYU Tandon, described the technical architecture: "We built our own CTF benchmark dataset and created a specialized data loading system to feed these challenges into the model." Shafique is also a co-author on the paper.

The framework includes specialized prompts that provide the model with instructions tailored to cybersecurity scenarios.

EnIGMA demonstrated superior performance across multiple benchmarks. The system was tested on 390 CTF challenges across four different benchmarks, achieving state-of-the-art results and solving more than three times as many challenges as previous AI agents.

During the research conducted approximately 12 months ago, "Claude 3.5 Sonnet from Anthropic was the best model, and GPT-4o was second at that time," according to Udeshi.

The research also identified a previously unknown phenomenon called "soliloquizing," where the AI model generates hallucinated observations without actually interacting with the environment, a discovery that could have important consequences for AI safety and reliability.

Beyond this technical finding, the potential applications extend outside of academic competitions. "If you think of an autonomous LLM agent that can solve these CTFs, that agent has substantial cybersecurity skills that you can use for other cybersecurity tasks as well," Udeshi explained. The agent could potentially be applied to real-world vulnerability assessment, with the ability to "try hundreds of different approaches" autonomously.

The researchers acknowledge the dual-use nature of their technology. While EnIGMA could help security professionals identify and patch vulnerabilities more efficiently, it could also potentially be misused for malicious purposes. The team has notified representatives from major AI companies including Meta, Anthropic, and OpenAI about their results.

In addition to Karri, Khorrami, Shafique, Udeshi and Shao, the paper's authors are Talor Abramovich (Tel Aviv University), Kilian Lieret (Princeton University), Haoran Xi (NYU Tandon), Kimberly Milner (NYU Tandon), Sofija Jancheska (NYU Tandon), John Yang (Stanford University), Carlos E. Jimenez (Princeton University), Prashanth Krishnamurthy (NYU Tandon), Brendan Dolan-Gavitt (NYU Tandon), Karthik Narasimhan (Princeton University), and Ofir Press (Princeton University).

Funding for the research came from Open Philanthropy, Oracle, the National Science Foundation, the Army Research Office, the Department of Energy, and NYU Abu Dhabi Center for Cybersecurity and Center for Artificial Intelligence and Robotics.