Research News

NYU Tandon researchers develop technology that may allow stroke patients to undergo rehab at home

For survivors of strokes, which afflict nearly 800,000 Americans each year, regaining fine motor skills like writing and using utensils is critical for recovering independence and quality of life. But getting intensive, frequent rehabilitation therapy can be challenging and expensive.

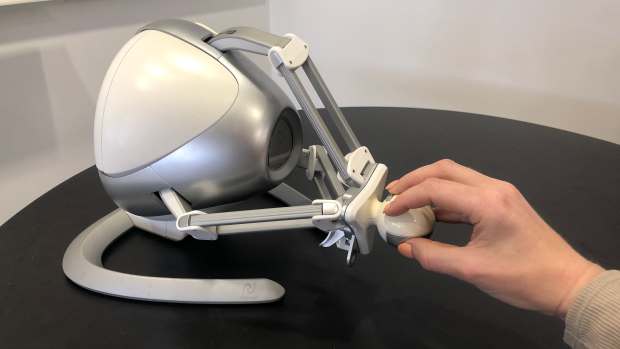

Now, researchers at NYU Tandon School of Engineering are developing a new technology that could allow stroke patients to undergo rehabilitation exercises at home by tracking their wrist movements through a simple setup: a smartphone strapped to the forearm and a low-cost gaming controller called the Novint Falcon.

The Novint Falcon, a desktop robot typically used for video games, can guide users through specific arm motions and track the trajectory of its controller. But it cannot directly measure the angle of the user's wrist, which is essential data for therapists providing remote rehabilitation.

In a paper presented at SPIE Smart Structures + Nondestructive Evaluation 2024, the researchers proposed using the Falcon in tandem with a smartphone's built-in motion sensors to precisely monitor wrist angles during rehab exercises.

"Patients would strap their phone to their forearm and manipulate this robot," said Maurizio Porfiri, NYU Tandon Institute Professor and director of its Center for Urban Science + Progress (CUSP), who is the paper’s senior author. "Data from the phone's inertial sensors can then be combined with the robot's measurements through machine learning to infer the patient's wrist angle."

The researchers collected data from a healthy subject performing tasks with the Falcon while wearing motion sensors on the forearm and hand to capture the true wrist angle. They then trained an algorithm to predict the wrist angles based on the sensor data and Falcon controller movements.
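The training step can be pictured with a minimal sketch. It assumes a plain linear least-squares model in place of the paper's machine learning method, which this article does not specify. The feature names (`forearm_pitch`, `handle_height`), the coefficients, and the synthetic data are hypothetical stand-ins, not values from the study.

```python
import random

def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

def fit_least_squares(features, targets):
    """Fit targets ~ w . [features, 1] through the normal equations."""
    rows = [f + [1.0] for f in features]  # append a bias term
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(k)]
    return solve_linear_system(xtx, xty)

def predict(weights, feature):
    """Predict a wrist angle from one feature vector."""
    return sum(w * x for w, x in zip(weights, feature + [1.0]))

# Synthetic stand-in data (hypothetical, NOT from the study).
rng = random.Random(0)
features, targets = [], []
for _ in range(200):
    forearm_pitch = rng.uniform(-30, 30)  # degrees, as if from the phone's sensors
    handle_height = rng.uniform(-5, 5)    # centimeters, as if from the Falcon
    # "True" wrist angle, as if from reference sensors on forearm and hand.
    wrist_angle = 0.5 * forearm_pitch + 4.0 * handle_height + 2.0
    features.append([forearm_pitch, handle_height])
    targets.append(wrist_angle)

weights = fit_least_squares(features, targets)
```

Once fitted, `predict(weights, [10.0, 1.0])` estimates the wrist angle from the phone and controller readings alone, which is the role the reference sensors on the hand play only during training.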

The resulting algorithm could predict wrist angles with over 90% accuracy, a promising initial step toward enabling remote therapy with real-time feedback in the absence of an in-person therapist.

"This technology could allow patients to undergo rehabilitation exercises at home while providing detailed data to therapists remotely assessing their progress," said Roni Barak Ventura, the paper’s lead author, who was an NYU Tandon postdoctoral fellow at the time of the study. "It's a low-cost, user-friendly approach to increasing access to crucial post-stroke care."

The researchers plan to further refine the algorithm using data from more subjects. Ultimately, they hope the system could help stroke survivors stick to intensive rehab regimens from the comfort of their homes.

"The ability to do rehabilitation exercises at home with automatic tracking could dramatically improve quality of life for stroke patients," said Barak Ventura. "This portable, affordable technology has great potential for making a difficult recovery process much more accessible."

This study adds to NYU Tandon’s body of work that aims to improve stroke recovery. In 2022, researchers from NYU Tandon began collaborating with the FDA to design a regulatory science tool based on biomarkers to objectively assess the efficacy of rehabilitation devices for post-stroke motor recovery and guide their optimal usage. A study from earlier this year unveiled advances in technology that uses implanted brain electrodes to recreate the speaking voice of someone who has lost speech ability, which can be an outcome of stroke.

In addition to Porfiri and Barak Ventura, the study’s authors are Angelo Catalano, who earned an MS from NYU Tandon in 2024, and Rayan Succar, an NYU Tandon PhD candidate. The study was funded by grants from the National Science Foundation.

Roni Barak Ventura, Angelo Catalano, Rayan Succar, and Maurizio Porfiri "Automating the assessment of wrist motion in telerehabilitation with haptic devices", Proc. SPIE 12948, Soft Mechatronics and Wearable Systems, 129480F (9 May 2024); https://doi.org/10.1117/12.3010545

Cutting-edge cancer treatment research promises more effective interventions

Cancer is one of the most devastating diagnoses that a person can receive, and it is a society-wide problem. According to the National Cancer Institute, an estimated 2,001,140 new cases of cancer will be diagnosed in the United States and 611,720 people will die from the disease this year. While cancer treatments have seen large improvements over the last decades, researchers are still laser-focused on developing strategies to defeat cancers, especially those that have been resistant to traditional interventions.

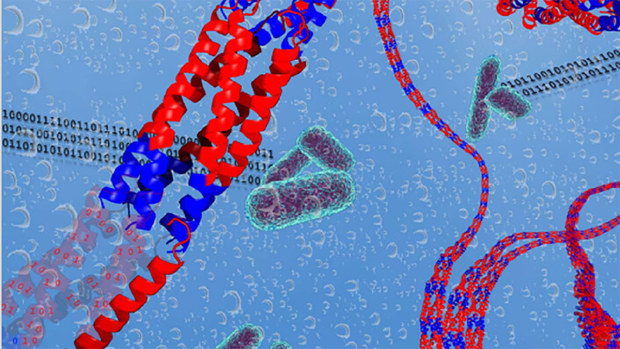

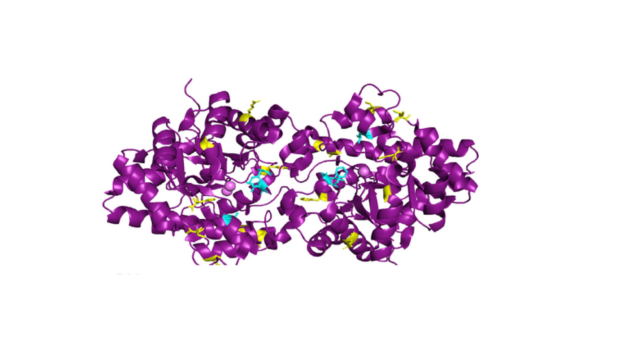

New research from Jin Kim Montclare, Professor of Chemical and Biomolecular Engineering, may lend hope to cancer patients in the future. Montclare’s lab, which blends chemistry and genetic engineering to design customized artificial proteins for targeting human disorders, drug delivery and tissue regeneration, has recently published two papers that take aim at cancers that have been difficult to treat.

MAPping a course to recovery

In the ongoing quest to develop more effective cancer therapies, these researchers have unveiled a promising approach utilizing protein-based targeting agents. A recent study published in Biomaterials Science introduces a novel strategy that harnesses the power of multivalent assembled proteins (MAPs) to target hypoxic tumors with unprecedented precision and efficacy.

Traditional cancer treatments often rely on passive or active targeting mechanisms to deliver therapeutic agents to tumor sites. However, these approaches have limitations, particularly in overcoming physiological and pathological barriers. To address these challenges, the research team focused on exploiting the unique features of the tumor microenvironment (TME), specifically its hypoxic conditions.

Hypoxia, a characteristic feature of many solid tumors, is known to play a crucial role in tumor progression and resistance to therapy. By targeting hypoxia-inducible factor 1 alpha (HIF1α), a key regulator of cellular response to low oxygen levels, the researchers aimed to develop a more effective strategy for tumor-specific drug delivery.

Previous efforts to target HIF1α have been hindered by the instability and limited binding abilities of the peptide-based molecules used. To overcome these obstacles, the team turned to MAPs, which offer the advantages of high stability and multivalency.

Drawing inspiration from their successful development of MAPs targeting COVID-19, the researchers engineered HIF1α-MAPs (H-MAPs) by grafting critical residues of HIF1α onto the MAP scaffold. This innovative design resulted in H-MAPs with picomolar binding affinities, significantly surpassing previous approaches. In vivo studies showed promising results, with H-MAPs effectively homing in on hypoxic tumors.

“This is a very promising result, using a material that is degradable within the body and likely will limit side effects to treatments,” said Montclare. “We’re taking advantage of the building blocks of our own bodies and using those protein compositions to treat the body — and that’s where we’re making big progress.”

Montclare’s findings suggest that H-MAPs hold great potential as targeted therapeutic agents for cancer treatment. With further refinement and exploration, H-MAPs could offer a new avenue for precision medicine, providing clinicians with a powerful tool to combat cancer while minimizing side effects and maximizing therapeutic efficacy.

The development of H-MAPs represents a significant advancement in the field of cancer therapy, highlighting the importance of innovative approaches that leverage the intricacies of the tumor microenvironment. As researchers continue to unravel the complexities of cancer biology, protein-based targeting agents like H-MAPs offer hope for improved outcomes and better quality of life for cancer patients.

Targeting stubborn breast cancer subtypes

Not all tumors are hypoxic, however, and some forms of cancers are much harder to target than others.

Triple-negative breast cancer (TNBC) poses a significant challenge in the realm of oncology due to its resistance to conventional targeted therapies. Unlike other breast cancer subtypes, TNBC lacks the receptor biomarkers — such as estrogen receptors and human epidermal growth factor receptor 2 — making it unresponsive to standard treatments. Consequently, chemotherapy remains the primary option for TNBC patients. However, the efficacy of chemotherapy is often hindered by the development of drug resistance, necessitating innovative approaches to enhance treatment outcomes.

In recent years, there has been a surge of interest in improving the efficacy of chemotherapy for TNBC through enhanced drug delivery systems. One promising avenue involves the use of biocompatible materials, including lipids, polymers, and proteins, as carriers to encapsulate chemotherapeutic agents. Among these materials, protein-based hydrogels have emerged as a particularly attractive option due to their biocompatibility, tunable properties, and ability to achieve controlled drug release.

A recent breakthrough in the field comes from the development of a novel protein-based hydrogel, known as Q8, which demonstrates remarkable improvements over previous materials.

By fine-tuning the molecular characteristics of the hydrogel using a machine learning algorithm, the researchers were able to engineer Q8 to exhibit a two-fold increase in gelation rate and mechanical strength. These enhancements pave the way for Q8 to serve as a promising platform for sustained chemotherapeutic delivery.

In a groundbreaking study, the researchers investigated the therapeutic potential of Q8 for the treatment of TNBC in vivo using a mouse model. Remarkably, the delivery of doxorubicin encapsulated in Q8 resulted in significantly improved tumor suppression compared to conventional doxorubicin treatment alone. This achievement marks a significant milestone in the development of non-invasive and targeted therapies for TNBC, offering new hope for patients facing this aggressive form of breast cancer.

The success of Q8 underscores the immense potential of protein-based hydrogels as versatile platforms for drug delivery in cancer therapy. By leveraging the unique properties of these materials, researchers can overcome longstanding challenges associated with chemotherapy, including poor drug bioavailability and resistance. Moving forward, further advancements in protein engineering and hydrogel design hold the promise of revolutionizing cancer treatment paradigms, offering renewed optimism for patients battling TNBC and other challenging malignancies.

NYU Tandon researchers help bring the fossil record into the digital age with low-cost 3D scanning

Inside Museu de Paleontologia Plácido Cidade Nuvens (MPPCN), a museum in rural northeastern Brazil, sits an unassuming piece of equipment that promises to digitize one of the world's most prized collections of ancient fossils.

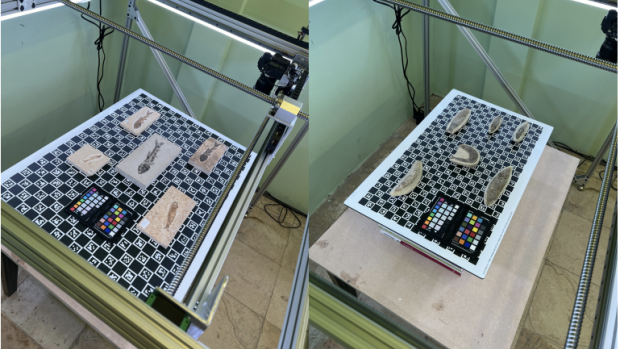

PaleoScan, designed by NYU Tandon School of Engineering researchers as part of a collaboration between Brazilian paleontologists and American computer scientists, is a first-of-its-kind 3D fossil scanner that allows museum staff to rapidly and easily scan and digitally archive their vast fossil collection for global accessibility. The technology can democratize paleontology and archaeology in the process.

The research team presented a paper about PaleoScan’s development at the ACM CHI Conference on Human Factors in Computing Systems this month in Honolulu, Hawaii.

"PaleoScan was created specifically to enable museums with limited resources to digitize vital fossil collections," said Cláudio Silva, NYU Tandon Institute Professor of Computer Science and Engineering and Co-Director of the Visualization and Data Analytics Research Center (VIDA), who led PaleoScan’s development. "This low-cost and easy-to-assemble technology delivers results that rival expensive CT scanners while working at a much faster pace. Affordability and high-volume output were paramount goals. We achieved them."

MPPCN’s 11,000 diverse and well-preserved fossils from Brazil’s Araripe Basin, one of the planet's most fossil-rich regions, hold immense scientific value.

Digitizing the museum's world-class collection has proved enormously challenging, however. Because the museum is situated in the remote city of Santana do Cariri, transporting the fragile fossils over two hours to the nearest urban center risked damaging the specimens. Yet the museum’s lack of technologically trained personnel, reliable internet, computing resources and funds for expensive scanning technology made on-site digitizing unviable.

"PaleoScan emerges as a powerful system for fossil investigation," said Naiara Cipriano Oliveira, visiting researcher at the MPPCN at Universidade Regional do Cariri in Brazil, who worked with Silva on installing the device and oversees the digitization of the collection. "Thousands of irreplaceable fossils will soon be readily available to scientists anywhere in the world, ensuring that evidence of ancient life is studied and preserved like never before."

PaleoScan is a fully self-contained system with innovative hardware and software components. At its core is a compact, automated camera rig that can slide along two axes above fossils placed on a calibrated surface. An intuitive touchscreen interface allows an operator to simply select which fossils to scan and the desired resolution.

The scanner's integrated camera (a mirrorless DSLR) then automatically captures a series of overlapping photos from different angles while LED lights ensure consistent illumination. This raw image data, stored on an SD card, is periodically uploaded to a cloud-based software pipeline developed at NYU Tandon.

The "PaleoDP" software processes the images using photogrammetry techniques to discern precise color, texture and geometry for each fossil down to sub-millimeter accuracy. Paleontologists can then easily annotate the 3D fossil data with metadata before it is added to an online database for other researchers to study remotely.

The PaleoScan device itself was assembled for less than $3,500, making it a low-cost solution for museums and fossil repositories to rapidly digitize their collections and make their specimens available for anyone to study.

In a pilot deployment last year, PaleoScan digitized more than 200 fossils at MPPCN in just weeks. At that rate, the entire specimen collection could be digitized and backed up in about a year.

High-resolution PaleoScan images revealed remnants of the ganoid scales of an exquisitely preserved fossil fish species, offering insights about its evolutionary biology and environment.

“The incredible preservation and abundance of these fossils from Brazil offer a rare opportunity to study the ecology of ancient environments. Now with PaleoScan, a scanner can come to the fossils rather than having fragile specimens sent to expensive scanners, allowing high-quality scans to be easily shared with the global scientific community,” said Akinobu Watanabe, Ph.D., Associate Professor of Anatomy at New York Institute of Technology and Research Associate at the American Museum of Natural History, who assisted NYU researchers in developing and deploying the device technology.

Looking ahead, scientists envision fleets of PaleoScan devices deploying to digitize neglected fossil collections globally, especially at underfunded museums and remote field sites lacking modern equipment. The portable, low-cost scanner could democratize access to the world’s archived fossil record for educators and researchers.

PaleoScan builds on Silva’s track record of developing new technologies for museums. He led an international team that worked with The Frick Collection to create ARIES - ARt Image Exploration Space, an interactive image manipulation system that enables the exploration and organization of fine digital art. The PaleoScan project has been partially supported through funding from the National Science Foundation in the U.S. and Fundação Cearense de Apoio ao Desenvolvimento (Funcap) in Brazil.

CHI '24: Proceedings of the CHI Conference on Human Factors in Computing Systems; May 2024; Article No.: 708; Pages 1–16 https://doi.org/10.1145/3613904.3642020

Deep-sea sponge's “zero-energy” flow control could inspire new energy efficient designs

The Venus flower basket sponge, with its delicate glass-like lattice outer skeleton, has long intrigued researchers seeking to explain how this fragile-seeming creature’s body can withstand the harsh conditions of the deep sea where it lives.

Now, new research reveals yet another engineering feat of this ancient animal’s structure: its ability to filter feed using only the faint ambient currents of the ocean depths, no pumping required.

This discovery of natural “zero energy” flow control by an international research team co-led by University of Rome Tor Vergata and NYU Tandon School of Engineering could help engineers design more efficient chemical reactors, air purification systems, heat exchangers, hydraulic systems, and aerodynamic surfaces.

In a study published in Physical Review Letters, the team found through extremely high-resolution computer simulations how the skeletal structure of the Venus flower basket sponge (Euplectella aspergillum) diverts very slow deep sea currents to flow upwards into its central body cavity, so it can feed on plankton and other marine detritus it filters out of the water.

The sponge pulls this off via its spiral, ridged outer surface that functions like a spiral staircase. This allows it to passively draw water upwards through its porous, lattice-like frame, all without the energy demands of pumping.

"Our research settles a debate that has emerged in recent years: the Venus flower basket sponge may be able to draw in nutrients passively, without any active pumping mechanism," said Maurizio Porfiri, NYU Tandon Institute Professor and director of its Center for Urban Science + Progress (CUSP), who co-led the study and co-supervised the research. "It's an incredible adaptation allowing this filter feeder to thrive in currents normally unsuitable for suspension feeding."

At higher flow speeds, the lattice structure helps reduce drag on the organism. But it is in the near-stillness of the deep ocean floors that this natural ventilation system is most remarkable, and demonstrates just how well the sponge accommodates its harsh environment. The study found that the sponge’s ability to passively draw in food works only at the very slow current speeds – just centimeters per second – of its habitat.

"From an engineering perspective, the skeletal system of the sponge shows remarkable adaptations to its environment, not only from the structural point of view, but also for what concerns its fluid dynamic performance," said Giacomo Falcucci of Tor Vergata University of Rome and Harvard University, the paper’s first author. Along with Porfiri, Falcucci co-led the study, co-supervised the research and designed the computer simulations. "The sponge has arrived at an elegant solution for maximizing nutrient supply while operating entirely through passive mechanisms."

Researchers used the powerful Leonardo supercomputer at CINECA, a supercomputing center in Italy, to create a highly realistic 3D replica of the sponge, containing around 100 billion individual points that recreate the sponge's complex helical ridge structure. This “digital twin” allows experimentation that is impossible on live sponges, which cannot survive outside their deep-sea environment.

The team performed highly detailed simulations of water flow around and inside the computer model of the skeleton of the Venus flower basket sponge. With Leonardo's massive computing power, allowing quadrillions of calculations per second, they could simulate a wide range of water flow speeds and conditions.

The researchers say the biomimetic engineering insights they uncovered could help guide the design of more efficient reactors by optimizing flow patterns inside while minimizing drag outside. Similar ridged, porous surfaces could enhance air filtration and ventilation systems in skyscrapers and other structures. The asymmetric, helical ridges may even inspire low-drag hulls or fuselages that stay streamlined while promoting interior air flows.

The study builds upon the team’s prior Euplectella aspergillum research published in Nature in 2021, which presented a first-ever simulation of the deep-sea sponge and of how it responds to and influences the flow of nearby water.

In addition to Porfiri and Falcucci, the current study’s authors are Giorgio Amati of CINECA; Gino Bella of Niccolò Cusano University; Andrea Luigi Facci of University of Tuscia; Vesselin K. Krastev of University of Rome Tor Vergata; Giovanni Polverino of University of Tuscia, Monash University, and University of Western Australia; and Sauro Succi of the Italian Institute of Technology.

A grant from the National Science Foundation supported the research. Other funding came from CINECA, Next Generation EU, European Research Council, Monash University and University of Tuscia.

New research develops algorithm to track cognitive arousal for optimizing remote work

In the ever-evolving landscape of workplace dynamics, the intricate dance between stress and productivity takes center stage. A recent study, spanning various disciplines and delving into the depths of neuroscience, sheds light on this complex relationship, challenging conventional wisdom and opening new pathways for understanding how to improve productivity.

At the heart of this exploration lies the Yerkes-Dodson law, a theory proposing an optimal level of stress conducive to peak productivity. Yet, as researchers unveil, the universality of this law remains under scrutiny, prompting deeper dives into the nuances of stress-response across different contexts and populations.

Drawing from neuroscience, researchers from NYU Tandon led by Rose Faghih, Associate Professor of Biomedical Engineering, have published a study illuminating the role of the autonomic nervous system, which is directly influenced by key brain regions such as the amygdala, prefrontal cortex, and hippocampus, in shaping our responses to stress and influencing cognitive functions. These insights not only deepen our comprehension of stress but also offer pathways to enhance cognitive performance.

The researchers’ approach is innovative, in that it concurrently tracks cognitive arousal and expressive typing states, employing sophisticated multi-state Bayesian filtering techniques. This allows them to paint a picture of how physiological responses and cognitive states interplay to influence productivity.
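As a rough illustration of Bayesian filtering, the sketch below tracks a single hidden state from noisy observations with a scalar Kalman filter, the simplest member of that family. It is not the study's multi-state filter, whose state and observation models this article does not describe. The random-walk state model, the variance values, and the idea of treating the state as an "arousal level" are all assumptions made for the example.

```python
def kalman_filter(observations, process_var=0.01, obs_var=1.0, x0=0.0, p0=1.0):
    """Track one hidden state that drifts as a random walk.

    x is the current state estimate and p its variance (uncertainty).
    All parameter values are illustrative assumptions.
    """
    x, p = x0, p0
    estimates = []
    for y in observations:
        # Predict: the state may have drifted, so uncertainty grows.
        p = p + process_var
        # Update: blend the prediction with the new observation.
        gain = p / (p + obs_var)
        x = x + gain * (y - x)
        p = (1.0 - gain) * p
        estimates.append(x)
    return estimates

# Hypothetical constant signal observed 200 times.
estimates = kalman_filter([5.0] * 200)
```

Each step weighs the new observation against the running estimate according to their relative uncertainty, which is the core idea a multi-state filter extends to several hidden states at once.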

One particularly innovative aspect of the study involves typing dynamics as a measure of cognitive engagement and emotional expression. By examining typing patterns alongside autonomic nervous system activation, researchers gain insights into individuals' cognitive states, especially relevant in remote work environments. The integration of typing dynamics into the analysis provides a tangible link between internal cognitive processes and externalized behaviors.

“With the rise of remote work, understanding how stress impacts productivity takes on newfound significance,” Faghih says. “By uncovering the mechanisms at play, we’re paving the way for developing tools and strategies to eventually optimize performance and well-being in remote settings.”

Moreover, the study's methodology, grounded in sophisticated Bayesian models, promises not only to validate existing theories but also to unveil new patterns and insights. As the discussion turns to practical applications, the potential for integrating these findings into ergonomic workspaces and mental health support systems becomes apparent.

This research offers a glimpse into the intricate web of stress, productivity, and cognition. As we navigate the evolving landscape of work, understanding these dynamics becomes paramount, paving the way for a more productive, resilient workforce.

Alam, S., Khazaei, S., & Faghih, R. T. (2024). Unveiling productivity: The interplay of cognitive arousal and expressive typing in remote work. PLOS ONE, 19(5). https://doi.org/10.1371/journal.pone.0300786

NYU Tandon School of Engineering researchers test AI systems’ ability to solve The New York Times’ Connections puzzle

Can artificial intelligence (AI) match human skills for finding obscure connections between words?

Researchers at NYU Tandon School of Engineering turned to the daily Connections puzzle from The New York Times to find out.

Connections gives players five attempts to group 16 words into four thematically linked sets of four, progressing from “simple” groups generally connected through straightforward definitions to “tricky” ones reflecting abstract word associations requiring unconventional thinking.

In a study that will be presented at the IEEE 2024 Conference on Games – taking place in Milan, Italy from August 5 - 8 – the researchers investigated whether modern natural language processing (NLP) systems could solve these language-based puzzles.

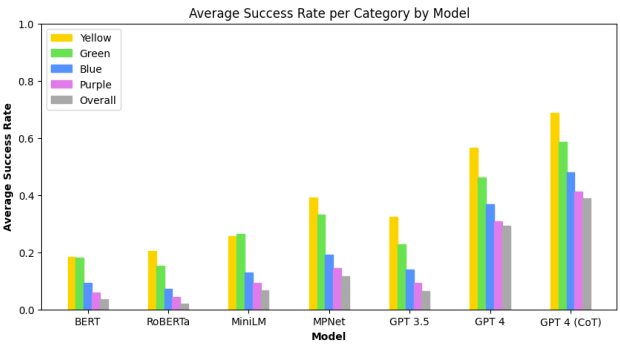

With Julian Togelius, NYU Tandon Associate Professor of Computer Science and Engineering (CSE) and Director of the Game Innovation Lab, as the study’s senior author, the team explored two AI approaches. The first leveraged GPT-3.5 and the recently released GPT-4, powerful large language models (LLMs) from OpenAI that are capable of understanding and generating human-like language. The second approach used sentence embedding models, namely BERT, RoBERTa, MPNet, and MiniLM, which encode semantic information as vector representations but lack the full language understanding and generation capabilities of LLMs.
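One way to picture the embedding-based approach is the sketch below, which greedily picks the group of four words whose vectors are most similar to one another. The grouping procedure is an assumption for illustration and may differ from the method in the paper. The two-dimensional `toy_vectors` are hand-made stand-ins for real sentence embeddings, and the example uses 8 words rather than 16 to stay small.

```python
from itertools import combinations
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))

def cohesion(words, vectors):
    """Mean pairwise cosine similarity within a candidate group."""
    pairs = list(combinations(words, 2))
    return sum(cosine(vectors[a], vectors[b]) for a, b in pairs) / len(pairs)

def greedy_groups(vectors, group_size=4):
    """Repeatedly remove the most cohesive group from the remaining words."""
    remaining = sorted(vectors)
    groups = []
    while remaining:
        best = max(combinations(remaining, group_size),
                   key=lambda g: cohesion(g, vectors))
        groups.append(set(best))
        remaining = [w for w in remaining if w not in best]
    return groups

# Hypothetical toy embeddings: fruits point one way, tools another.
toy_vectors = {
    "apple": (1.00, 0.10), "pear": (0.90, 0.20),
    "plum": (0.95, 0.05), "fig": (0.85, 0.15),
    "saw": (0.10, 1.00), "drill": (0.20, 0.90),
    "file": (0.05, 0.95), "plane": (0.15, 0.85),
}

groups = greedy_groups(toy_vectors)
```

Real puzzles are harder than this toy case because words such as "file" or "plane" plausibly fit several categories, so a nearest-neighbor grouping can lock in a wrong set early.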

The results showed that while all the AI systems could solve some of the Connections puzzles, the task remained challenging overall. GPT-4 solved about 29% of puzzles, significantly better than the embedding methods and GPT-3.5, but far from mastering the game. Notably, the models mirrored human performance in that the difficulty they experienced aligned with the puzzle's categorization from "simple" to "tricky."

“LLMs are becoming increasingly widespread, and investigating where they fail in the context of the Connections puzzle can reveal limitations in how they process semantic information,” said Graham Todd, a PhD student in the Game Innovation Lab who is the study’s lead author.

The researchers found that explicitly prompting GPT-4 to reason through the puzzles step-by-step significantly boosted its performance to just over 39% of puzzles solved.

“Our research confirms prior work showing this sort of ‘chain-of-thought’ prompting can make language models think in more structured ways,” said Timothy Merino, PhD student in the Game Innovation Lab who is an author on the study. “Asking the language models to reason about the tasks that they're accomplishing helps them perform better.”

Beyond benchmarking AI capabilities, the researchers are exploring whether models like GPT-4 could assist humans in generating novel word puzzles from scratch. This creative task could push the boundaries of how machine learning systems represent concepts and make contextual inferences.

The researchers conducted their experiments with a dataset of 250 puzzles from an online archive representing daily puzzles from June 12th, 2023 to February 16th, 2024. Along with Togelius, Todd and Merino, Sam Earle, a PhD student in the Game Innovation Lab, was also part of the research team. The study contributes to Togelius’ body of work that uses AI to improve games and vice versa. Togelius is the author of the 2019 book Playing Smart: On Games, Intelligence, and Artificial Intelligence.

arXiv:2404.11730v2 [cs.CL] 21 Apr 2024

Breakthrough study proposes enhanced algorithm for ride-pooling services

In the ever-evolving landscape of urban transportation, ride-pooling services have emerged as a promising solution, offering a shared mobility experience that is both cost-effective and environmentally friendly. However, optimizing these services to achieve high ridership while maintaining efficiency has remained a challenge. A new study by NYU Tandon transportation experts proposes a novel algorithm aimed at revolutionizing the way ride-pooling services operate.

Led by Joseph Chow, Institute Associate Professor of Civil and Urban Engineering and Deputy Director of the USDOT Tier 1 University Transportation Center C2SMARTER, the study delves into the intricacies of dynamic routing in ride-pooling services, with a particular focus on the integration of transfers within the system. Transfers, the process of passengers switching between vehicles during their journey, have long been identified as a potential strategy to enhance service availability and fleet efficiency. Yet, the implementation of transfers poses a highly complex routing problem, one that has largely been overlooked in existing literature.

The research team's solution comes in the form of a state-of-the-art dynamic routing algorithm, designed to incorporate synchronized intramodal transfers seamlessly into the ride-pooling experience. Unlike traditional approaches that focus solely on immediate decisions, the proposed algorithm adopts a forward-looking perspective, taking into account the long-term implications of routing choices.

Central to the study is the development of a simulation platform, allowing researchers to implement and test their proposed algorithm in real-world scenarios. Drawing on data from both the Sioux Falls network and the MOIA ride-pooling service in Hamburg, Germany, the team evaluated the performance of their algorithm across various operational settings.

The results of the study are promising, suggesting that the incorporation of transfers into ride-pooling services using the proposed algorithm can lead to significant improvements in fleet utilization and service quality compared to transfers without it. By accounting for the additional opportunity costs of transfer commitments, the proposed algorithm demonstrates a competitive edge over traditional myopic approaches, reducing operating costs per passenger and minimizing the number of rejected ride requests.
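The difference between a myopic and a non-myopic decision can be shown with a small sketch. It assumes each routing option carries an immediate cost plus an estimated opportunity cost for the commitment it creates. The `Option` fields, the `weight` parameter, and the numbers are hypothetical, and the paper's actual cost function approximation is not reproduced here.

```python
from dataclasses import dataclass

@dataclass
class Option:
    name: str
    immediate_cost: float    # e.g. added vehicle travel time right now
    opportunity_cost: float  # estimated future cost of the commitment

def choose_myopic(options):
    """Pick the option that is cheapest right now."""
    return min(options, key=lambda o: o.immediate_cost)

def choose_non_myopic(options, weight=1.0):
    """Pick the option with the lowest immediate plus expected future cost."""
    return min(options,
               key=lambda o: o.immediate_cost + weight * o.opportunity_cost)

# Hypothetical request: a transfer looks cheap now but ties up two vehicles.
options = [
    Option("direct ride", immediate_cost=12.0, opportunity_cost=1.0),
    Option("ride with transfer", immediate_cost=9.0, opportunity_cost=6.0),
]

myopic_choice = choose_myopic(options)
non_myopic_choice = choose_non_myopic(options)
```

In this made-up case the myopic rule commits to the transfer while the forward-looking rule prefers the direct ride, because the transfer's commitment is expected to cost more later than it saves now.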

While the findings represent a significant advancement in the field of urban transportation, the researchers acknowledge that further validation and refinement are necessary before widespread implementation. Nonetheless, the study marks a pivotal moment in the ongoing quest to optimize ride-pooling services for the cities of tomorrow.

In summary, the contributions of the research can be categorized into three main areas:

1. Development of an innovative online policy and algorithm for operating ride-pooling services with en-route transfers.

2. Identification and integration of a previously overlooked dimension in transfer decisions, leading to a more comprehensive cost function approximation model.

3. A rigorous simulation-based experiment that uses real-world data to compare various operational strategies and validate the effectiveness of the proposed algorithm.

As cities continue to grapple with the challenges of urban mobility, studies like this offer a beacon of hope, paving the way for more efficient, sustainable, and accessible transportation systems.

This research was supported by MOIA.

Namdarpour, F., Liu, B., Kuehnel, N., Zwick, F., & Chow, J. Y. J. (2024). On non-myopic internal transfers in large-scale ride-pooling systems. Transportation Research Part C: Emerging Technologies, 162, 104597. https://doi.org/10.1016/j.trc.2024.104597

NYU Tandon researchers mitigate racial bias in facial recognition technology with demographically diverse synthetic image dataset for AI training

Facial recognition technology has made great strides in accuracy thanks to advanced artificial intelligence (AI) models trained on massive datasets of face images.

These datasets often lack diversity in terms of race, ethnicity, gender, and other demographic categories, however, causing facial recognition systems to perform worse on underrepresented demographic groups compared to groups ubiquitous in the training data. In other words, the systems are less likely to accurately match different images depicting the same person if that person belongs to a group that was insufficiently represented in the training data.

This systemic bias can jeopardize the integrity and fairness of facial recognition systems deployed for security purposes or to protect individual rights and civil liberties.

Researchers at NYU Tandon School of Engineering are tackling the problem. In a recent paper, a team led by Julian Togelius, Associate Professor of Computer Science and Engineering (CSE), revealed that it successfully reduced facial recognition bias by generating highly diverse and balanced synthetic face datasets that can train facial recognition AI models to produce fairer results. The paper’s lead author is Anubhav Jain, a Ph.D. candidate in CSE.

The team applied an "evolutionary algorithm" to control the output of StyleGAN2, an existing generative AI model that creates high-quality artificial face images and was initially trained on the Flickr Faces High Quality Dataset (FFHQ). The method is a "zero-shot" technique, meaning the researchers used the model as-is, without additional training.

The algorithm the researchers developed searches in the model’s latent space until it generates an equal balance of synthetic faces with appropriate demographic representations. The team was able to produce a dataset of 13.5 million unique synthetic face images, with 50,000 distinct digital identities for each of six major racial groups: White, Black, Indian, Asian, Hispanic and Middle Eastern.
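To make the idea of an evolutionary search in a latent space concrete, here is a minimal toy sketch in Python. It is not the researchers' algorithm and does not use StyleGAN2: the 4-dimensional latent space, the `GROUP_CENTRES` table, and the `group_score` function are all hypothetical stand-ins for "generate an image from a latent vector, then score it with a demographic classifier." It only illustrates the general pattern of mutating latent vectors, keeping the best-scoring ones, and repeating until each group has the same number of samples.

```python
import random

# Hypothetical stand-in for "generate an image from latent z, then classify it".
# Each made-up group has a centre in a toy 4-D latent space; a real pipeline
# would call a generator and a demographic classifier instead.
GROUP_CENTRES = {
    "group_a": [2.0, 0.0, 0.0, 0.0],
    "group_b": [0.0, 2.0, 0.0, 0.0],
    "group_c": [0.0, 0.0, 2.0, 0.0],
}


def group_score(z, group):
    """Higher is better: negative squared distance from z to the group's centre."""
    centre = GROUP_CENTRES[group]
    return -sum((a - b) ** 2 for a, b in zip(z, centre))


def predicted_group(z):
    """The group whose toy score is highest for latent vector z."""
    return max(GROUP_CENTRES, key=lambda g: group_score(z, g))


def evolve_latent(group, rng, pop_size=20, generations=50, sigma=0.3):
    """Elitist evolutionary search: keep the best half, mutate it to refill."""
    dim = len(GROUP_CENTRES[group])
    population = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=lambda z: group_score(z, group), reverse=True)
        parents = population[: pop_size // 2]
        # Each parent produces one child by adding Gaussian noise to every coordinate.
        children = [[x + rng.gauss(0, sigma) for x in p] for p in parents]
        population = parents + children
    return max(population, key=lambda z: group_score(z, group))


def build_balanced_set(per_group, seed=0):
    """Run the search the same number of times for every group."""
    rng = random.Random(seed)
    return {
        g: [evolve_latent(g, rng) for _ in range(per_group)]
        for g in GROUP_CENTRES
    }


if __name__ == "__main__":
    balanced = build_balanced_set(per_group=3)
    for group, latents in balanced.items():
        print(group, len(latents), [predicted_group(z) for z in latents])
```

Because the generator is only queried and never retrained, a search of this kind is compatible with the "zero-shot" framing above. The toy version makes no attempt to keep the resulting identities distinct, which a real dataset would need.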

The researchers then pre-trained three facial recognition models — ArcFace, AdaFace and ElasticFace — on the large, balanced synthetic dataset they generated.

Pre-training on the synthetic data not only boosted overall accuracy compared to models trained on existing imbalanced datasets, but also significantly reduced demographic bias. The trained models showed more equitable accuracy across all racial groups than existing models, which perform poorly on underrepresented minorities.

The synthetic data proved similarly effective for improving the fairness of algorithms analyzing face images for attributes like gender and ethnicity categorization.

By avoiding the need to collect and store real people's face data, the synthetic approach delivers the added benefit of protecting individual privacy, a concern when training AI models on images of actual people’s faces. And by generating balanced representations across demographic groups, it overcomes the bias limitations of existing face datasets and models.

The researchers have open-sourced their code to enable others to reproduce and build upon their work developing unbiased, high-accuracy facial recognition and analysis capabilities. This could pave the way for deploying the technology more responsibly across security, law enforcement and other sensitive applications where fairness is paramount.

This study — whose authors also include Rishit Dholakia (’22) MS in Computer Science, NYU Courant; and Nasir Memon, Dean of Engineering at NYU Shanghai, NYU Tandon ECE professor and faculty member of NYU Center for Cybersecurity — builds upon a paper the researchers shared at the IEEE International Joint Conference on Biometrics (IJCB), September 25-28, 2023.

Anubhav Jain, Rishit Dholakia, Nasir Memon, et al. Zero-shot demographically unbiased image generation from an existing biased StyleGAN. TechRxiv. December 02, 2023

Unveiling biochemical defenses against chemical warfare

In the clandestine world of biochemical warfare, researchers are continuously seeking innovative strategies to counteract lethal agents. Researchers led by Jin Kim Montclare, Professor in the Department of Chemical and Biomolecular Engineering, have embarked on a pioneering mission to develop enzymatic defenses against chemical threats, as revealed in a recent study.

The team's focus lies in crafting enzymes capable of neutralizing notorious warfare agents such as VX, renowned for their swift and devastating effects on the nervous system. Through meticulous computational design, they harnessed the power of enzymes like phosphotriesterase (PTE), traditionally adept at detoxifying organophosphates found in pesticides, to target VX agents.

The study utilized computational techniques to design a diverse library of PTE variants optimized for targeting lethal organophosphorus nerve agents. Leveraging advanced modeling software, such as Rosetta, the researchers meticulously crafted enzyme variants tailored to enhance efficacy against these formidable threats. When they tested these new enzyme versions in the lab, they found that three of them were much better at breaking down VX and VR. Their findings showcased the effectiveness of these engineered enzymes in neutralizing these chemicals.

A key problem in treating these agents lies in the urgency of application. In the event of exposure, rapid intervention becomes paramount. The research emphasizes potential applications, ranging from prophylactic measures to immediate administration upon exposure, underscoring the imperative for swift action to mitigate the agents' lethal effects.

Another key issue is protein stability: the proteins must stay intact and remain at the site of affected tissue, which is crucial for therapeutic applications. Ensuring enzymes remain stable within the body enhances their longevity and effectiveness, offering prolonged protection against chemical agents.

Looking ahead, Montclare's team aims to optimize enzyme stability and efficacy further, paving the way for practical applications in chemical defense and therapeutics. Their work represents a beacon of hope in the ongoing battle against chemical threats, promising safer and more effective strategies to safeguard lives.

Kronenberg, J., Chu, S., Olsen, A., Britton, D., Halvorsen, L., Guo, S., Lakshmi, A., Chen, J., Kulapurathazhe, M. J., Baker, C. A., Wadsworth, B. C., Van Acker, C. J., Lehman, J. G., Otto, T. C., Renfrew, P. D., Bonneau, R., & Montclare, J. K. (2024). Computational design of phosphotriesterase improves v‐agent degradation efficiency. ChemistryOpen. https://doi.org/10.1002/open.202300263

Cutting-edge enzyme research fights back against plastic pollution

Since the 1950s, the surge in global plastic production has paralleled a concerning rise in plastic waste. In the United States alone, a staggering 35 million tons of plastic waste were generated in 2017, with only a fraction being recycled or combusted, leaving the majority to languish in landfills. Polyethylene terephthalate (PET), a key contributor to plastic waste, particularly from food packaging, poses significant environmental challenges due to its slow decomposition and pollution.

Efforts to tackle this issue have intensified, with researchers exploring innovative solutions such as harnessing the power of microorganisms and enzymes for PET degradation. However, existing enzymes often fall short in terms of efficiency, especially at temperatures conducive to industrial applications.

Enter cutinase, a promising enzyme known for its ability to break down PET effectively. Derived from organisms like Fusarium solani, cutinase has shown remarkable potential in degrading PET and other polymeric substrates. Recent breakthroughs include the discovery of leaf and branch compost cutinase (LCC), exhibiting unprecedented PET degradation rates at high temperatures, and IsPETase, which excels at lower temperatures.

In a recent study, researchers from NYU Tandon led by Jin Kim Montclare, Professor of Chemical and Biomolecular Engineering, presented a novel computational screening workflow that uses advanced protocols to design variants of LCC with improved PET degradation capabilities similar to those of IsPETase. By integrating computational modeling with biochemical assays, they identified promising variants exhibiting increased hydrolysis behavior, even at moderate temperatures.

This study underscores the transformative potential of computational screening in enzyme redesign, offering new avenues for addressing plastic pollution. By incorporating insights from natural enzymes like IsPETase, researchers are paving the way for the development of highly efficient PET-hydrolyzing enzymes with significant implications for environmental sustainability.

Britton, D., Liu, C., Xiao, Y., Jia, S., Legocki, J., Kronenberg, J., & Montclare, J. K. (2024). Protein-engineered leaf and branch compost cutinase variants using computational screening and IsPETase homology. Catalysis Today, 433, 114659. https://doi.org/10.1016/j.cattod.2024.114659