Research News

Biomedical engineers show potential of new peptide for fighting Alzheimer’s disease and COVID-19

NYU Tandon professors Mary Cowman and Jin Ryoun Kim recently published a paper describing a novel peptide with broad therapeutic potential to combat chronic inflammation in multiple diseases. The peptide, called Amilo 5-MER, was discovered by Professor David Naor and his colleague Dr. Shmuel Jaffe Cohen in the Faculty of Medicine at the Hebrew University of Jerusalem in Israel. They showed that Amilo 5-MER has anti-inflammatory effects that reduce pathological and clinical symptoms in mouse models for rheumatoid arthritis, inflammatory bowel disease, and multiple sclerosis.

Based on Naor's finding that the peptide binds to several proteins associated with inflammation, including Serum Amyloid A (SAA), Cowman and Kim proposed a working mechanism for the peptide. In a collaboration between the Israeli and Tandon teams, they showed that the peptide inhibits aggregation of SAA into more pro-inflammatory and pro-amyloidogenic forms. Amyloid-type aggregation of proteins is associated with many diseases, and the Amilo 5-MER peptide has been found to bind to other aggregating proteins that play key roles in chronic inflammation and neurodegenerative diseases. Thus, the peptide could have significant therapeutic value in many other pathological conditions, such as Alzheimer's disease, AA amyloidosis, and even COVID-19.

The project was supported by the Ines Mandl Research Foundation (IMRF), which is dedicated to providing research funding in the fight against connective tissue disease. It is the legacy of Dr. Ines Mandl, who was the first woman to graduate from the Polytechnic Institute of Brooklyn (today’s NYU Tandon School of Engineering) with a Ph.D. in chemistry in 1949.

On the mechanism and utility of laser-induced nucleation using microfluidics

This research will be led by Ryan Hartman, professor, with co-principal investigator Bruce Garetz, professor and Associate Chair of the Department of Chemical and Biomolecular Engineering at NYU Tandon.

Why does shining a laser on some liquid solutions cause them to crystallize? The researchers were awarded a National Science Foundation (Chemical, Bioengineering, Environmental, and Transport Systems) grant to elucidate the mechanisms by which light can induce nucleation, the process by which molecules cluster together and organize during the earliest stages of crystallization. Understanding these mechanisms could lead to “greener” industrial processes for making a wide range of everyday materials and chemicals, such as dyes and pharmaceuticals, saving energy and reducing the need for large amounts of chemical solvents.

In addition to reducing the environmental impact of manufacturing crystalline materials, laser-induced nucleation has the potential to provide better control over crystal shape and the arrangement of molecules in the crystals during the manufacturing process, properties that can be optimized for a specific application of the material. To make greener crystallization part of undergraduate and graduate education, the project will create educational activities that train students from diverse backgrounds to engineer solutions based on this new approach to crystallization, making it an inherent part of basic chemical engineering education.

Specifically, the research program will design and study microfluidic nonphotochemical, laser-induced nucleation (NPLIN) of preselected organic molecules. To understand light-field induced nucleation mechanisms, the investigators will examine molecules that crystallize into different morphologies, into different polymorphs, and that follow single-step versus two-step nucleation. The team will look at three different mechanisms:

- The optical Kerr effect by which light can align molecules in a disordered solute cluster and thereby induce nucleation

- Dielectric polarization in which light lowers the energy of slightly sub-critical solute clusters

- The absorption of light by colloidal impurity particles resulting in the formation of nanobubbles that induce nucleation.

To enable much of this research, the team will design high-pressure microfluidics coupled with a pulsed, collimated laser beam, and will investigate laser-induced crystallization of ibuprofen, carbamazepine, and glycine. The use of microfluidics will contribute a quantitative experimental methodology for NPLIN that can also distinguish single-step nucleation from two-step nucleation. The research discoveries will set the foundation for translating fundamental findings to practical applications.

Forecasting e-scooter substitution of direct and access trips by mode and distance

This research was performed under the direction of Joseph Chow, industry associate professor of civil and urban engineering and Deputy Director of the C2SMART transportation research center at NYU Tandon. Authors included former C2SMART graduate students Mina Lee and Gyugeun Yoon, and Brian Yueshuai He of the University of California, Los Angeles.

The e-scooter sharing ecosystem is now one of the fastest emerging micromobility services. As of 2018, such e-scooter sharing companies as Lime and Bird operate in over 100 cities around the world. This year New York City chose Lime, Bird, and VeoRide as the first participants in its inaugural electric scooter pilot.

Sector growth is being driven by the low entry barrier and by the potential of e-scooters to fill a mobility gap in cities that have weaker public transit infrastructure: they provide better access to transit access points and offer an economical means of traveling short distances as part of a Mobility-as-a-Service (MaaS) system. They also reduce traffic congestion and fuel use, which can be a catalyst for adoption in cities where automobiles are the most common mode of transportation.

In a new predictive study, the researchers created forecast models for motorized stand-up scooters (e-scooters) in four U.S. cities based on user age, population, land area, and the number of scooters. Using data from Portland, Ore.; Austin, Tex.; Chicago, Ill.; and New York City, the model predicted 75,000 daily e-scooter trips in Manhattan for a deployment of 2,000 scooters, which translates to $77 million in annual revenue. The investigators assessed the number of daily trips by the alternative modes of transportation that e-scooters would likely substitute, based on statistical similarity.
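The headline forecast implies a few per-unit rates that are easy to check. The short sketch below only rearranges the figures quoted in this article; the 365 operating days per year is our assumption for illustration, not a figure from the study.

```python
# Figures quoted in the article for the Manhattan forecast.
DAILY_TRIPS = 75_000
FLEET_SIZE = 2_000
ANNUAL_REVENUE_USD = 77_000_000

# Assumption (not from the study): the service operates 365 days a year.
OPERATING_DAYS = 365

trips_per_scooter_per_day = DAILY_TRIPS / FLEET_SIZE
annual_trips = DAILY_TRIPS * OPERATING_DAYS
implied_revenue_per_trip = ANNUAL_REVENUE_USD / annual_trips

print(f"Trips per scooter per day: {trips_per_scooter_per_day:.1f}")
print(f"Implied revenue per trip: ${implied_revenue_per_trip:.2f}")
```

Under that assumption, the forecast works out to 37.5 trips per scooter per day and roughly $2.81 in revenue per trip.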

The model parameters reveal a relationship with direct trips by bike, walking, carpool, automobile, and taxi, as well as access/egress trips with public transit in Manhattan. The study estimates that e-scooters could replace 32% of carpool, 13% of bike, and 7.2% of taxi trips. E-scooters are more likely to compete with the other modes at shorter distances than at longer ones. The results statistically support the hypothesis that e-scooters play distinct roles as direct trip-substituting at short distances and access trip-substituting at longer distances.

In the study, published in the Elsevier journal Transportation Research Part D: Transport and Environment, the investigators argued that their research results are valuable for government or city transportation planners, as the growing popularity of environment-friendly transportation makes e-scooters a sustainable mode providing significantly less pollution. In particular, the confirmed hypothesis that e-scooters can play a role for access trips lends support to their value in increasing the public’s accessibility to public transit.

“The economical demand analysis we proposed can promote the development of policy and infrastructure relating to e-scooter systems,” they write. “For example, e-scooters are driving much of the discussion on curb management practices, as well as mobility data sharing and as a core part of Mobility-as-a-Service platforms.”

This research was conducted with support from the C2SMART University Transportation Center (USDOT #69A3551747124).

A Node-Charge Graph-Based Online Carshare Rebalancing Policy with Capacitated Electric Charging

Authors include Joseph Chow, Institute Associate Professor of civil and urban engineering and deputy director of the C2SMART transportation research center at NYU Tandon; principal author Theodoros P. Pantelidis, a former Ph.D. student under Chow’s direction; Saif Eddin G. Jabari, global network assistant professor of civil and urban engineering at NYU Abu Dhabi and NYU Tandon; Li Li, recently awarded her Ph.D. at NYU Abu Dhabi; and Tai-Yu Ma of the Luxembourg Institute of Socio-Economic Research.

As the makeup of car-share fleets reflects the global shift to electric vehicles (EVs), operators will need to address unique challenges to EV fleet scheduling. These include user time and distance requirements, time needed to recharge vehicles, and the distribution of charging facilities, including the limited availability of fast-charging infrastructure (as of 2019 there were seven fast DC public charging stations in Manhattan, including Tesla stations). Because of such factors, the viability of electric car-sharing operations depends on fleet rebalancing algorithms.

The stakes are high because potential customers may end up waiting or accessing a farther location, or even balk at using the service altogether, if there is no available vehicle within a reasonable proximity (which may involve substantial access, e.g. taking a subway from downtown Manhattan to midtown to pick up a car) or no parking or return location available near the destination.

In a new study published in the journal Transportation Science, the authors present an algorithmic technique based on graph theory that allows electric mobility services like carshares to reduce operating expenses, in part because the algorithm operates in real time and anticipates future costs. This could make it easier for fleets to switch to EV operations in the future.

The common practice for carshare scheduling is for users to book specific time slots and reserve a vehicle from a specific location. The return location is required to be the same for “two-way” systems but is relaxed for “one-way” systems. Examples of free-floating systems were the BMW ReachNow car sharing system in Brooklyn (until 2018) and Car2Go in New York City. These two systems recently merged to become ShareNow, which is no longer in the North American market.

Rebalancing involves having either the system staff or users (through incentives) periodically drop off vehicles at locations that would better match supply to demand. While there is an abundant literature on methods to handle carshare rebalancing, research on rebalancing EVs to optimize access to charging stations is limited: there is a lack of models formulated for one-way EV carsharing rebalancing that capture all of the following: 1) the stochastic dynamic nature of rebalancing with stochastic demand; 2) users’ access cost to vehicles; and 3) capacities at EV charging stations.

The researchers offer an innovative rebalancing policy based on cost function approximation (CFA), in which the cost function is modeled as a relocation problem on a novel node-charge graph structure. This structure allows all three challenges to be addressed.
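To give a feel for what a graph over both location and battery state might look like, here is a toy sketch in Python. It is not the authors' formulation or their CFA policy: the zone layout, the integer charge levels, the unit costs, and the function names `build_node_charge_graph` and `cheapest_cost` are all hypothetical, and charger capacity and demand are left out. Each node is a (zone, charge level) pair, driving uses up charge, charging is possible only in zones with a station, and a plain shortest-path search finds the cheapest way to bring a vehicle to a target zone with enough charge.

```python
import heapq


def build_node_charge_graph(dist, stations, max_charge, charge_cost=1.0):
    """Return {(zone, charge): [(next_node, cost), ...]} for a toy fleet.

    dist[i][j] is the number of charge units used driving i -> j
    (hypothetical data); stations is the set of zones with a charger.
    """
    n = len(dist)
    graph = {(z, c): [] for z in range(n) for c in range(max_charge + 1)}
    for (z, c), arcs in graph.items():
        # Relocation arcs: driving to another zone consumes charge.
        for j in range(n):
            if j != z and c - dist[z][j] >= 0:
                arcs.append(((j, c - dist[z][j]), float(dist[z][j])))
        # Charging arcs: only in station zones, one charge unit per step.
        if z in stations and c < max_charge:
            arcs.append(((z, c + 1), charge_cost))
    return graph


def cheapest_cost(graph, start, goal_zone, min_charge):
    """Dijkstra from start to any node in goal_zone with charge >= min_charge."""
    best = {start: 0.0}
    heap = [(0.0, start)]
    while heap:
        cost, node = heapq.heappop(heap)
        if cost > best.get(node, float("inf")):
            continue  # stale heap entry
        if node[0] == goal_zone and node[1] >= min_charge:
            return cost
        for nxt, weight in graph[node]:
            if cost + weight < best.get(nxt, float("inf")):
                best[nxt] = cost + weight
                heapq.heappush(heap, (cost + weight, nxt))
    return None  # target state is unreachable


# Three zones in a row; only zone 1 has a charger (all values are made up).
distances = [[0, 1, 2], [1, 0, 1], [2, 1, 0]]
graph = build_node_charge_graph(distances, stations={1}, max_charge=3)
cost = cheapest_cost(graph, start=(0, 1), goal_zone=2, min_charge=2)
print(cost)
```

In this toy case the vehicle cannot reach zone 2 directly with enough charge, so the cheapest plan detours through the station in zone 1, which is the kind of interaction between relocation and charging that a node-charge representation makes explicit.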

The researchers validated the algorithm in a case study of electric carshare in Brooklyn, New York, with demand data shared from BMW ReachNow operations in September 2017 (262 vehicle fleet, 231 pickups per day, 303 traffic analysis zones) and charging station location data (18 charging stations with 4 port capacities). The proposed non-myopic rebalancing heuristic reduces the cost increase compared to myopic rebalancing by 38%. Other managerial insights are further discussed.

The researchers reported that their formulation allowed them to explicitly consider a customer’s charging demand profile and optimize rebalancing operations of idle vehicles accordingly in an online system. They also reported that their approach solved the relocation problem in 15% to 89% of the computational time of commercial solvers, with optimality gaps of only 7% to 35% in a single rebalancing decision time period.

The study’s authors say future research directions include dynamic demand (a function of time, price and other factors), data-driven (machine learning) algorithms for updating, a more realistic/commercial simulation environment using data from larger operations, and detailed cost-benefit analysis of the tradeoffs between EVs and regular vehicles.

This research was supported by the C2SMART University Transportation Center, the Luxembourg National Research Fund, the New York University Abu Dhabi (NYUAD) Center for Interacting Urban Networks (CITIES), Tamkeen, and the Swiss Re Institute through the Quantum Cities™ Initiative. Data was provided by BMW ReachNow car-sharing operations in Brooklyn, New York, USA.

A study on the association of socioeconomic and physical cofactors contributing to power restoration after Hurricane Maria

This research was led by Masoud Ghandehari, professor in the Department of Civil and Urban Engineering at NYU Tandon, with Shams Azad, a Ph.D. student under Ghandehari’s guidance.

The electric power infrastructure in Puerto Rico suffered substantial damage as Hurricane Maria crossed the island on September 20, 2017. Despite significant efforts made by authorities, it took almost a year to achieve near-complete power recovery. The electrical power failure contributed to the loss of life and the slow pace of disaster recovery. Hurricane Maria caused extensive damage to Puerto Rico’s power lines, leaving on average 80% of the distribution system out of order for months.

In this study, imagery of daily nighttime lights from space was used to measure the loss and restoration of electric power every day at 500-meter spatial resolution. The researchers monitored the island’s 889 county subdivisions for over eight months using Visible Infrared Imaging Radiometer Suite (VIIRS) sensors, which showed visually where power was absent or present, and formulated a regression model to identify the status of the power recovery effort.

The hurricane hit the island with its maximum strength at the point of landfall, which corresponded to massive destruction across all physical infrastructure and a longer recovery period. Indeed, the researchers found that every 50-kilometer increase in distance from the landfall corresponded to 30% fewer days without power. Road connectivity was a major issue for the restoration effort: areas having a direct connection with high-speed roads recovered more quickly, with 7% fewer outage days. Areas that were affected by moderate landslides needed 5.5% more days to recover, and high-landslide areas needed 11.4% more.

The researchers found that financially disadvantaged areas suffered more from the extended outage. For every 10% increase in population below the poverty line, there was a 2% increase in recovery time. While financial status did impact restoration efforts, the investigators did not find any additional association with race or ethnicity in the study.

Spatial-dynamic matching equilibrium models of New York City taxi and Uber markets

This research was led by Joseph Chow, assistant professor of civil and urban engineering and deputy director of the C2SMART transportation research center at NYU Tandon, with Kaan Ozbay, director of C2SMART, and lead author Diego Correa, a former Ph.D. student, now General Director of Mobility of the City of Cuenca, Ecuador.

With the rapidly changing landscape for taxis, ride-hailing, and ride-sourcing services, public agencies have an urgent need to understand how such new services impact social welfare, as well as how customers are matched to service providers, and how ride-sourcing operations, surge pricing policy and more are evaluated.

The researchers conducted an empirical study to understand these problems specifically for the ride-sharing service Uber in New York City (NYC). Since key data is not readily available for the service, the team deployed a dynamic spatial equilibrium model using data on distribution, service, and revenue for NYC taxi fleets, data that is readily available from the city. Specifically, they performed spatial distribution analyses using data on demand activities, service coverage, fleet sizes, matches (rider pickups), and social welfare (the social compensation or detriment to riders of pricing and availability of service) by zone and time of day. They tied that to Uber pickup data for a specific time period in NYC.

They found, for example, that the NYC taxi industry generates $495,900 in consumer surplus and $1,022,000 in taxi profits, representing the aggregate surplus of 16,400 taxi-passenger matches. For the Uber market, welfare estimates indicate $73,300 in consumer surplus and $151,300 in Uber profits, representing the aggregate surplus of 2,250 Uber-passenger matches in the 4-hour analysis period.

Additionally, taxi demand over the study period is 20,949, while full matches are 16,433, implying that 4,516 demanded customer trips are unmet each hour, or an average of 452 every 6 minutes. This contrasts with the 5,537 taxis that are vacant at any one time. The externalities of this inefficiency are not directly captured by the model. However, the consumer surplus of the other mobility options reflects the level of congestion in the roadways due to the taxi and Uber fleet scenarios. It can guide policy for improving lower-externality options. For the congestion charging scenario for Uber, a $5 charge should be accompanied by at least a 1.20% increase in consumer surplus in lower-externality modes like public transit. This can be achieved by ensuring that enough of the congestion charge is diverted to improving transit to make up that difference.

Future research will consider collaborating with local agencies to evaluate different Uber policies.
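The unmet-demand figures follow directly from the demand and match counts. The sketch below reproduces that arithmetic using only the numbers quoted above; the variable names are ours.

```python
# Figures quoted in the article for the taxi market.
TAXI_DEMAND = 20_949
TAXI_MATCHES = 16_433
INTERVAL_MINUTES = 6

unmet_trips = TAXI_DEMAND - TAXI_MATCHES
intervals_per_hour = 60 // INTERVAL_MINUTES
unmet_per_interval = unmet_trips / intervals_per_hour
match_rate = TAXI_MATCHES / TAXI_DEMAND

print(f"Unmet trips: {unmet_trips}")
print(f"Unmet trips per {INTERVAL_MINUTES} minutes: {unmet_per_interval:.0f}")
print(f"Share of demand matched: {match_rate:.1%}")
```

The difference is 4,516 trips, or about 452 per 6-minute interval, and it corresponds to roughly 78% of demanded trips being matched.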

This research was partially supported by the National Science Foundation Grant No. CMMI-1634973, the C2SMART Tier-1 University Transportation Center and the Secretaría de Educación Superior, Ciencia, Tecnología e Innovación (SENESCYT) Ecuador.

Robust reinforcement learning: A case study in linear quadratic regulation

This research, whose principal author is Ph.D. student Bo Pang, was directed by Zhong-Ping Jiang, professor in the Department of Electrical and Computer Engineering.

As an important and popular method in reinforcement learning (RL), policy iteration has been widely studied by researchers and utilized in different kinds of real-life applications by practitioners.

Policy iteration involves two steps: policy evaluation and policy improvement. In policy evaluation, a given policy is evaluated based on a scalar performance index. Then this performance index is utilized to generate a new control policy in policy improvement. These two steps are iterated in turn, to find the solution of the RL problem at hand. When all the information involved in this process is exactly known, the convergence to the optimal solution can be provably guaranteed, by exploiting the monotonicity property of the policy improvement step. That is, the performance of the newly generated policy is no worse than that of the given policy in each iteration.
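The two steps can be made concrete on the simplest possible case. The sketch below is a minimal illustration for a scalar discrete-time LQR problem with known dynamics, x[t+1] = a*x[t] + b*u[t] and stage cost q*x^2 + r*u^2; the numerical values and function names are our own choices for illustration, not taken from the paper.

```python
def evaluate(k, a, b, q, r):
    """Policy evaluation: cost coefficient P of u = -k*x, so J(x0) = P*x0**2."""
    closed_loop = a - b * k
    if abs(closed_loop) >= 1.0:
        raise ValueError("policy is not stabilizing")
    return (q + r * k * k) / (1.0 - closed_loop ** 2)


def improve(p, a, b, r):
    """Policy improvement: the gain that is greedy with respect to cost P."""
    return a * b * p / (r + b * b * p)


def policy_iteration(k0, a, b, q, r, iterations=20):
    """Alternate evaluation and improvement, recording the cost of each policy."""
    k, costs = k0, []
    for _ in range(iterations):
        p = evaluate(k, a, b, q, r)
        costs.append(p)
        k = improve(p, a, b, r)
    return k, costs


# Illustrative values (assumptions): an unstable plant and a stabilizing start.
a, b, q, r = 1.2, 1.0, 1.0, 1.0
k_final, costs = policy_iteration(k0=1.0, a=a, b=b, q=q, r=r)

# At the optimum, P satisfies the scalar discrete-time Riccati equation.
p_final = costs[-1]
riccati_residual = (
    q + a * a * p_final - (a * b * p_final) ** 2 / (r + b * b * p_final) - p_final
)
print(costs[:4], riccati_residual)
```

The recorded costs never increase from one iteration to the next, which is the monotonicity property described above, and the final cost satisfies the Riccati equation to within floating-point precision.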

However, in practice policy evaluation or policy improvement can hardly be implemented precisely, because of the existence of various errors, which may be induced by function approximation, state estimation, sensor noise, external disturbance and so on. Therefore, a natural question to ask is: when is a policy iteration algorithm robust to the errors in the learning process? In other words, under what conditions on the errors does the policy iteration still converge to (a neighborhood of) the optimal solution? And how can the size of this neighborhood be quantified?

This paper studies the robustness of reinforcement learning algorithms to errors in the learning process. Specifically, the researchers revisit the benchmark problem of discrete-time linear quadratic regulation (LQR) and study the long-standing open question: Under what conditions is the policy iteration method robustly stable from a dynamical systems perspective?

Using advanced stability results in control theory, they show that policy iteration for LQR is inherently robust to small errors in the learning process and enjoys small-disturbance input-to-state stability: whenever the error in each iteration is bounded and small, the solutions of the policy iteration algorithm are also bounded, and, moreover, enter and stay in a small neighborhood of the optimal LQR solution. As an application, a novel off-policy optimistic least-squares policy iteration for the LQR problem is proposed for the case in which the system dynamics are subjected to additive stochastic disturbances. The proposed new results in robust reinforcement learning are validated by a numerical example.
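A small numerical experiment can illustrate the flavor of this result, though it is not the paper's analysis or its proposed algorithm. The sketch below runs policy iteration on a scalar LQR problem while deliberately corrupting each policy evaluation with a bounded error of size `eps`; the system values, the alternating-sign error pattern, and the function names are assumptions chosen for illustration.

```python
import math


def true_cost(k, a, b, q, r):
    """Exact cost coefficient of the policy u = -k*x for the scalar system."""
    closed_loop = a - b * k
    assert abs(closed_loop) < 1.0, "policy must remain stabilizing"
    return (q + r * k * k) / (1.0 - closed_loop ** 2)


def greedy_gain(p, a, b, r):
    """Improvement step applied to a (possibly erroneous) cost estimate p."""
    return a * b * p / (r + b * b * p)


def noisy_policy_iteration(k0, a, b, q, r, eps, iterations=30):
    """Policy iteration in which every evaluation is off by +eps or -eps."""
    k, costs = k0, []
    for i in range(iterations):
        p_true = true_cost(k, a, b, q, r)
        costs.append(p_true)
        error = eps if i % 2 == 0 else -eps  # bounded, deterministic error
        k = greedy_gain(p_true + error, a, b, r)
    return costs


def optimal_cost(a, b, q, r):
    """Positive root of the scalar Riccati equation, for comparison."""
    c1 = r - q * b * b - a * a * r
    return (-c1 + math.sqrt(c1 * c1 + 4.0 * b * b * q * r)) / (2.0 * b * b)


a, b, q, r = 1.2, 1.0, 1.0, 1.0  # illustrative values (assumptions)
eps = 0.05
costs = noisy_policy_iteration(k0=1.0, a=a, b=b, q=q, r=r, eps=eps)
p_star = optimal_cost(a, b, q, r)
worst_gap = max(abs(p - p_star) for p in costs[5:])
print(p_star, worst_gap)
```

In this toy run the true cost of the learned policies does not converge exactly, but after a few iterations it stays within a gap of the optimum that is smaller than the injected error, consistent with the "enter and stay in a small neighborhood" behavior described above.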

This work was supported in part by the U.S. National Science Foundation.

Asymptotic trajectory tracking of autonomous bicycles via backstepping and optimal control

Zhong-Ping Jiang, professor of electrical and computer engineering (ECE) and member of the C2SMART transportation research center at NYU Tandon, directed this research. Leilei Cui, a Ph.D. student in the ECE Department, is lead author. Zhengyou Zhang and Shuai Wang from Tencent are co-authors.

This paper studies the trajectory tracking and balance control problem for an autonomous bicycle, one that is ridden like a normal bicycle before automatically traveling by itself to the next user. Such a bicycle is a non-minimum phase, strongly nonlinear system.

As compared with most existing methods dealing only with approximate trajectory tracking, this paper solves a longstanding open problem in bicycle control: how to develop a constructive design to achieve asymptotic trajectory tracking with balance. The crucial strategy is to view the controlled bicycle dynamics from an interconnected system perspective.

More specifically, the nonlinear dynamics of the autonomous bicycle are decomposed into two interconnected subsystems: a tracking subsystem and a balancing subsystem. For the tracking subsystem, the popular backstepping approach is applied to determine the propulsive force of the bicycle. For the balancing subsystem, optimal control is applied to determine the steering angular velocity of the handlebar in order to balance the bicycle and align it with the desired yaw angle. To tackle the strong coupling between the tracking and the balancing subsystems, the small-gain technique is applied for the first time to prove the asymptotic stability of the closed-loop bicycle system. Finally, the efficacy of the proposed exact trajectory tracking control methodology is validated by numerical simulations.

"Our contribution to this field is principally at the level of new theoretical development," said Jiang, adding that the key challenge is in the bicycle's inherent instability and more degrees of freedom than the number of controllers. "Although the bicycle looks simple, it is much more difficult to control than driving a car because riding a bike needs to simultaneously track a trajectory and balance the body of the bike. So a new theory is needed for the design of an AI-based, universal controller." He said the work holds great potential for developing control architectures for complex systems beyond bicycles.

The work was done under the aegis of the Control and Network (CAN) Lab led by Jiang, which consists of about 10 people and focuses on the development of fundamental principles and tools for the stability analysis and control of nonlinear dynamical networks, with applications to information, mechanical and biological systems.

The research was funded by the National Science Foundation (Grant number 10.13039/100000001).

Self-assembly of stimuli-responsive coiled-coil fibrous hydrogels

Jin Kim Montclare, professor of chemical and biomolecular engineering, with affiliations at NYU Langone Health and NYU College of Dentistry, directed this research with first author Michael Meleties, fellow Ph.D. student Dustin Britton, postdoctoral associate Priya Katyal, and undergraduate research assistant Bonnie Lin.

Owing to their tunable properties, hydrogels comprising stimuli-sensitive polymers are among the most appealing molecular scaffolds because their versatility allows for applications in tissue engineering, drug delivery and other biomedical fields.

Peptides and proteins are increasingly popular as building blocks because they can be stimulated to self-assemble into nanostructures such as nanoparticles or nanofibers, which enables gelation, the formation of supramolecular hydrogels that can trap water and small molecules. To generate such smart biomaterials, engineers are developing systems that can respond to a multitude of stimuli, including heat. Although thermosensitive hydrogels are among the most widely studied and best-understood classes of protein biomaterials, substantial progress is also reportedly being made in incorporating responsiveness to other stimuli, including pH, light, ionic strength, redox, and the addition of small molecules.

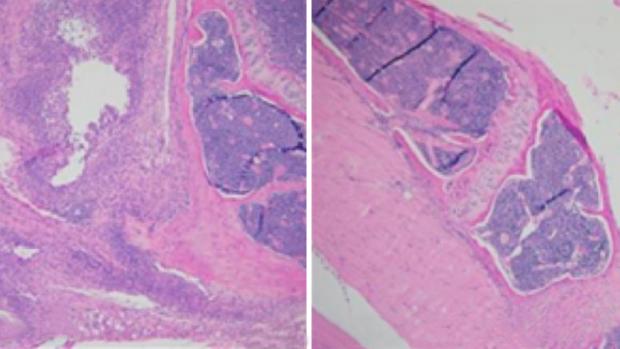

The NYU Tandon researchers, who previously reported a responsive hydrogel formed using a coiled-coil protein, Q, expanded their studies to identify the gelation of Q protein at distinct temperatures and pH conditions.

Using transmission electron microscopy, rheology and structural analyses, they observed that Q self-assembles and forms fiber-based hydrogels exhibiting upper critical solution temperature (UCST) behavior with increased elastic properties at pH 7.4 and pH 10. At pH 6, however, Q forms polydisperse nanoparticles, which do not further self-assemble or undergo gelation. The high net positive charge of Q at pH 6 creates significant electrostatic repulsion, preventing its gelation. This study will potentially guide the development of novel scaffolds and functional biomaterials that are sensitive to biologically relevant stimuli.

Montclare explained that upper critical solution temperature (UCST) phase behavior is characterized by a solution that will form a hydrogel when it is cooled below a critical temperature.

"In our case, it is due to the physical crosslinking/entanglement of fibers that our fiber-based hydrogel forms when cooled," she said, adding that when the temperature is raised above the critical temperature, the hydrogel transitions back into solution and most of the fibers should disentangle.

"In our study, we looked at how this process is affected by pH. We believe that the high net charge of the protein at pH 6 creates electrostatic repulsions that prevent the protein from assembling into fibers and further into hydrogels, while at higher pH where there would be less electrostatic repulsion, the protein is able to assemble into fibers that can then undergo gelation."

CO2 doping of organic interlayers for perovskite solar cells

The team reporting on this research was led by André D. Taylor, a professor of chemical and biomolecular engineering at NYU Tandon, and post-doctoral associate Jaemin Kong.

Perovskite solar cells have progressed in recent years with rapid increases in power conversion efficiency (from 3% in 2006 to 25.5% today), making them more competitive with silicon-based photovoltaic cells. However, a number of challenges remain before they can become a competitive commercial technology.

One of these challenges involves inherent limitations in the process of p-type doping of organic hole-transporting materials within the photovoltaic cells.

This process, wherein doping is achieved by the ingress and diffusion of oxygen into hole transport layers, is time intensive (several hours to a day), making commercial mass production of perovskite solar cells impractical. The Tandon team, however, discovered a method of vastly increasing the speed of this process through the use of carbon dioxide instead of oxygen.

In perovskite solar cells, doped organic semiconductors are normally required as charge-extraction interlayers situated between the photoactive perovskite layer and the electrodes. The conventional means of doping these interlayers involves adding lithium bis(trifluoromethane)sulfonimide (LiTFSI), a lithium salt, to spiro-OMeTAD, a π-conjugated organic semiconductor widely used as a hole-transporting material in perovskite solar cells. The doping process is then initiated by exposing spiro-OMeTAD:LiTFSI blend films to air and light. Besides being time consuming, this method largely depends on ambient conditions. By contrast, Taylor and his team reported a fast and reproducible doping method that involves bubbling a spiro-OMeTAD:LiTFSI solution with carbon dioxide (CO2) under ultraviolet light.

They found that the CO2 bubbling process rapidly enhanced electrical conductivity of the interlayer by 100 times compared to that of a pristine blend film, which is also approximately 10 times higher than that obtained from an oxygen bubbling process. The CO2 treated film also resulted in stable, high-efficiency perovskite solar cells without any post-treatments.

Lead author Jaemin Kong explained, “Employing the pre-doped spiro-OMeTAD to perovskite solar cells shortens the device fabrication and processing time. Further, it makes cells much more stable as most detrimental lithium ions in spiro-OMeTAD:LiTFSI solution were stabilized to lithium carbonate, created while the doping of spiro-OMeTAD happened during CO2 bubbling process. The lithium carbonates end up being filtered out when we spincast the pre-doped solution onto the perovskite layer. Thus, we could obtain fairly pure doped organic materials for efficient hole transporting layers.”

Moreover, the team found that the CO2 doping method can be used for p-type doping of other π-conjugated polymers, such as PTAA, MEH-PPV, P3HT, and PBDB-T. Taylor said the team is looking to push the boundary beyond typical organic semiconductors used for solar cells.

“We believe that wide applicability of CO2 doping to various π-conjugated organic molecules stimulates research ranging from organic solar cells to OLEDs and OFETs even to thermoelectric devices that all require controlled doping of organic semiconductors,” he said, adding, “Since this process consumes a quite large amount of CO2 gas during the process, it can be also considered for CO2 capture and sequestration study in the future. We are hoping that the CO2 doping technique could be a stepping stone for overcoming existing challenges in organic electronics and beyond.”