Chen Feng


Institute Associate Professor

Chen Feng is an Institute Associate Professor at New York University, Director of the AI4CE Lab, and Founding Co-Director of the NYU Center for Robotics and Embodied Intelligence (CREO). His research focuses on active and collaborative robot perception and robot learning to address multidisciplinary, use-inspired challenges in construction, manufacturing, and transportation. Before joining NYU, he was a research scientist in the Computer Vision Group at Mitsubishi Electric Research Laboratories (MERL) in Cambridge, Massachusetts, where he developed patented algorithms for localization, mapping, and 3D deep learning in autonomous vehicles and robotics. Chen Feng earned his bachelor's degree from Wuhan University in 2010 and his master's and doctoral degrees from the University of Michigan between 2010 and 2015. He is an active contributor to the AI and robotics communities through venues such as CVPR, IEEE RA-L, and ICRA, and he has served as an area chair and associate editor. In 2023, he received the NSF CAREER Award. More information on his research can be found at https://ai4ce.github.io/.

Chen leads the AI4CE lab (pronounced "A-I-force"), whose students come from several departments (CSE, MAE, CUE, ECE) and schools (Tandon and Courant) at NYU. The AI4CE lab conducts multidisciplinary, use-inspired research to develop novel algorithms and systems that let intelligent agents accurately understand, and efficiently interact with, materials and humans in dynamic and unstructured environments. The lab aims to fundamentally advance robotics and AI in areas such as localization, mapping, navigation, mobile manipulation, and scene understanding. This work addresses infrastructure challenges on Earth and beyond, including construction robotics, manufacturing automation, and autonomous vehicles.

Research News

New AI Language-Vision Models Transform Traffic Video Analysis to Improve Road Safety

New York City's thousands of traffic cameras capture endless hours of footage each day, but analyzing that video to identify safety problems and implement improvements typically requires resources that most transportation agencies don't have.

Now, researchers at NYU Tandon School of Engineering have developed an artificial intelligence system that can automatically identify collisions and near-misses in existing traffic video by combining language reasoning and visual intelligence, potentially transforming how cities improve road safety without major new investments.

Published in the journal Accident Analysis and Prevention, the research won New York City's Vision Zero Research Award, an annual recognition of work that aligns with the City's road safety priorities and offers actionable insights. Professor Kaan Ozbay, the paper's senior author, presented the study at the eighth annual Research on the Road symposium on November 19.

The work exemplifies cross-disciplinary collaboration between computer vision experts from NYU's new Center for Robotics and Embodied Intelligence and transportation safety researchers at NYU Tandon's C2SMART center, where Ozbay serves as Director.

By automatically identifying where and when collisions and near-misses occur, the team’s system — called SeeUnsafe — can help transportation agencies pinpoint dangerous intersections and road conditions that need intervention before more serious accidents happen. It leverages pre-trained AI models that can understand both images and text, representing one of the first applications of multimodal large language models to analyze long-form traffic videos.

"You have a thousand cameras running 24/7 in New York City. Having people examine and analyze all that footage manually is untenable," Ozbay said. "SeeUnsafe gives city officials a highly effective way to take full advantage of that existing investment."

"Agencies don't need to be computer vision experts. They can use this technology without the need to collect and label their own data to train an AI-based video analysis model," added NYU Tandon Associate Professor Chen Feng, a co-founding director of the Center for Robotics and Embodied Intelligence, and paper co-author.

Tested on the Toyota Woven Traffic Safety dataset, SeeUnsafe outperformed other models, correctly classifying videos as collisions, near-misses, or normal traffic 76.71% of the time. The system can also identify which specific road users were involved in critical events, with success rates reaching up to 87.5%.

Traditionally, traffic safety interventions are implemented only after accidents occur. By analyzing patterns of near-misses — such as vehicles passing too close to pedestrians or performing risky maneuvers at intersections — agencies can proactively identify danger zones. This approach enables the implementation of preventive measures like improved signage, optimized signal timing, and redesigned road layouts before serious accidents take place.

The system generates “road safety reports” — natural language explanations for its decisions, describing factors like weather conditions, traffic volume, and the specific movements that led to near-misses or collisions.

While the system has limitations, including sensitivity to object tracking accuracy and challenges with low-light conditions, it establishes a foundation for using AI to “understand” road safety context from vast amounts of traffic footage. The researchers suggest the approach could extend to in-vehicle dash cameras, potentially enabling real-time risk assessment from a driver's perspective.

The research adds to a growing body of work from C2SMART that can improve New York City's transportation systems. Recent projects include studying how heavy electric trucks could strain the city's roads and bridges, analyzing how speed cameras change driver behavior across different neighborhoods, developing a “digital twin” that can find smarter routing to reduce FDNY response times, and a multi-year collaboration with the City to monitor the Brooklyn-Queens Expressway for damage-causing overweight vehicles.

In addition to Ozbay and Feng, the paper's authors are:

- Ruixuan Zhang, lead author and a Ph.D. student in transportation engineering at NYU Tandon;
- Beichen Wang and Juexiao Zhang, both graduate students at NYU's Courant Institute of Mathematical Sciences; and
- Zilin Bian, a recent NYU Tandon Ph.D. graduate who is now an assistant professor at Rochester Institute of Technology.

Funding for the research came from the National Science Foundation and the U.S. Department of Transportation's University Transportation Centers Program.

Ruixuan Zhang, Beichen Wang, Juexiao Zhang, Zilin Bian, Chen Feng, Kaan Ozbay, "When language and vision meet road safety: Leveraging multimodal large language models for video-based traffic accident analysis," Accident Analysis & Prevention, Volume 219, 2025, 108077, ISSN 0001-4575, https://doi.org/10.1016/j.aap.2025.108077.

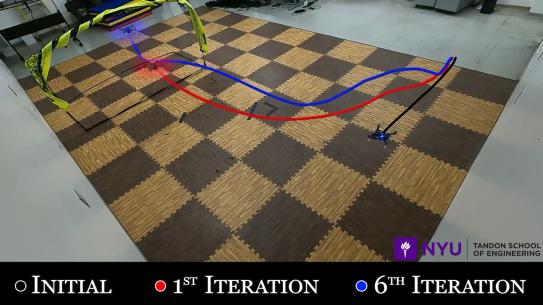

AI-powered and Robot-assisted Manufacturing for Modular Construction

The project will be led by two researchers:

- Semiha Ergan, assistant professor in the Departments of Civil and Urban Engineering and of Computer Science and Engineering; and
- Chen Feng, assistant professor in the Departments of Civil and Urban Engineering and of Mechanical and Aerospace Engineering.

Modular construction has an established record of accelerating projects and reducing costs, and it offers a revolutionary way to transform the construction industry. Performing modular construction at scale, however, requires new construction capabilities. As in other factory settings, the industry suffers from a dependency on skilled labor. The challenges this project aims to address include:

- Every project is unique and requires efficiency and accuracy in recognizing and handling workpieces;

- Design and production-line changes are common and require standardizing and optimizing module designs; and

- Production lines are complex in space and time and require guiding workers while accurately processing design and installation information.

This project explores modular construction within the context of Future Manufacturing (FM). To address these challenges, it exploits opportunities at the intersection of AI, robotics, building information modeling, and manufacturing, with the potential to increase the scalability of modular construction.

The research will pioneer initial formulations to enable:

(a) high manufacturing throughput, by defining and evaluating processes that incorporate real-time semantic grounding of workpieces and in-situ AR-robotic assistance;

(b) feasibility studies on optimizing and standardizing module designs, and on using a cyber-infrastructure to support that standardization;

(c) prototyping of cyber-infrastructures that serve both as novel ways of forming academia-industry partnerships and as data infrastructures that accelerate data-driven adaptation in FM for modular construction; and

(d) synergistic activities with a two-year institution to train and educate the FM workforce on the potential of FM and the technologies evaluated.

The evaluations of the technologies will focus on modular construction. However, the team argues that the proposed technologies could make other manufacturing industries more competitive as well. This applies particularly to heavy manufacturing industries that share similar challenges, such as agriculture, mining, and shipbuilding. The project therefore aims to enhance U.S. competitiveness in production and bolster economic growth. It also aims to educate students and to steer workforce practices toward efficiency and accuracy, building the skills required for leadership in FM.