Award-winning NYU Tandon robotics researchers present an interdisciplinary vision of the future of robotics at ICRA 2022

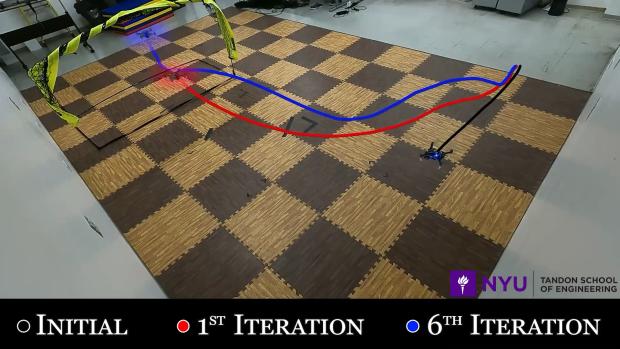

The navigation of flying drones is a prominent feature of the work being presented at ICRA 2022. Here, researchers demonstrate how a machine-learning model can help drones learn to fly more efficiently over several iterations.

NYU Tandon’s robotics researchers have been racking up achievements and recognitions. Giuseppe Loianno recently received a prestigious NSF CAREER Award for boosting the ability of robots to navigate in challenging environments, and S. Farokh Atashzar received an NSF grant to create novel approaches to computational “mega-network” modeling. Now our roboticists are bringing their latest research to Philadelphia for the upcoming 2022 IEEE International Conference on Robotics and Automation (ICRA), the largest and most important international conference on robotics.

The conference, which runs from May 23 to 27, 2022, will be held in person after several years of disruption from the pandemic.

Robotics researchers in NYU Tandon’s departments of electrical and computer engineering, mechanical and aerospace engineering, and civil and urban engineering have six accepted papers and four workshops at the conference, which brings together experts to discuss the most cutting-edge research in robotics and autonomous systems.

The papers the researchers will present at the conference, representing work from four different labs, are a particularly strong academic contribution given the cohort’s size. Like much of their work, the papers reflect Tandon Robotics' focus on areas such as robotics for health, construction, and improved urban living.

Among the works presented are:

- Three papers from the lab of Loianno, including “Learning Model Predictive Control for Quadrotors,” which is one of three finalists for the ICRA 2022 Outstanding Deployed Systems Paper award. This work offers key insights to help flying drones learn while on the job, using machine learning to help them avoid obstacles in cluttered airspace. With flying drones already being deployed to help in areas like logistics, search and rescue for post-disaster response, and other tasks, keeping them agile and safe is a key goal of ongoing research.

Loianno is also presenting “Multi-Robot Collaborative Perception with Graph Neural Networks,” a proposal for a general-purpose Graph Neural Network (GNN) to increase single robots’ perception accuracy, and “Autonomous Single-Image Drone Exploration with Deep Reinforcement Learning and Mixed Reality,” which proposes a model that leverages ground information provided by the simulator environment to speed up learning and enhance the final exploration performance of flying robots.

- Papers from Chen Feng's lab include “A Deep Reinforcement Learning Environment for Particle Robot Navigation and Object Manipulation,” selected as one of the three finalists for the Best Coordination Paper award. Like Loianno’s work, the paper concerns deep-learning techniques to aid autonomous navigation, but Feng’s research focuses on particle robots: small, simple drones that can act together as a “swarm.” These robots, inspired by biological elements like viruses or sea urchins, can only perform simple motions independently (in this case, expanding and contracting their radii) but can collectively move through intense coordination with one another. While these robotic swarms are currently only capable of handcrafted movement paradigms, Feng’s research into deep reinforcement learning (DRL) algorithms can help pave a path to more autonomous, less hands-on control.

Feng is also presenting “Deep Weakly Supervised Positioning for Indoor Mobile Robots,” which proposes a new framework called DeepGPS that can be trained to help flying robots understand their position in a given space without direct supervision.

- Atashzar's lab is presenting the paper “Deep Heterogeneous Dilation of LSTM for Transient-Phase Gesture Prediction Through High-Density Electromyography: Towards Application in Neurorobotics.” A key challenge for brain-machine interfaces like smart prosthetics is estimating how motor neurons intend to act when they receive signals from the brain to stimulate muscles in a particular way. Deep networks have recently been proposed to estimate motor intention using conventional bipolar surface electromyography (sEMG) signals for myoelectric control of neurorobots. This letter recommends Long Short-Term Memory (LSTM), a type of neural network, which shows state-of-the-art performance for gesture prediction in terms of accuracy, training time, and model convergence.

In addition to the papers listed above, Tandon researchers are presenting on a number of topics as part of workshops. Loianno has organized two workshops: one on aerial robotics, the other on parallel robotics and how new robots, mechanisms and AI will shape the future of the field. Feng has organized “Future of Construction,” a deep look at how robotics will come to define the future of the $10 billion construction industry. Atashzar’s “Human-Centered Autonomy in Medical Robotics” explores a future horizon of healthcare where autonomous agents are deployed to automate several aspects of care delivery under the high-level and possibly remote supervision of a care provider. And Ludovic Righetti will be participating in “Reinforcement Learning for Contact-Rich Manipulation.” This panel tackles tasks involving complex contact dynamics and friction, where the related physical effects are difficult to model and traditional control methods therefore often result in inaccurate or brittle controllers.