Research News

True or false: studying work practices of professional fact-checkers

This research included Nasir Memon of NYU Tandon and the NYU Center for Cybersecurity, Nicholas Micallef of the NYU Abu Dhabi Center for Cyber Security, and researchers from Indiana University Bloomington and the University of Utah.

Online misinformation is a critical societal threat. While fact-checking plays a role in combating the exponential rise of misinformation, little empirical research has been done on the work practices of professional fact-checkers and fact-checking organizations.

Existing research has covered fact-checking practitioners' views, the effectiveness of fact-checking efforts, and professional and user practices for responding to political claims. While researchers are beginning to investigate the challenges of fact-checking, such efforts typically focus on traditional media outlets rather than independent fact-checking organizations (e.g., PolitiFact). Nor has such research yet examined the entire misinformation landscape, including how the outcomes of fact-checking work are disseminated.

To address these shortcomings, a team including Nasir Memon of NYU Tandon and Nicholas Micallef of NYU Abu Dhabi interviewed 21 professional fact-checkers from 19 countries, covering topics drawn from previous research that analyzed fact-checking from a journalistic perspective. The interviews focused on the fact-checking profession, fact-checking processes and methods, the use of computational tools for fact-checking, and the challenges and barriers that fact-checkers face.

The study, "True or False: Studying the Work Practices of Professional Fact-Checkers," found that most of the fact-checkers feel a social responsibility to correct harmful information as “a service to the public,” and want the outcome of their work to both educate and inform the public. Some fact-checkers mentioned that they hope to contribute to an information ecosystem that provides a “balanced battlefield” for discussing an issue, particularly during elections.

The interviews also revealed that the fact-checking process involves first selecting a claim, contextualizing and analyzing it, consulting data and domain experts, writing up the results and deciding on a rating, and disseminating the report.
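To make the five-stage workflow concrete, here is a minimal Python sketch that models a single fact-check moving through the stages in the order the interviewees described. The names `Stage`, `FactCheck`, `advance`, and `rate` are hypothetical illustrations, not part of any tool described in the study; the sketch only shows that each stage is a distinct hand-off where computational support could plug in.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Stage(Enum):
    """Stages of the fact-checking process, in the order reported in the interviews."""
    SELECT_CLAIM = 1
    CONTEXTUALIZE_AND_ANALYZE = 2
    CONSULT_DATA_AND_EXPERTS = 3
    WRITE_UP_AND_RATE = 4
    DISSEMINATE = 5


@dataclass
class FactCheck:
    claim: str
    stage: Stage = Stage.SELECT_CLAIM
    rating: Optional[str] = None  # hypothetical; rating scales differ between organizations
    completed: List[Stage] = field(default_factory=list)

    def advance(self) -> Stage:
        """Record the current stage as completed and move to the next one."""
        if self.stage is Stage.DISSEMINATE:
            raise ValueError("report has already reached dissemination")
        if self.stage is Stage.WRITE_UP_AND_RATE and self.rating is None:
            raise ValueError("a rating must be decided before dissemination")
        self.completed.append(self.stage)
        self.stage = Stage(self.stage.value + 1)
        return self.stage

    def rate(self, rating: str) -> None:
        """Assign a rating; only allowed while writing up the results."""
        if self.stage is not Stage.WRITE_UP_AND_RATE:
            raise ValueError("ratings are decided while writing up the results")
        self.rating = rating


# Usage: walk one hypothetical claim through the whole pipeline.
check = FactCheck("Example claim")
for _ in range(3):
    check.advance()
check.rate("False")
check.advance()
```

Encoding the order explicitly means a rating cannot be skipped and a report cannot be disseminated twice, which mirrors the end-to-end support the researchers call for.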

Fact-checkers encounter several challenges in achieving their desired impact because current fact-checking work practices are largely manual, ad-hoc, and limited in scale, scope, and reach. As a result, the rate at which misinformation can be fact-checked is much slower than the speed at which it is generated. The research points out the need for unified and collaborative computational tools that empower the human fact-checker in the loop by supporting the entire pipeline of fact-checking work practices from claim selection to outcome dissemination. Such tools could help narrow the gap between misinformation generation and fact-check dissemination by improving the effectiveness, efficiency, and scale of fact-checking work and dissemination of its outcomes.

This research has been supported by New York University Abu Dhabi and Indiana University Bloomington.

Developing design criteria for active green wall bioremediation performance

This research was led by Elizabeth Hénaff, Assistant Professor in the Department of Technology, Culture, and Society, with collaborators from Yale University.

Air pollution “is the biggest environmental risk to [human] health,” according to the World Health Organization. While air-pollution-related deaths are strongly associated with a person’s age and the economic status of their country, poor indoor air quality correlates with health impacts in both developing and developed nations, ranging from transient symptoms such as difficulty concentrating and headaches to chronic, more serious conditions such as asthma and cancer.

Emerging data indicate that mechanical and physicochemical air-handling systems inadequately address common indoor air quality problems, including elevated CO2 levels and volatile organic compounds (VOCs), whose negative impacts on human health compound one another. In this new study, the researchers extend Hénaff's preliminary work suggesting that active plant-based systems may address these challenges.

The researchers investigated relationships between plant species choice, growth media design (hydroponic versus organic), and design-related performance factors such as weight, water content, and the rate of air flow through the growth media. The team studied these variables in relation to CO2 flux under low light levels typical of indoor environments, using a methodology designed to improve on those of previous studies.

The investigators measured changes in CO2 concentration driven by different plant and growth media (organic vs. hydroponic) treatments within a semi-sealed chamber. Across species, hydroponic media produced 61% more photosynthetic leaf area than organic media, while organic media produced 66% more root biomass.
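A common way to turn chamber measurements like these into a CO2 flux is to fit a straight line to concentration over time and scale the slope by the moles of air in the chamber and a reference area. The Python sketch below assumes an ideal-gas conversion, a well-mixed chamber with no leakage correction, and normalization by a single area (leaf area or chamber footprint); the paper's actual procedure for its semi-sealed chamber may differ, and the readings are made up. A negative result means net uptake; a positive one means net release.

```python
from typing import Sequence

R = 8.314  # universal gas constant, J mol^-1 K^-1


def linear_slope(x: Sequence[float], y: Sequence[float]) -> float:
    """Ordinary least-squares slope of y against x."""
    n = len(x)
    if n < 2 or n != len(y):
        raise ValueError("need at least two paired readings")
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    num = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    den = sum((xi - x_mean) ** 2 for xi in x)
    return num / den


def co2_flux(times_s, co2_ppm, volume_m3, area_m2,
             temp_k=298.15, pressure_pa=101_325.0):
    """Net CO2 flux in µmol m^-2 s^-1 (negative = uptake by plants and media).

    One ppm of CO2 is one µmol of CO2 per mol of air, so the slope (ppm/s)
    times the moles of air in the chamber gives µmol of CO2 per second.
    """
    slope_ppm_per_s = linear_slope(times_s, co2_ppm)
    mol_air = pressure_pa * volume_m3 / (R * temp_k)  # ideal gas law: n = PV / RT
    return slope_ppm_per_s * mol_air / area_m2


# Hypothetical readings: CO2 falling 6 ppm per minute in a 0.5 m^3 chamber.
flux = co2_flux([0, 60, 120, 180], [800, 794, 788, 782],
                volume_m3=0.5, area_m2=0.25)
```

Fitting a slope to all readings, rather than differencing the first and last, makes the estimate less sensitive to a single noisy sensor value.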

The results point to two critical considerations. First, growth media selection should be treated as a primary design criterion, with potentially significant implications for the ultimate CO2 balance and biological function of installations, especially in relation to patterns of plant development and water availability. Second, increases in CO2 concentration when active air flow begins and during early plant development may need to be accounted for if the measured patterns of CO2 flux are found to persist at scale. For active air flow systems that bioremediate indoor air pollutants (CO2 included), the relative rates of CO2 production and sequestration, as they relate to potential VOC remediation rates, become critical both for short-term indoor air quality and for heating, ventilation, and air-conditioning (HVAC) energy use.

Phoebe Mankiewicz, Aleca Borsuk, Christina Ciardullo, Elizabeth Hénaff, Anna Dyson; Developing design criteria for active green wall bioremediation performance: Growth media selection shapes plant physiology, water and air flow patterns; Energy and Buildings; Volume 260, 2022

Stochastic modeling of solar irradiance during hurricanes

Authors of this research, led by Luis Ceferino, professor of civil and urban engineering and member of the Center for Urban Science and Progress, include Ning Lin and Dazhi Xi of Princeton University.

Despite solar power’s growing importance for electricity generation, few studies have proposed models to assess solar generation during extreme natural events. Hurricanes in particular bring environmental conditions that can drastically reduce solar generation even if solar infrastructure remains fully functional.

In a new paper, the researchers present a stochastic model to quantify irradiance decay during hurricanes, using historical Global Horizontal Irradiance (GHI) data and 22 landfalling Atlantic storms in the North American basin that reached at least Category 3 during their lifetime. The data showed greater irradiance decay for higher hurricane categories and closer to the hurricane center, because optically thick clouds absorb and reflect sunlight.

Specifically, their model describes irradiance decay as a function of hurricane category and of the distance to the hurricane center normalized by hurricane size. Their analysis, which examined and ranked the performance of four irradiance decay functions of varying complexity, shows that the radius of the hurricane’s outermost closed isobar performs best as the size metric for normalizing distance.
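The normalization idea can be illustrated with a short Python sketch. Here irradiance decay is taken as the fractional drop in GHI relative to clear-sky conditions, distance is divided by the radius of the outermost closed isobar (R_OCI), and a Gaussian-shaped curve with a small random perturbation stands in for the stochastic model. The functional form, the per-category peak values in `PEAK_DECAY`, the `width` and `sigma` parameters, and all function names are hypothetical placeholders, not the fitted functions from the paper; they only encode the qualitative finding that decay grows with category and near the storm center.

```python
import math
import random

# Hypothetical peak decay at the storm center by hurricane category (illustrative only).
PEAK_DECAY = {1: 0.4, 2: 0.5, 3: 0.6, 4: 0.7, 5: 0.8}


def normalized_distance(distance_km: float, r_oci_km: float) -> float:
    """Distance to the hurricane center divided by the outermost-closed-isobar radius."""
    if r_oci_km <= 0:
        raise ValueError("R_OCI must be positive")
    return distance_km / r_oci_km


def mean_decay(distance_km: float, r_oci_km: float, category: int,
               width: float = 0.6) -> float:
    """Expected fractional GHI reduction: largest at the center, fading with r / R_OCI."""
    r_star = normalized_distance(distance_km, r_oci_km)
    return PEAK_DECAY[category] * math.exp(-(r_star / width) ** 2)


def sample_decay(distance_km, r_oci_km, category, rng, sigma=0.05):
    """One random draw of the decay, clipped to the physically valid range [0, 1]."""
    d = rng.gauss(mean_decay(distance_km, r_oci_km, category), sigma)
    return min(1.0, max(0.0, d))


def hurricane_ghi(clear_sky_ghi, distance_km, r_oci_km, category, rng):
    """Sampled GHI (W/m^2) at a site, given its clear-sky GHI."""
    return clear_sky_ghi * (1.0 - sample_decay(distance_km, r_oci_km, category, rng))


rng = random.Random(42)  # seeded so the sketch is reproducible
ghi = hurricane_ghi(800.0, distance_km=100.0, r_oci_km=400.0, category=4, rng=rng)
```

Dividing by R_OCI puts large and small storms on a common scale: 100 km from the center of a compact storm and of a sprawling one are not comparable, but a quarter of each storm's radius is.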

To showcase the methodology’s applicability, they used it to generate spatiotemporal models of irradiance during storms from genesis to dissipation, based on probable storm behavior in 839 counties in the southern United States. Among the results: solar generation in Miami-Dade County, Florida, can drop by more than 70% across large areas during a Category 4 hurricane, even if the solar infrastructure is undamaged.

They also found that generation losses can last more than three days, and that this period would lengthen if solar panels were damaged and became non-functional. The team plans a follow-up study integrating the proposed model with panel fragility functions to forecast time-varying solar generation during hurricanes.

A chance-constrained dial-a-ride problem with utility-maximising demand and multiple pricing structures

The classic Dial-A-Ride Problem (DARP) seeks the minimum-cost routing that accommodates a set of user requests under constraints at the operations planning level, and it often overlooks users’ preferences and revenue management.

Researchers at NYU Tandon, including Joseph Chow, professor of civil and urban engineering and Deputy Director of the C2SMART Tier 1 University Transportation Center, have proposed a way to address these gaps. In a paper in Elsevier’s Transportation Research, they present a mechanism for accepting or rejecting user requests in a Demand Responsive Transportation (DRT) context based on the representative utilities of alternative transportation modes. They consider utility-maximising users and propose a mixed-integer programming formulation for a Chance-Constrained DARP (CC-DARP) that captures users’ preferences.
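One way to read "accepting or rejecting requests based on the utilities of alternative modes" is through a random-utility choice model. The Python sketch below assumes a multinomial logit model (systematic utility plus i.i.d. Gumbel noise), under which the probability that a user chooses DRT has a closed form, and accepts a request only if that probability is at least 1 - alpha. The logit assumption, the linear utility specification, the coefficient values, and all function names are hypothetical; the paper's CC-DARP embeds its chance constraints in a mixed-integer program, which this standalone check does not reproduce.

```python
import math
from typing import Sequence


def systematic_utility(travel_min: float, fare: float, asc: float = 0.0,
                       beta_time: float = 0.05, beta_cost: float = 0.3) -> float:
    """Hypothetical linear utility: a mode constant minus time and cost penalties."""
    return asc - beta_time * travel_min - beta_cost * fare


def logit_probability(v_drt: float, v_alternatives: Sequence[float]) -> float:
    """Multinomial-logit probability that the user chooses DRT over the alternatives."""
    utilities = [v_drt, *v_alternatives]
    m = max(utilities)  # subtract the max before exponentiating for numerical stability
    weights = [math.exp(u - m) for u in utilities]
    return weights[0] / sum(weights)


def accept_request(v_drt: float, v_alternatives: Sequence[float],
                   alpha: float = 0.1) -> bool:
    """Chance constraint: accept only if P(user chooses DRT) >= 1 - alpha."""
    return logit_probability(v_drt, v_alternatives) >= 1.0 - alpha


# Hypothetical trip: DRT versus public transit and a private car.
v_drt = systematic_utility(travel_min=20, fare=6.0, asc=1.0)
v_transit = systematic_utility(travel_min=45, fare=2.9)
v_car = systematic_utility(travel_min=25, fare=12.0)
```

For this made-up trip the DRT choice probability is about 0.76, so the request is rejected at alpha = 0.1 but accepted at alpha = 0.3. A smaller alpha makes the operator commit only to requests users are very likely to take, at the cost of serving fewer of them.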

They further introduce class-based user groups, consider various pricing structures for DRT services, and develop a local search-based heuristic and a matheuristic to solve the proposed CC-DARP. The study reports numerical results for both DARP benchmarking instances and a realistic case study based on New York City yellow taxi trip data. Computational experiments on 105 benchmarking instances with up to 96 nodes yielded average profit gaps of 2.59% and 0.17% for the local search heuristic and the matheuristic, respectively.

Based on the case study, the work suggests that a zonal fare structure is the best strategy for optimizing revenue and ridership. The CC-DARP formulation provides a new decision-support tool to inform revenue and fleet management for DRT systems at the strategic planning level.
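The kinds of pricing structures being compared can be contrasted in a few lines. This Python sketch defines flat, distance-based, and zonal fares for a single trip; the `Trip` type, the integer zone numbering, and all base fares and rates are hypothetical, and the paper's actual fare definitions and class-based user groups are not modeled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trip:
    origin_zone: int   # hypothetical integer zone IDs, numbered outward from the center
    dest_zone: int
    distance_km: float


def flat_fare(trip: Trip, base: float = 3.0) -> float:
    """Same price for every trip."""
    return base


def distance_fare(trip: Trip, base: float = 2.0, per_km: float = 0.8) -> float:
    """Price grows linearly with trip distance."""
    return base + per_km * trip.distance_km


def zonal_fare(trip: Trip, base: float = 2.5, per_zone: float = 1.5) -> float:
    """Price depends only on how many zone boundaries the trip crosses."""
    return base + per_zone * abs(trip.dest_zone - trip.origin_zone)


trip = Trip(origin_zone=1, dest_zone=3, distance_km=5.0)
fares = {f.__name__: f(trip) for f in (flat_fare, distance_fare, zonal_fare)}
```

Under a zonal structure, trips within one zone pay the same regardless of distance, while cross-zone trips pay more; the paper's conclusion that zonal fares perform best comes from its own optimization on the NYC case study, not from a comparison like this one.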

Co-authors of the study are Xiaotong Dong and Travis Waller of the School of Civil and Environmental Engineering, University of New South Wales, Australia; and David Rey of the SKEMA Business School, Université Côte d’Azur, France.

Transit Network Frequency Setting With Multi-Agent Simulation to Capture Activity-Based Mode Substitution

Despite the emergence of many new mobility options in cities around the world, fixed-route transit is still the most efficient means of mass transport. Bus operations are subject to both vicious and virtuous cycles, and evidence of this can be seen in New York City.

Since 2007, slower travel speeds and increased congestion, as more mobility options compete for road space, have led to a vicious cycle: ridership falls and congestion rises further as former transit passengers switch to other, less congestion-efficient modes. In Brooklyn, bus ridership declined by 21% over this period. While the decrease has been steady, there is emerging concern that it will only get worse as for-hire vehicle services like Uber and Lyft add more trips to the road network.

Intervention in the form of network redesign is required to promote a virtuous cycle and make the bus more competitive, especially in the face of increased competition from ride-hail services. This can be done by redesigning the bus network in a way that reduces operating and user costs while increasing accessibility for more riders.

Now, Joseph Chow, professor of civil and urban engineering at the NYU Tandon School of Engineering and a member of the C2SMART Tier 1 University Transportation Center at NYU Tandon, and doctoral student Ziyi Ma propose a simulation-based transit network design model for bus frequency planning in large-scale transportation networks with activity-based behavioral responses. They apply the model to evaluate the existing Brooklyn bus network and another group's proposed network redesign, and use it to develop an alternative design based on their methodology.
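Frequency setting trades operator cost against rider cost, and two textbook relationships capture the basic tension: the number of buses a route needs is its round-trip time divided by the headway (rounded up), and with random passenger arrivals and perfectly regular service the average wait is half the headway. The Python sketch below encodes only these relationships, with a hypothetical 90-minute route and hypothetical function names; it is not the MATSim-NYC model, which simulates how travelers change their activities and modes in response.

```python
import math


def headway_min(buses_per_hour: int) -> float:
    """Minutes between consecutive buses at a given frequency."""
    if buses_per_hour <= 0:
        raise ValueError("frequency must be positive")
    return 60.0 / buses_per_hour


def required_fleet(round_trip_min: int, buses_per_hour: int) -> int:
    """Buses needed to sustain the frequency: ceil(round-trip time / headway)."""
    if buses_per_hour <= 0:
        raise ValueError("frequency must be positive")
    # Written as round_trip * f / 60 to avoid dividing by a rounded headway.
    return math.ceil(round_trip_min * buses_per_hour / 60)


def expected_wait_min(buses_per_hour: int) -> float:
    """Average wait assuming random arrivals and evenly spaced buses (half the headway)."""
    return headway_min(buses_per_hour) / 2


# Hypothetical route with a 90-minute round trip, at several candidate frequencies.
plan = {f: (required_fleet(90, f), expected_wait_min(f)) for f in (4, 6, 8, 12)}
```

Doubling the frequency from 6 to 12 buses per hour halves the average wait (5 to 2.5 minutes) but doubles the fleet (9 to 18 buses), which is why a network design model has to decide where extra service pays off in ridership.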

With some calibration, the MATSim-NYC model designed by Chow and Ma reproduces patterns similar to those of the existing Brooklyn bus network. The model confirmed that another group's plan would increase ridership, and was also able to refine that plan further. The increased ridership is drawn primarily from passenger car use (nearly 75%), with a small 2.5% drawn from ride-hail services and another 5% from taxis. This suggests the redesigns should be effective in moving people away from less efficient transportation modes.

The researchers are now looking to further refine their model so that it can become a key tool for policymakers planning the future of transportation in the city.

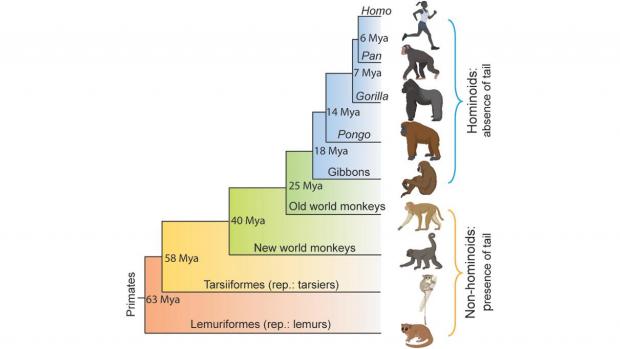

The genetic basis of tail-loss evolution in humans and apes

NYU researchers at the Tandon School of Engineering and the Grossman School of Medicine are trying to understand an age-old question that has bedeviled most of us at some point: Why do all the other animals have tails, but not me? The loss of the tail is one of the main anatomical evolutionary changes to have occurred along the lineage leading to humans and to the “anthropomorphous apes.” The loss of tails has long been thought to have played a key role in the evolution of bipedalism in humans.

This curiosity-based question was addressed by using bioinformatics tools to look at differences between the genomes of humans (and the other apes, which all lack tails) and monkeys (which all have tails, like most other mammals).

Bo Xia, a PhD candidate studying this problem in the labs of Jef Boeke and Itai Yanai, examined sequence alignments of all genes known to be involved in tail development and discovered a movable piece of DNA called a retrotransposon inserted in the TBXT gene, a developmental regulator crucial for tail development. The insertion had not been spotted before because it sits in noncoding (intron) DNA, where most researchers would not look for mutations.

Examination of the gene, which carries other copies of the Alu retrotransposon, led to a model for how the Alu insertion might dysregulate splicing of TBXT RNA. The researchers engineered a mouse model to test this hypothesis and found that, indeed, many mice with a suitably altered genome lacked a tail. The tailless mice also suffered from spinal cord malformations. It is possible that our ancestors who lost their tails also had this side effect, which may contribute to some health problems even today.

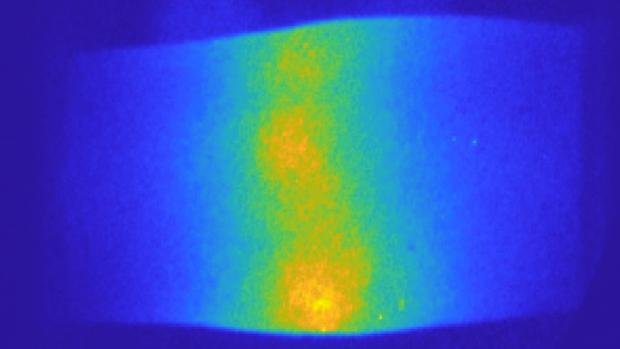

Evaluation of lupus arthritis using frequency domain optical imaging

Systemic lupus erythematosus (SLE), commonly referred to simply as “lupus,” is an autoimmune disorder in which the body’s immune system attacks healthy tissue. Lupus affects between 20 and 150 people per 100,000, with variation among racial and ethnic groups. The disease often causes arthritic symptoms in the joints, which can be debilitating in some cases.

Despite the severity of the disease, identifying lupus arthritis and assessing its activity remain challenging in clinical practice. Evaluations based on traditional joint examination lack precision because they are subjective, and their accuracy suffers in situations such as obese digits and coexisting fibromyalgia. As a result, these examinations have limited ability to provide quantitative data on improvement or worsening.

Recently, imaging technologies, especially ultrasound (US) and magnetic resonance imaging (MRI), have enabled more objective and detailed assessment of articular and periarticular abnormalities with higher sensitivity. However, MRI and US are expensive and time-consuming, and US has been found to be highly operator-dependent. As a result, neither modality is routinely used in practice. There is a clear unmet need for a simple, reliable, non-invasive, and low-cost imaging modality that can objectively assess and monitor the progression of arthritis in patients with lupus.

Now, researchers at NYU Tandon in collaboration with Columbia University are exploring optical imaging technology as a reliable way to diagnose patients and assess the progression of the disease. The researchers, including Research Assistant Professor Alessandro Marone and Chair of the Biomedical Engineering Department Andreas H. Hielscher, found that frequency domain optical imaging could reliably identify lupus arthritis, and could be used to track how the disease progressed.

The results provide strong evidence that frequency-domain optical imaging could offer objective, accurate insights into SLE that were not possible or economically feasible with other technologies. The diffuse-light measurements identified inflammation in the blood vessels around the joints, similar to but distinct from the changes caused by rheumatoid arthritis. With this technology, caregivers may not have to rely on patient feedback to track the progression of lupus, but can observe it directly.

Optical imaging methods have been used in studies comparing osteoarthritis, rheumatoid arthritis (RA), and healthy controls. Those studies found that patients with RA have higher light absorption in the joint space than healthy subjects, likely because inflammatory synovial fluid decreases light transmission through the inflamed joints. However, these observations had never before been applied to lupus, and the new findings could provide a reliable, rapid, and cost-effective method of assessing joint involvement in lupus patients.
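In frequency-domain optical imaging, the light source is intensity-modulated (typically at radio frequencies), and the instrument measures how much the modulated amplitude is attenuated and how far its phase is shifted after the light diffuses through tissue; reconstruction algorithms use these quantities to estimate absorption and scattering. The Python sketch below shows a generic in-phase/quadrature demodulation on synthetic, uniformly sampled data. The sampling scheme, signal values, and function names are hypothetical, and this is not the processing chain of the NYU and Columbia system.

```python
import math
from typing import Sequence, Tuple


def demodulate(samples: Sequence[float], samples_per_period: int) -> Tuple[float, float, float]:
    """Return (DC level, AC amplitude, phase in radians) of a sampled modulated signal.

    Assumes uniform sampling over a whole number of modulation periods, so the
    sine and cosine reference waves are orthogonal to each other and to the DC term.
    """
    n = len(samples)
    if samples_per_period < 3 or n == 0 or n % samples_per_period:
        raise ValueError("need >= 3 samples per period and whole periods")
    dc = sum(samples) / n
    in_phase = 2.0 / n * sum(
        s * math.cos(2 * math.pi * k / samples_per_period) for k, s in enumerate(samples))
    quadrature = 2.0 / n * sum(
        s * math.sin(2 * math.pi * k / samples_per_period) for k, s in enumerate(samples))
    amplitude = math.hypot(in_phase, quadrature)
    phase = math.atan2(-quadrature, in_phase)  # signal modeled as A*cos(wt + phase)
    return dc, amplitude, phase


def synthetic_signal(dc, amplitude, phase, samples_per_period=16, periods=10):
    """Hypothetical detector trace: a DC offset plus a modulated cosine."""
    return [dc + amplitude * math.cos(2 * math.pi * k / samples_per_period + phase)
            for k in range(samples_per_period * periods)]


# Made-up reference (source) and transmitted (after tissue) signals.
ref = demodulate(synthetic_signal(2.0, 1.0, 0.0), 16)
tissue = demodulate(synthetic_signal(0.8, 0.25, -0.4), 16)
ac_attenuation = tissue[1] / ref[1]  # fraction of the modulated amplitude that survives
phase_shift = tissue[2] - ref[2]     # radians; negative values indicate a delay
```

Because amplitude and phase respond differently to absorption and scattering, measuring both at a known modulation frequency gives more information than a steady (DC) intensity measurement alone.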

Mobility in post-pandemic economic reopening under social distancing guidelines: Congestion, emissions, and contact exposure in public transit

The COVID-19 pandemic has raised new challenges for urban transportation: “back to the office” policies, staggered teleworking hours, and social distancing requirements on public transit may exacerbate traffic congestion and emissions due to shifts in travel modes and behaviors.

A team of C2SMART members Ding Wang, Yueshuai Brian He, Jingqin Gao, Joseph Chow, and Kaan Ozbay, together with researchers from Cornell University, proposed a simulation tool for evaluating trade-offs among traffic congestion, emissions, and COVID-19 spread mitigation policies that affect travel behavior. Their research was published in the November 2021 volume of Transportation Research Part A.

Using New York City as a case study, the team used open-source agent-based simulation models to evaluate transportation system usage, and applied Post Processing Software for Air Quality (PPS-AQ) estimation to evaluate the air quality impacts of pandemic-related transportation changes.

The research also estimated system-wide contact exposure, finding that social distancing requirements on public transit are effective in reducing exposure but worsen congestion and emissions within Manhattan and in neighborhoods around transit and commercial hubs.
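A system-wide contact exposure measure can be built from the kind of trip records an agent-based simulation produces. The Python sketch below assumes a hypothetical log of (vehicle, rider, boarding time, alighting time) records and sums, over every pair of riders sharing a vehicle, the minutes they overlap; the paper's actual exposure metric may be defined differently, and the `Ride` format and `contact_exposure` function are illustrative names only. Occupancy caps from social distancing would show up here as fewer overlapping pairs per vehicle.

```python
from collections import defaultdict
from itertools import combinations
from typing import Iterable, Tuple

# (vehicle_id, rider_id, board_min, alight_min): a hypothetical record format.
Ride = Tuple[str, str, float, float]


def contact_exposure(rides: Iterable[Ride]) -> float:
    """Total rider-pair minutes of co-presence in the same vehicle."""
    riders_by_vehicle = defaultdict(list)
    for vehicle, rider, board, alight in rides:
        riders_by_vehicle[vehicle].append((rider, board, alight))

    total = 0.0
    for riders in riders_by_vehicle.values():
        # Compare every pair of riders on this vehicle (quadratic, fine for a sketch).
        for (r1, b1, a1), (r2, b2, a2) in combinations(riders, 2):
            if r1 == r2:
                continue  # the same person on two separate trips is not a contact
            overlap = min(a1, a2) - max(b1, b2)
            if overlap > 0:
                total += overlap
    return total


sample_rides = [
    ("bus_1", "A", 0, 10),
    ("bus_1", "B", 5, 15),   # shares minutes 5 to 10 with A
    ("bus_1", "C", 20, 30),  # boards after A and B have left
    ("bus_2", "D", 0, 10),   # alone on a different vehicle
]
exposure = contact_exposure(sample_rides)
```

For a city-scale simulation, a sweep over sorted boarding and alighting events per vehicle would avoid the pairwise comparison, but the quantity being summed is the same.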

The proposed integrated traffic simulation and air quality estimation models may help policymakers evaluate the impact of policies on traffic congestion and emissions, and identify transportation “hot spots” both temporally and spatially.

The research was conducted with the support of the C2SMART University Transportation Center, and funded in part by the 55606–08-28 UTRC-September 11th grant as well as a grant from the U.S. Department of Transportation’s University Transportation Centers Program.

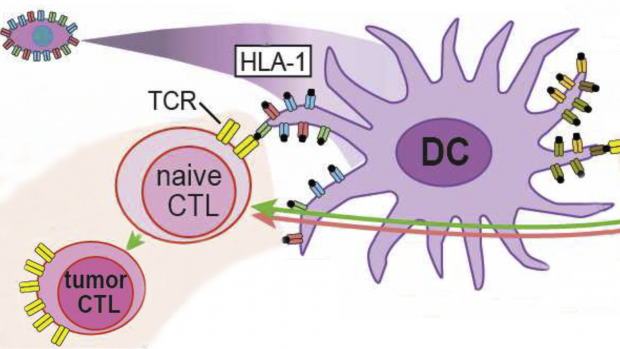

Programmable off-the-shelf dendritic cells as an immunotherapy discovery platform

A new therapeutic era has been ushered in with Adoptive Cell Immunotherapy, which uses patient-harvested T cells genetically engineered against tumor-specific targets. However, the number of addressable cancers is limited by a lack of tumor-specific targets and T cell receptors.

A team led by David M. Truong, Ph.D., assistant professor of biomedical engineering, has been awarded a $1.8 million grant from the National Institute of Allergy and Infectious Diseases (NIAID) under the NIH Director’s New Innovator program, to address this issue by expanding the number of human leukocyte antigen (HLA) restricted T cell receptors (TCRs) and tumor-specific antigens (TSAs), thus transforming the entire immunotherapy pipeline.

To accomplish this, Truong proposes generating programmable dendritic cells (DCs), which are professional antigen-presenting cells that mature and activate naive T cells. These programmable DCs would facilitate the discovery of new TCRs and the validation of TSAs, and could even be used as “living” vaccines to marshal a patient’s own immune system against infectious disease and cancer.

Truong specifically hopes to produce off-the-shelf DCs that are pre-engineered to match any HLA haplotype, including rarer ones, and pre-encoded with any combination of TSAs. His group will search for and validate “universal” TSAs and TCRs that can be used broadly in TCR therapy for any patient.

In particular, the group will focus on peptides expressed from regions of the genome that are normally epigenetically silenced but are reactivated in tumor cells, such as endogenous retroviruses. To support this, the group will continue to develop technology that enables the “writing” of millions of DNA base pairs in human induced pluripotent stem cells (iPSCs). Such technology allows direct customization of the large HLA locus in iPSCs in a single step, the introduction of libraries of potential TSAs, and the integration of synthetic reporter constructs for safer and more scalable directed differentiation of iPSCs into DCs.

This research on allogeneic programmed DCs may catalyze a wide variety of immunotherapy applications and expand access to advanced treatments for a greater number of patients.

NYC Future Manufacturing Collective

Extensive use of sensors, computers and software tools in product design and manufacturing requires traditional manufacturing education to evolve for the new generation of cyber-manufacturing systems. While universities will continue to provide education to build a fundamental knowledge base for their students, the widening gap between the education delivered and the skills required by industry needs innovative solutions to prepare the workforce for future generations of manufacturing.

The New York City Future Manufacturing Collective (NYC-FMC) will develop a network of multidisciplinary researchers, educators, and stakeholders in New York City to explore future cyber manufacturing research through the lens of the worker's relationship to an increasingly complex and technologically driven environment and set of processes. The NYC-FMC will advance related technologies as well as the underlying systems, processes, and organizational conditions to which these interfaces are connected, to change and drive the roles of people in manufacturing.

NYC-FMC will organize a variety of activities, including an internship program that gives students exposure to industrial environments by engaging major manufacturing-based corporations, a newsletter defining the state of the art in manufacturing technologies and the new manufacturing ecosystem, and two manufacturing-focused symposia each year. The program will build a coalition of multidisciplinary faculty from NYC universities, industry executives and technologists, investors, entrepreneurs, the public sector, and other relevant manufacturing ecosystem participants.

The NYC-FMC will take a convergence approach to generate novel ideas, frameworks, and hypotheses to catalyze future research, partnerships, and industrial innovation in manufacturing and cyber-physical systems. Executives and technologists from industry, including large manufacturing concerns with a connection to the greater NYC region and beyond and startup companies in the Brooklyn Navy Yard’s New Lab, will provide stimulus from the private sector and help create conditions to advance education and research goals. This coalition will build a novel education and workforce training program framework to create a learning and feedback loop between researchers, industry partners, and the workforce focused on the future cyber-manufacturing systems.

This award reflects NSF's statutory mission and has been deemed worthy of support through evaluation using the Foundation's intellectual merit and broader impacts review criteria.