Yao Wang, Ph.D.

Professor

Yao Wang is a recognized expert in video coding, networked video applications, medical imaging, and computer vision.

She joined the faculty of Polytechnic Institute of New York University in 1990 as an assistant professor and became an associate professor in 1996 and a professor in 2000. She holds a joint appointment in the Department of Electrical and Computer Engineering and the Department of Biomedical Engineering, both in NYU Tandon School of Engineering, and has an affiliated appointment in the Radiology Department of NYU School of Medicine. She was the Speaker for the NYU Tandon Faculty from Sept. 2017 to Aug. 2019. She has been the Associate Dean for Faculty Affairs at NYU Tandon since June 2019.

Wang received her Bachelor of Science and Master of Science in Electronic Engineering from Tsinghua University in Beijing, China in 1983 and 1985, respectively, and a PhD in Electrical and Computer Engineering from the University of California at Santa Barbara in 1990.

She authored the well-known textbook Video Processing and Communications, along with numerous book chapters and journal articles. She has also served as an associate editor of the Institute of Electrical and Electronics Engineers (IEEE) Transactions on Multimedia and Transactions on Circuits and Systems for Video Technology.

Wang was elected a Fellow of the IEEE in 2004 for her contributions to video processing and communications. She received the New York City Mayor’s Award for Excellence in Science and Technology, Young Investigator Category, in 2000; the IEEE Communications Society Leonard G. Abraham Prize Paper Award in the Field of Communications Systems in 2004; and the IEEE Communications Society Multimedia Communication Technical Committee Best Paper Award in 2011. She received the Overseas Outstanding Young Investigator Award from the Natural Science Foundation of China in 2005 and was named the Yangtze River Lecture Scholar by the Ministry of Education in China in 2007. She was a keynote speaker at the 2010 International Packet Video Workshop and at the 2018 Picture Coding Symposium. She received the Distinguished Teacher Award of NYU Tandon School of Engineering in 2016. She has many past and ongoing research projects funded by the National Science Foundation and the National Institutes of Health.

Her research is supported in part by NYU WIRELESS.

Education

Tsinghua University, Beijing, P.R. China, 1983

Bachelor of Science, Electrical Engineering

Tsinghua University, Beijing, P.R. China, 1985

Master of Science, Electrical Engineering

University of California at Santa Barbara, 1990

Doctor of Philosophy, Electrical Engineering

Experience

AT&T Labs - Research, NJ

Part-time Consultant

From: January 1992 to December 2000

New York University (formerly Polytechnic Institute of NYU, Polytechnic University, Brooklyn Polytechnic Institute)

Professor

From: June 1990 to present

Publications

Authored/Edited Books

Yao Wang, Jörn Ostermann, and Ya-Qin Zhang, Video Processing and Communications, Prentice Hall, 2002 (published September 2001), ISBN 0-13-017547-1.

Research News

3D streaming gets leaner by seeing only what matters

A new approach to streaming technology may significantly improve how users experience virtual reality and augmented reality environments, according to a study from NYU Tandon School of Engineering.

The research — presented in a paper at the 16th ACM Multimedia Systems Conference on April 1, 2025 — describes a method for directly predicting visible content in immersive 3D environments, potentially reducing bandwidth requirements by up to 7-fold while maintaining visual quality.

The technology is being applied in an ongoing NYU Tandon National Science Foundation-funded project to bring point cloud video to dance education, making 3D dance instruction streamable on standard devices with lower bandwidth requirements.

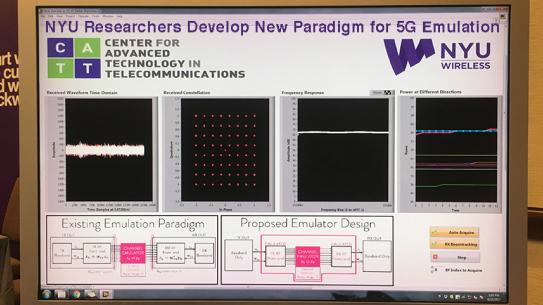

"The fundamental challenge with streaming immersive content has always been the massive amount of data required," explained Yong Liu — professor in the Electrical and Computer Engineering Department (ECE) at NYU Tandon and faculty member at both NYU Tandon's Center for Advanced Technology in Telecommunications (CATT) and NYU WIRELESS — who led the research team. "Traditional video streaming sends everything within a frame. This new approach is more like having your eyes follow you around a room — it only processes what you're actually looking at."

The technology addresses the "Field-of-View (FoV)" challenge for immersive experiences. Current AR/VR applications demand high bandwidth — a point cloud video (which renders 3D scenes as collections of data points in space) consisting of 1 million points per frame requires more than 120 megabits per second, nearly 10 times the bandwidth of standard high-definition video.
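The figures quoted above can be related with some back-of-the-envelope arithmetic. The sketch below is illustrative only: the 30 frames-per-second rate is an assumption not stated in the article, and applying the "up to 7-fold" reduction to the 120 Mbps example is likewise an assumption about a best case, not a reported result.

```python
# Figures quoted in the article.
POINT_CLOUD_MBPS = 120.0       # "more than 120 megabits per second" (used as a lower bound)
POINTS_PER_FRAME = 1_000_000   # "1 million points per frame"
HD_RATIO = 10.0                # "nearly 10 times" standard HD video
MAX_REDUCTION = 7.0            # "up to 7-fold" bandwidth reduction

# Assumption (not stated in the article): 30 frames per second.
ASSUMED_FPS = 30


def implied_hd_mbps(point_cloud_mbps=POINT_CLOUD_MBPS, ratio=HD_RATIO):
    """HD video bitrate implied by the 'nearly 10 times' comparison."""
    return point_cloud_mbps / ratio


def reduced_mbps(point_cloud_mbps=POINT_CLOUD_MBPS, reduction=MAX_REDUCTION):
    """Best-case bitrate if the full 7-fold reduction applied to this example."""
    return point_cloud_mbps / reduction


def bits_per_point(mbps=POINT_CLOUD_MBPS, points=POINTS_PER_FRAME, fps=ASSUMED_FPS):
    """Average bits spent per point under the assumed frame rate."""
    return mbps * 1e6 / (points * fps)


if __name__ == "__main__":
    print(f"Implied HD bitrate:      {implied_hd_mbps():.1f} Mbps")
    print(f"Best-case reduced rate:  {reduced_mbps():.1f} Mbps")
    print(f"Bits per point @ {ASSUMED_FPS} fps: {bits_per_point():.1f}")
```

Under these assumptions, the best-case reduced rate lands in the same range as the implied high-definition video bitrate.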

Unlike traditional approaches that first predict where a user will look and then calculate what's visible, this new method directly predicts content visibility in the 3D scene. By avoiding this two-step process, the approach reduces error accumulation and improves prediction accuracy.

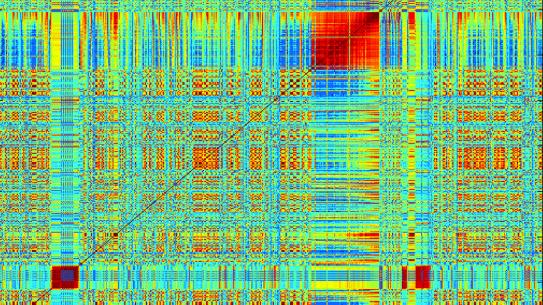

The system divides 3D space into "cells" and treats each cell as a node in a graph network. It uses transformer-based graph neural networks to capture spatial relationships between neighboring cells, and recurrent neural networks to analyze how visibility patterns evolve over time.
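The cell-and-graph idea described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function names, the uniform cubic cells, the `cell_size` parameter, and the six-neighbor (face-adjacent) connectivity are all assumptions, and the transformer-based graph network and recurrent network that would operate on this graph are omitted.

```python
from collections import defaultdict


def cell_index(point, cell_size):
    """Map an (x, y, z) point to the integer index of the cubic cell containing it."""
    return tuple(int(c // cell_size) for c in point)


def build_cell_graph(points, cell_size=1.0):
    """Group points into cells and connect face-adjacent occupied cells.

    Returns (counts, edges): counts maps each occupied cell to its number of
    points; edges maps each occupied cell to a list of its occupied neighbors.
    """
    counts = defaultdict(int)
    for p in points:
        counts[cell_index(p, cell_size)] += 1

    # Assumed connectivity: the six cells sharing a face with a given cell.
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    edges = {cell: [] for cell in counts}
    for (x, y, z) in counts:
        for dx, dy, dz in offsets:
            neighbor = (x + dx, y + dy, z + dz)
            if neighbor in counts:
                edges[(x, y, z)].append(neighbor)
    return dict(counts), edges


if __name__ == "__main__":
    pts = [(0.2, 0.2, 0.2), (0.8, 0.1, 0.3), (1.5, 0.5, 0.5), (5.0, 5.0, 5.0)]
    counts, edges = build_cell_graph(pts, cell_size=1.0)
    for cell in sorted(counts):
        print(cell, "points:", counts[cell], "neighbors:", edges[cell])
```

In a real system, each node would carry features such as point density or past visibility, and a learned model would predict per-cell visibility from the graph. Here the graph only records occupancy and adjacency.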

For pre-recorded virtual reality experiences, the system can predict what will be visible for a user 2-5 seconds ahead, a significant improvement over previous systems that could only accurately predict a user’s FoV a fraction of a second ahead.

"What makes this work particularly interesting is the time horizon," said Liu. "Previous systems could only accurately predict what a user would see a fraction of a second ahead. This team has extended that."

The research team's approach reduces prediction errors by up to 50% compared to existing methods for long-term predictions, while maintaining real-time performance of more than 30 frames per second even for point cloud videos with over 1 million points.

For consumers, this could mean more responsive AR/VR experiences with reduced data usage, while developers can create more complex environments without requiring ultra-fast internet connections.

"We're seeing a transition where AR/VR is moving from specialized applications to consumer entertainment and everyday productivity tools," Liu said. "Bandwidth has been a constraint. This research helps address that limitation."

The researchers released their code to support continued development. Their work was supported in part by the US National Science Foundation (NSF) grant 2312839.

In addition to Liu, the paper's authors are Chen Li and Tongyu Zong, both NYU Tandon Ph.D. candidates in Electrical Engineering; Yueyu Hu, an NYU Tandon Ph.D. candidate in Electrical and Electronics Engineering; and Yao Wang, an NYU Tandon professor who sits in ECE, the Biomedical Engineering Department, CATT and NYU WIRELESS.

C. Li, T. Zong, Y. Hu, Y. Wang, Y. Liu. 2025. Spatial Visibility and Temporal Dynamics: Rethinking Field of View Prediction in Adaptive Point Cloud Video Streaming. In Proceedings of the 16th ACM Multimedia Systems Conference (MMSys '25). Association for Computing Machinery, New York, NY, USA, 24–34.

Resource constrained mobile data analytics assisted by the wireless edge

The National Science Foundation grant for this research was obtained by Siddharth Garg and Elza Erkip, professors of electrical and computer engineering, and Yao Wang, professor of computer science and engineering and biomedical engineering. Wang and Erkip are also members of the NYU WIRELESS research center.

Increasing amounts of data are being collected on mobile and internet-of-things (IoT) devices. Users are interested in analyzing this data to extract actionable information for such purposes as identifying objects of interest from high-resolution mobile phone pictures. The state-of-the-art technique for such data analysis employs deep learning, which makes use of sophisticated software algorithms modeled on the functioning of the human brain. Deep learning algorithms are, however, too complex to run on small, battery-constrained mobile devices. The alternative, i.e., transmitting data to the mobile base station where the deep learning algorithm can be executed on a powerful server, consumes too much bandwidth.

The project that this NSF funding will support seeks to devise new methods to compress data before transmission, thus reducing bandwidth costs while still allowing the data to be analyzed at the base station. Departing from existing data compression methods optimized for reproducing the original images, the team will develop a means of using deep learning itself to compress the data in a fashion that keeps only the parts of the data necessary for subsequent analysis. The resulting deep-learning-based compression algorithms will be simple enough to run on mobile devices while drastically reducing the amount of data that needs to be transmitted to mobile base stations for analysis, without significantly compromising the analysis performance.

The proposed research will provide greater capability and functionality to mobile device users, enable extended battery lifetimes, and support more efficient sharing of the wireless spectrum for analytics tasks. The project also envisions a multi-pronged effort aimed at outreach to communities of interest, educating and training the next generation of machine learning and wireless professionals at the K-12, undergraduate and graduate levels, and broadening participation of under-represented minority groups.

The project seeks to learn "analytics-aware" compression schemes from data, by training low-complexity deep neural networks (DNNs) for data compression that execute on mobile devices and achieve a range of transmission rate and analytics accuracy targets. As a first step, efficient DNN pruning techniques will be developed to minimize the DNN complexity, while maintaining the rate-accuracy efficiency for one or a collection of analytics tasks.

Next, to efficiently adapt to varying wireless channel conditions, the project will seek to design adaptive DNN architectures that can operate at variable transmission rates and computational complexities. For instance, when the wireless channel quality drops, the proposed compression scheme will be able to quickly reduce transmission rate in response while ensuring the same analytics accuracy, but at the cost of greater computational power on the mobile device.
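The rate-versus-computation trade-off described above can be illustrated with a simple selection rule. The sketch below is hypothetical throughout: the `OperatingPoint` type, the three configurations, and all numeric values are invented for illustration and do not come from the project. It shows only the decision logic, namely picking the cheapest configuration whose transmission rate fits the current channel while meeting the accuracy target.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class OperatingPoint:
    """One hypothetical configuration of an adaptive compression network."""
    name: str
    rate_kbps: float       # transmission rate the configuration needs
    compute_mflops: float  # on-device computation it costs
    accuracy: float        # analytics accuracy achieved at the server


# Illustrative values only: equal accuracy, with lower rate bought by more compute.
OPERATING_POINTS = [
    OperatingPoint("light", rate_kbps=800.0, compute_mflops=50.0, accuracy=0.90),
    OperatingPoint("medium", rate_kbps=400.0, compute_mflops=120.0, accuracy=0.90),
    OperatingPoint("heavy", rate_kbps=200.0, compute_mflops=300.0, accuracy=0.90),
]


def select_operating_point(
    channel_kbps: float,
    min_accuracy: float,
    points: Sequence[OperatingPoint] = OPERATING_POINTS,
) -> Optional[OperatingPoint]:
    """Return the lowest-compute point that fits the channel and accuracy target."""
    feasible = [
        p for p in points
        if p.rate_kbps <= channel_kbps and p.accuracy >= min_accuracy
    ]
    if not feasible:
        return None  # no configuration can meet the target on this channel
    return min(feasible, key=lambda p: p.compute_mflops)


if __name__ == "__main__":
    for capacity in (1000.0, 500.0, 250.0, 100.0):
        choice = select_operating_point(capacity, min_accuracy=0.90)
        print(capacity, "kbps ->", choice.name if choice else "no feasible point")
```

As the available channel rate drops, the rule moves to configurations that transmit less but compute more on the device, mirroring the behavior the project describes.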

Further, wireless channel allocation and scheduling policies that leverage the proposed adaptive DNN architectures will be developed to optimize the overall analytics accuracy at the server. The benefits of the proposed approach in terms of total battery life savings for the mobile device will be demonstrated using detailed simulation studies of various wireless protocols including those used for LTE (Long Term Evolution) and mmWave channels.

This award reflects NSF's statutory mission and has been deemed worthy of support through evaluation using the Foundation's intellectual merit and broader impacts review criteria.