Immersive Computing Lab teams up with Meta to uncover how energy-saving tactics affect perceived quality of XR experiences

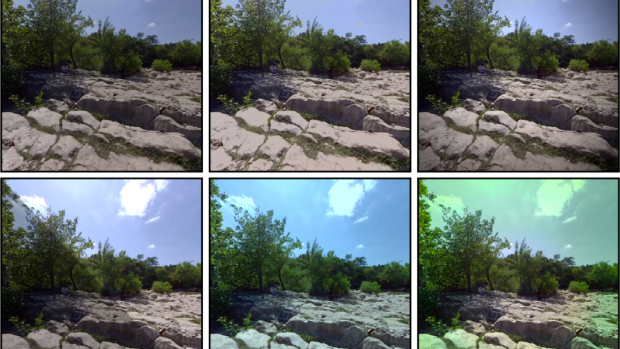

The results of six power-saving display mapping algorithms for XR devices.

As the boundary between real and virtual experiences continues to blur, balancing power efficiency against image quality in compact XR devices is becoming increasingly critical. The Immersive Computing Lab, directed by Qi Sun, assistant professor at the Center for Urban Science + Progress (CUSP) and in the Department of Computer Science and Engineering at NYU Tandon, explores how closely XR can match human perception of reality. A key part of this effort is ensuring that standalone XR devices can be used for extended periods without sacrificing image quality.

“XR devices have benefited various professional and societal scenarios, such as modeling human decision-making in urban disasters and first responder training,” said Sun. “These applications demand extended usage sessions. However, current XR devices may only last for 2 to 2.5 hours on a single battery charge, with about 40% of the energy consumption on XR headsets coming from the displays.”
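The figures in Sun's quote allow a rough estimate of what display savings are worth in battery life. The sketch below is a back-of-the-envelope model, not a result from the study: it assumes power draw is constant over a session, the display accounts for a fixed 40% of it, and everything else is unchanged. Under those assumptions, cutting display power by a fraction r scales runtime by 1 / (1 - 0.4r). The function name `estimated_runtime_hours` is hypothetical.

```python
def estimated_runtime_hours(baseline_hours: float,
                            display_share: float,
                            display_savings: float) -> float:
    """Rough headset runtime after reducing display power (simplified model).

    baseline_hours:  runtime on one charge with no power saving (e.g. 2.0 to 2.5 h)
    display_share:   assumed fraction of total power drawn by the display (e.g. 0.4)
    display_savings: fraction of display power removed by a display mapping algorithm

    Assumes constant power draw, so runtime is inversely proportional to total power.
    """
    if not (0.0 <= display_share <= 1.0 and 0.0 <= display_savings <= 1.0):
        raise ValueError("display_share and display_savings must lie in [0, 1]")
    remaining_power = 1.0 - display_share * display_savings
    return baseline_hours / remaining_power


if __name__ == "__main__":
    # Halving display power at a 40% display share removes 20% of total power.
    for base in (2.0, 2.5):
        print(f"{base:.1f} h -> {estimated_runtime_hours(base, 0.4, 0.5):.2f} h")
```

In this model even a large display saving yields a bounded gain, because the remaining 60% of the power budget is untouched.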

Sun, computer science Ph.D. student Kenneth Chen, and collaborators from Meta recently presented their research at SIGGRAPH 2024, the annual conference of the Association for Computing Machinery's Special Interest Group on Computer Graphics and Interactive Techniques (ACM SIGGRAPH). Their paper, “PEA-PODs: Perceptual Evaluation of Algorithms for Power Optimization in XR Displays,” received an Honorable Mention for Best Paper. It evaluates six display mapping algorithms (power-saving techniques that reduce display power use while preserving the image’s intended appearance) and translates the findings into a unified scale that measures perceptual impact.

“Meta is an industrial leader in the Metaverse and creator of XR devices like the Quest 3,” said Sun. “Our interdisciplinary team developed human subject studies and design optimization for future XR hardware.”

The study used two head-mounted displays, the HTC VIVE Pro Eye and the Meta Quest Pro, to investigate six techniques for reducing XR display power: uniform dimming, luminance clipping, brightness rolloff, dichoptic dimming, color foveation, and whitepoint shift. Uniform dimming reduces brightness evenly across the display. Luminance clipping caps the intensity of the brightest areas. Brightness rolloff uses eye tracking to dim the image progressively from the center of gaze toward the edges of the visual field. Dichoptic dimming dims the display for one eye only, so the two eyes see different brightness levels. Color foveation alters colors in peripheral vision, where color sensitivity is lower, while preserving colors at the center of gaze. Whitepoint shift adjusts the color temperature of the display to save power.
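To make the descriptions above concrete, here is a simplified per-pixel sketch of five of the six mappings. It is an illustration under stated assumptions, not the implementations evaluated in the study: pixels are linear-light RGB tuples in [0, 1], all parameter defaults are invented, and `display_power` is a toy emissive-display model whose per-channel weights (`POWER_WEIGHTS`, with blue assumed least efficient) are hypothetical. Color foveation is left out because it depends on a model of peripheral color discrimination that a few lines cannot capture faithfully.

```python
# Simplified per-pixel sketches of five display mapping ideas.
# Pixels are linear-light RGB tuples in [0, 1]. Every default parameter here is an
# illustrative assumption, not a setting from the PEA-PODs study.

RGB = tuple[float, float, float]

# Rec. 709 luminance weights for linear RGB.
LUMA_WEIGHTS = (0.2126, 0.7152, 0.0722)

# Hypothetical relative power cost per unit output of each subpixel (blue assumed costliest).
POWER_WEIGHTS = (1.0, 0.8, 1.6)


def luminance(p: RGB) -> float:
    return sum(w * c for w, c in zip(LUMA_WEIGHTS, p))


def display_power(p: RGB) -> float:
    """Toy emissive-display power model: weighted sum of channel intensities."""
    return sum(w * c for w, c in zip(POWER_WEIGHTS, p))


def scale(p: RGB, gain: float) -> RGB:
    return tuple(c * gain for c in p)


def uniform_dimming(p: RGB, gain: float = 0.8) -> RGB:
    """Dim every pixel by the same factor."""
    return scale(p, gain)


def luminance_clipping(p: RGB, max_luminance: float = 0.7) -> RGB:
    """Scale down only pixels brighter than the cap, keeping their chromaticity."""
    y = luminance(p)
    return p if y <= max_luminance else scale(p, max_luminance / y)


def brightness_rolloff(p: RGB, eccentricity_deg: float, inner_deg: float = 10.0,
                       slope_per_deg: float = 0.01, min_gain: float = 0.5) -> RGB:
    """Dim with angular distance from the tracked gaze point, beyond an untouched inner region."""
    gain = 1.0 - slope_per_deg * max(0.0, eccentricity_deg - inner_deg)
    return scale(p, max(min_gain, gain))


def dichoptic_dimming(left: RGB, right: RGB, gain: float = 0.7) -> tuple[RGB, RGB]:
    """Dim the image for one eye (here, the right) and leave the other unchanged."""
    return left, scale(right, gain)


def whitepoint_shift(p: RGB, gains: RGB = (1.0, 0.95, 0.8)) -> RGB:
    """Warm the whitepoint by attenuating blue (and slightly green) output."""
    return tuple(c * g for c, g in zip(p, gains))


if __name__ == "__main__":
    white = (1.0, 1.0, 1.0)
    print("original          ", display_power(white))
    print("uniform dimming   ", display_power(uniform_dimming(white)))
    print("luminance clipping", display_power(luminance_clipping(white)))
    print("rolloff at 30 deg ", display_power(brightness_rolloff(white, 30.0)))
    print("whitepoint shift  ", display_power(whitepoint_shift(white)))
```

Writing each mapping as a pure function of color (plus gaze eccentricity where needed) keeps the techniques easy to compare and to combine, though real systems apply them per frame on the GPU.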

The researchers started with small pilot studies to identify suitable settings for each display mapping algorithm, ensuring the effects were neither too obvious nor too subtle. They then used these refined settings in a larger study to evaluate each technique’s performance. In the main study, 30 participants viewed 360° stereoscopic videos, toggled between a reference and a test version, and chose the one they judged to be of higher quality.
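A forced-choice protocol like this yields, for each condition, a count of how often participants preferred the reference. The sketch below shows one common way to turn such counts into a perceptual scale, assuming a Thurstone Case V model and the just-objectionable-difference (JOD) convention, in which 1 unit corresponds to 75% of choices favoring one option. Whether this matches the paper's exact scaling procedure is an assumption, and the function names are hypothetical. Dedicated scaling toolkits fit all pairwise comparisons jointly by maximum likelihood; inverting each pair separately, as here, is a simplification.

```python
from statistics import NormalDist

# Under the JOD convention, 1 unit is the difference at which 75% of choices favor one option.
_Z_75 = NormalDist().inv_cdf(0.75)


def preference_for_reference(choices: list[str]) -> tuple[int, int]:
    """Count how often the reference won in a block of forced-choice trials.

    Each entry is "reference" or "test": the version the participant judged higher quality.
    """
    n_ref = sum(1 for c in choices if c == "reference")
    return n_ref, len(choices)


def quality_loss_jod(n_ref: int, n_total: int) -> float:
    """Thurstone Case V estimate of the test condition's quality loss, in JOD-like units.

    Proportions of exactly 0 or 1 would map to infinity, so they are pulled in by half a trial.
    Positive values mean the reference was preferred (the power-saving version looked worse).
    """
    if n_total <= 0:
        raise ValueError("need at least one trial")
    p = n_ref / n_total
    p = min(max(p, 0.5 / n_total), 1.0 - 0.5 / n_total)
    return NormalDist().inv_cdf(p) / _Z_75


if __name__ == "__main__":
    trials = ["reference", "test", "reference", "reference"]  # hypothetical responses
    print(quality_loss_jod(*preference_for_reference(trials)))
```

A value near 0 means participants could not reliably tell the power-saving version from the reference, which is the regime the pilot studies aimed to avoid drifting too far from in either direction.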

Before this study, there was no standardized method for measuring the perceptual impact of display mapping techniques. This research fills that gap, offering guidance on which techniques save power most efficiently, how to tune display settings, and how to weigh power savings against visible quality loss. Among the methods tested, brightness rolloff emerged as the most power-efficient, while whitepoint shift had a more noticeable negative effect on perceived image quality.
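One simple way to express "efficiency" in this sense is power saved per unit of perceptual loss. The sketch below ranks techniques by that ratio; the metric, the `TechniqueResult` type, and the loss floor `min_loss` are illustrative assumptions, not necessarily how the paper compares techniques, and the demo values are placeholders rather than study results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TechniqueResult:
    name: str
    power_saving: float  # fraction of display power saved at the tested setting
    quality_loss: float  # perceptual cost in JOD-like units (positive = worse)


def power_saved_per_unit_loss(r: TechniqueResult, min_loss: float = 0.05) -> float:
    """Higher is better. Near-zero losses are floored so the ratio stays finite."""
    return r.power_saving / max(r.quality_loss, min_loss)


def rank_by_efficiency(results: list[TechniqueResult]) -> list[TechniqueResult]:
    """Order techniques from most to least power saved per unit of perceptual loss."""
    return sorted(results, key=power_saved_per_unit_loss, reverse=True)


if __name__ == "__main__":
    # Placeholder inputs only; substitute measured values for a real comparison.
    demo = [
        TechniqueResult("technique_a", power_saving=0.30, quality_loss=0.6),
        TechniqueResult("technique_b", power_saving=0.20, quality_loss=0.2),
    ]
    for r in rank_by_efficiency(demo):
        print(r.name, round(power_saved_per_unit_loss(r), 2))
```

A single ratio hides the shape of each technique's tradeoff; comparing power savings at a fixed perceptual budget is a natural alternative when savings do not grow linearly with loss.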

“This research paves the way for the Immersive Computing Lab’s core mission of developing human-aligned wearable displays and generative AI algorithms,” said Sun. “In particular, it guides the future of visual computing systems as to how each watt of energy or each gram of CO2 is consumed to create content that is meaningful to humans and environments.”

Chen, K., Wan, T., Matsuda, N., Ninan, A., Chapiro, A., & Sun, Q. (2024). PEA-PODs: Perceptual Evaluation of Algorithms for Power Optimization in XR Displays. ACM Transactions on Graphics (TOG), 43(4), 1–17.