Tandon Teams Meet the Verizon Connected Futures Research and Prototyping Challenge

Empowering citizen journalists, helping the disabled employ robotics with a simple app—it’s all in a day’s work for researchers at NYU Tandon School of Engineering. In early February, two teams from the school triumphed in the Verizon Connected Futures Research and Prototyping Challenge, held in partnership with NYC Media Lab and aimed at supporting the development of new media and technology projects from researchers across the city.

One winning Tandon project, “Witness Video Summarization: A Collective Journalistic Experience,” is being overseen by Professor of Electrical and Computer Engineering Yao Wang, in collaboration with Visiting Professor Xin Feng and graduate students Fanyi Duanmu and Shervin Minaee. Wang explains that, given the ubiquity of cell-phone cameras, any noteworthy event taking place in public is likely to end up being the subject of copious amateur videos. Natural disasters, auto accidents, protest marches, and police actions all provide fodder for anyone ready to hit the record button.

These snippets of citizen journalism turn up almost immediately on video-sharing sites like YouTube and Vimeo, and the sheer quantity makes it an arduous task to sift through them.

Wang’s team is working on algorithms that would identify those videos that depict the event from various angles and at various times. (In the case of a house fire, for example, the app would seek out videos taken from all sides of the structure, as well as those capturing each moment, from first spark until the fire is fully extinguished.) Additionally, it would weed out videos that are too similar in content (those taken from the same angle, at the same moment, for example), choosing what is displayed based on picture quality.
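The team's actual algorithms are not described here, but the selection idea can be illustrated with a minimal sketch. It assumes each clip already carries a camera angle, a timestamp, and a picture-quality score. All of these fields, the `Clip` and `select_clips` names, and the tolerance values are hypothetical and chosen only for illustration. The sketch greedily keeps the best-quality clip for each distinct combination of angle and moment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    clip_id: str
    angle_deg: float  # hypothetical: camera bearing around the scene, 0-360
    time_s: float     # hypothetical: seconds since the event began
    quality: float    # hypothetical: picture-quality score, higher is better


def angle_gap(a: float, b: float) -> float:
    """Smallest difference between two bearings, accounting for wrap-around."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def is_redundant(a: Clip, b: Clip, angle_tol: float, time_tol: float) -> bool:
    """Two clips are 'too similar' if shot from nearly the same angle at nearly the same time."""
    return (angle_gap(a.angle_deg, b.angle_deg) <= angle_tol
            and abs(a.time_s - b.time_s) <= time_tol)


def select_clips(clips, angle_tol: float = 30.0, time_tol: float = 10.0):
    """Greedy selection: visit clips from best to worst quality and keep
    a clip only if it is not redundant with one already kept."""
    kept = []
    for clip in sorted(clips, key=lambda c: c.quality, reverse=True):
        if not any(is_redundant(clip, k, angle_tol, time_tol) for k in kept):
            kept.append(clip)
    # Return the survivors in chronological order for display.
    return sorted(kept, key=lambda c: c.time_s)


if __name__ == "__main__":
    sample = [
        Clip("a", 10.0, 0.0, 0.90),
        Clip("b", 15.0, 5.0, 0.50),   # near-duplicate of "a" and "d"
        Clip("c", 180.0, 2.0, 0.40),  # opposite side of the scene
        Clip("d", 355.0, 3.0, 0.95),  # close to "a" across the 0/360 boundary
        Clip("e", 10.0, 60.0, 0.30),  # same angle as "a", much later
    ]
    for clip in select_clips(sample):
        print(clip.clip_id, clip.angle_deg, clip.time_s, clip.quality)
```

In practice the angle and quality values would have to be estimated from the footage itself, which is the hard part this sketch leaves out.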

The result would be a fully comprehensive, yet non-redundant, trove of first-hand witness video. The app would greatly empower citizen filmmakers, thereby democratizing the journalistic process, and would also prove to be a boon to professional reporters unable to be at a site in person.

The second Tandon project to win funding in the Challenge is the brainchild of two mechanical engineering students—Jared Alan Frank, a doctoral candidate, and Matthew Moorhead, who is earning his master’s degree. They say, “We have all been told the enormous potential that mechatronic and robotic technologies may one day offer to society. However, complex machines such as robots are only as powerful to society as their ability to be comfortably used by people, the majority of whom are non-technical.”

The pair have long been interested in how tablet computers and smartphones could be used as controllers for interacting with robotic technologies. Currently, typical interactions require trained personnel to operate specialty equipment to control a robotic platform. Now that almost everyone carries a smartphone, however, the process can be orders of magnitude more participatory and immersive, and moving, physical systems in the lab can even be controlled remotely. “The smartphone or tablet incorporates very sophisticated hardware and software,” they say, “so why not take advantage of that fact and let it be the eyes, ears, and brain of the system?”

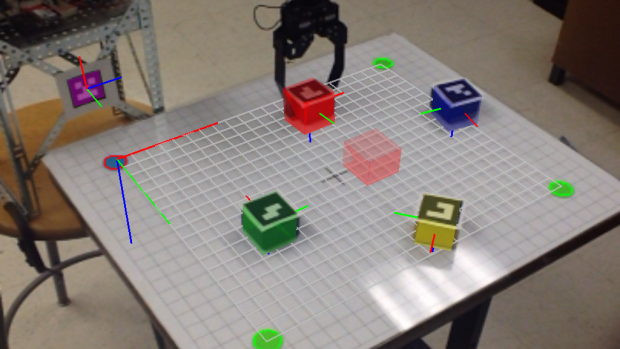

Their award-winning project, “Connecting People to Robotics Using Interactive Augmented Reality Apps,” takes things a step further. They envision a disabled person being able to map his or her environment using virtual reality technology and then controlling a robotic system simply by interacting with that world on the screen. So, for example, reaching a box of cereal with a robotic arm could be as simple as swiping at an icon representing the box in the virtual world on the device. As they point out, robotic systems are already helping the disabled with life tasks, building cars for manufacturers, enabling doctors to do complex surgeries, and disabling bombs for military personnel out in the field, but thanks to virtual reality technology, those feats could potentially be done without bulky, expensive controllers, making them more cost-effective and accessible.

Frank, the interface developer, and Moorhead, the robotics lead, foresee a time when their system is ready for commercialization. “That time might be a little closer now, thanks to Verizon and the NYC Media Lab,” they say.

View a video of an earlier project that acted as a stepping stone to their current work: