Watch New York Public Radio's Demo Day presentation from June 21, 2023.

Inclusive design is a core focus for the Product, Design and Engineering team at New York Public Radio. As our team began to think about how artificial intelligence could be used to create inclusive experiences, we wanted to be sure that our application was both consistent with our mission and relevant to the core business challenges facing our organization.

Using AI to increase the accessibility of our products was a goal that satisfied both of those criteria.

As the marquee public radio station for New York City and the surrounding region, our mission is to provide inspiring storytelling, rigorous journalism and extraordinary music that is free and accessible to all. We define accessibility both in terms of ease of finding and discovering our programming and in terms of providing equal access to all audiences.

Today, our broadcast offers few entry points for people who are deaf or hard of hearing. We also know that live radio has a high barrier to entry for listeners who might be new to the medium or short on time.

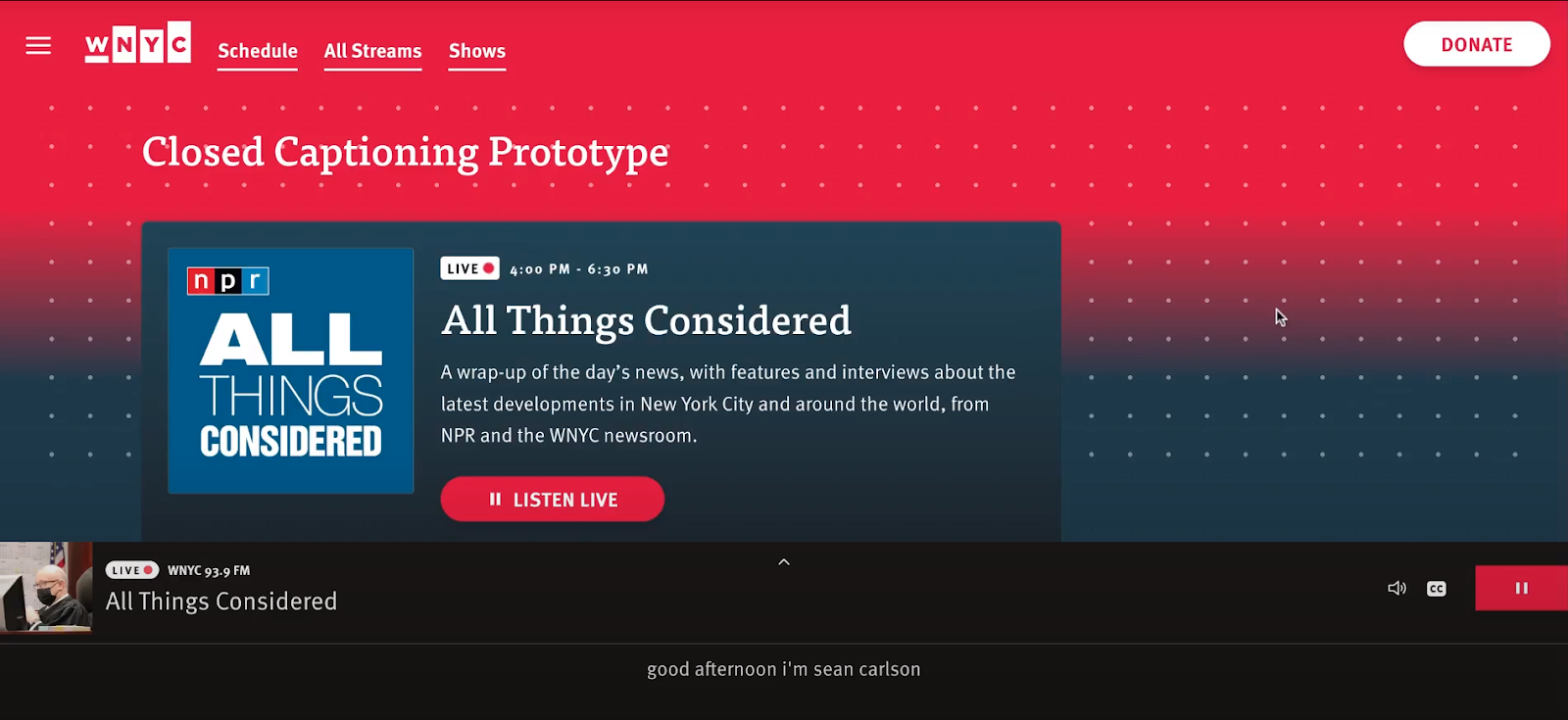

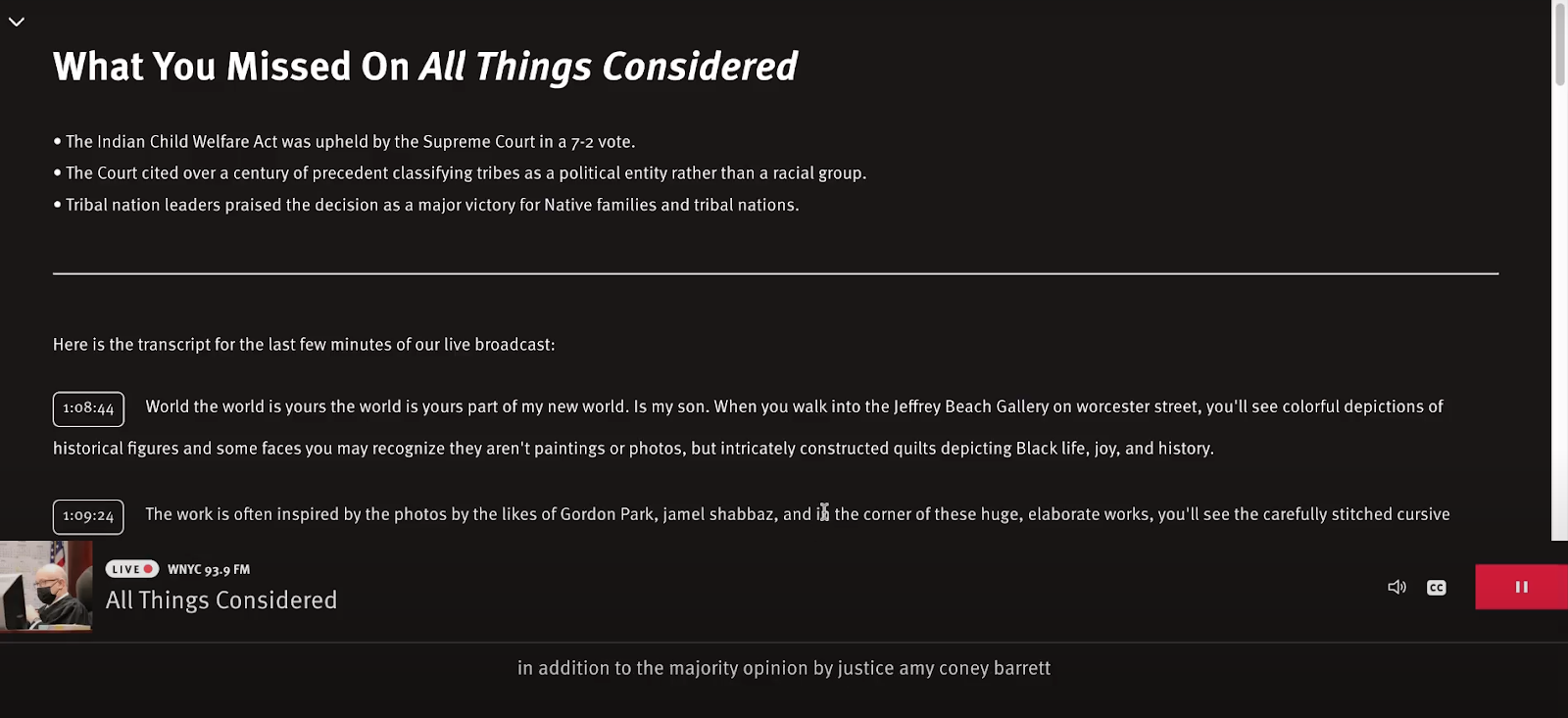

Our project used speech-to-text models to generate closed captions of WNYC-FM in real time. We integrated those captions into a version of the radio player used on WNYC.org.
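To make the idea of live captions in a player more concrete, here is a minimal sketch of one piece such a system might need: a rolling buffer that keeps only the most recent caption segments for display. This is an illustration, not our implementation. The `CaptionSegment` and `CaptionBuffer` names, the timestamp fields and the 30-second default window are all assumptions, and the sketch leaves out the transcription engine itself.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class CaptionSegment:
    """One chunk of transcribed speech (hypothetical shape, for illustration)."""
    start: float  # seconds since the stream started
    end: float    # seconds since the stream started
    text: str


class CaptionBuffer:
    """Keeps only the caption segments that fall inside a rolling time window."""

    def __init__(self, window_seconds: float = 30.0):
        # The window length is an assumed default, not a value from the project.
        self.window_seconds = window_seconds
        self._segments = deque()

    def add(self, segment: CaptionSegment) -> None:
        """Append a new segment and drop any that ended before the window."""
        self._segments.append(segment)
        cutoff = segment.end - self.window_seconds
        while self._segments and self._segments[0].end < cutoff:
            self._segments.popleft()

    def visible_text(self) -> str:
        """Return the text a player could show right now."""
        return " ".join(s.text for s in self._segments)
```

A player could call `add` each time the transcription service returns a new segment and render `visible_text()` beneath the audio controls.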

Artificial intelligence isn’t the only way to generate closed captions. We could have used manual transcription, as we do for some of our after-show transcripts, but that approach would be prohibitively expensive for live broadcasts. We could also have used a traditional closed captioning system, like those used to caption television broadcasts. That approach requires considerable upfront investment and would have been more challenging to integrate with our websites and mobile apps.

We were excited to try an AI-based solution as part of the NYC Media Lab’s AI+Local News Challenge because it had low start-up costs and could be easily integrated with the rest of our platforms. We were also excited to experiment with how AI could help make our broadcast more user-friendly.

During the Challenge, we experimented with using GPT-3.5 to summarize the broadcast. Our prompt produced three bullet points recapping what had just happened on air so a new listener could catch up quickly.
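As a rough sketch of what a summarization request like this could look like, the function below builds a chat-style message list from the most recent stretch of captions. The prompt wording, the `build_summary_messages` name and the `max_chars` limit are assumptions made for illustration; they are not the prompt we used. The sketch only prepares the messages and does not call any model.

```python
def build_summary_messages(transcript: str, max_chars: int = 6000) -> list[dict]:
    """Build role/content chat messages asking for a three-bullet recap.

    Only the last `max_chars` characters of the transcript are included so
    the request stays focused on what aired most recently. The limit and the
    instructions below are illustrative assumptions.
    """
    recent = transcript[-max_chars:]
    return [
        {
            "role": "system",
            "content": (
                "You summarize live radio captions. Use only information "
                "that appears in the captions provided."
            ),
        },
        {
            "role": "user",
            "content": (
                "Recap what just happened on air in exactly three short "
                "bullet points for a listener who just tuned in.\n\n"
                "Captions:\n" + recent
            ),
        },
    ]
```

The resulting list could then be sent to a chat-based model. Asking the model to rely only on the supplied captions is one simple way to work toward summaries a newsroom can trust, though it does not guarantee accuracy.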

The proof of concept we built during the challenge gave us some valuable insights.

Building a working prototype allowed us to focus the conversations around artificial intelligence inside our organization. As we tested and iterated, we were able to have productive conversations with our partners in the newsroom, on our legal team, and in other parts of our organization. Doing focused innovation work as part of the Challenge helped us navigate the tension between looking to the future and solving near-term problems.

Looking beyond the Challenge, we’re excited to continue our work. We’ve already started to share our insights with our peers across public media and are looking forward to finding partners and funders to scale this project. We want to test our prototype with users to ensure it meets the needs of people who are deaf or hard of hearing. We also want to further refine the summarization feature to ensure we can trust the responses generated by GPT.

We believe this work will be foundational to the ongoing conversation about how to imagine a future for local news. Public media is an essential laboratory for testing the technologies that will shape the information landscape and for ensuring that they are deployed in a way that benefits the public.

Project team: Sam Guzik, Josh Gitlin, Tember Hopkins and Kim LaRocca