Mechatronics and Controls lab - Part A

Ulugbek Akhmedo

Support software development for coordinating CAESAR's gestures with voice commands.

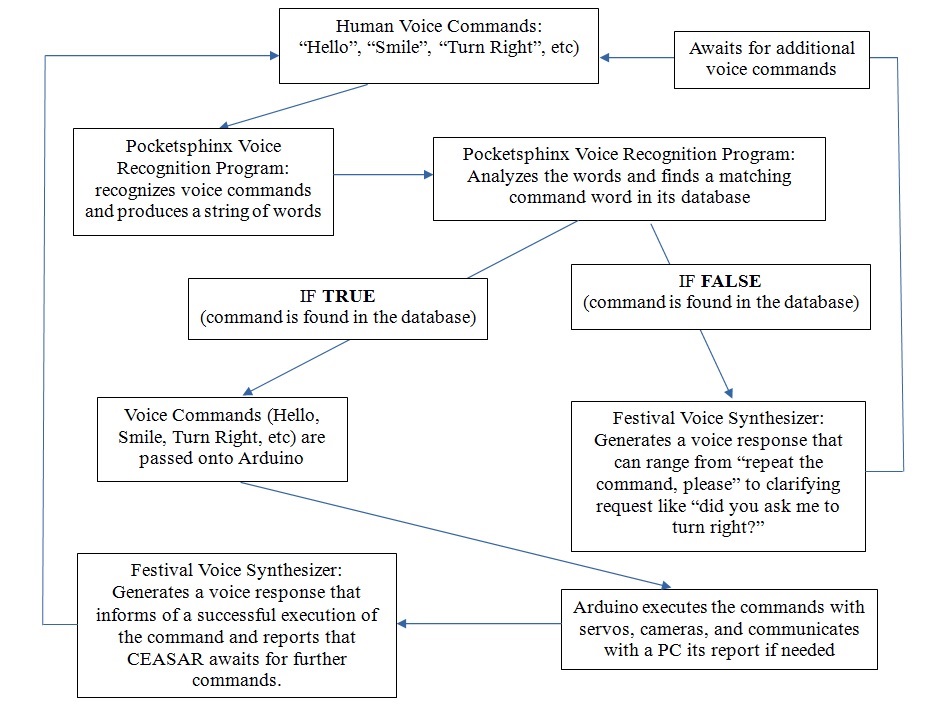

Progress over the past week was slow, but the problem is now essentially understood. The diagram above shows how voice command coordination and voice response should work to help coordinate CAESAR remotely.

The schematic shows that the operator's voice commands are analyzed by the Pocketsphinx speech recognition program, which produces a single word or a string of words. This output is matched against an established set of words that correspond to commands sent to the robot. If matching is unsuccessful, the Festival speech synthesizer should be triggered to produce a voice response asking for clarification or confirmation of the given command.
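The matching step with its clarification fallback can be sketched in Python as follows. This is a minimal sketch, not the project's implementation. The vocabulary in `KNOWN_COMMANDS` is hypothetical. Using `difflib` to suggest the closest known command in the clarification prompt is an added assumption. The `festival_speak` helper assumes the `festival` binary is installed and on the PATH; `festival --tts` reads text from standard input and speaks it.

```python
import difflib
import subprocess
from typing import Callable, Optional

# Hypothetical command vocabulary; the real list is still to be defined.
KNOWN_COMMANDS = {"go forward", "turn right", "turn left", "stop", "drop the ball"}


def normalize(hypothesis: str) -> str:
    """Lower-case the Pocketsphinx hypothesis and collapse repeated spaces."""
    return " ".join(hypothesis.lower().split())


def festival_speak(text: str) -> None:
    """Speak text aloud; assumes the `festival` binary is on the PATH."""
    subprocess.run(["festival", "--tts"], input=text, text=True, check=False)


def handle_hypothesis(hypothesis: str, speak: Callable[[str], None]) -> Optional[str]:
    """Return the matched command, or ask for clarification and return None."""
    phrase = normalize(hypothesis)
    if phrase in KNOWN_COMMANDS:
        return phrase
    # No exact match: suggest the closest known command if one is similar enough.
    close = difflib.get_close_matches(phrase, sorted(KNOWN_COMMANDS), n=1)
    if close:
        speak(f"Did you mean {close[0]}?")
    else:
        speak("Sorry, I did not understand. Please repeat the command.")
    return None
```

In use, `handle_hypothesis(text, festival_speak)` would be called on each hypothesis that Pocketsphinx returns. Passing `speak` in as a parameter keeps the matcher testable without a sound card.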

When the words match a command known to the robot, the Arduino (or another microcontroller) receives the command(s) from Pocketsphinx and executes them. Such commands could include navigating the robot (e.g. “Go Forward”, “Turn Right”, or “Stop”), manipulating objects with its hands (e.g. “Pick up a yellow ball”, “Drop the ball”), locating objects and reporting their positions to the operator (e.g. “Locate the blue block”, “Where is the red rectangle?”), and finally responding to conversational questions (e.g. “What is your name?”, “How old are you?”, “Where do you live?”, “What is the weather like today?”).
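Routing a matched command can be sketched as follows. This rests on two hypothetical assumptions: the Arduino sketch accepts short newline-terminated ASCII codes over a serial link, and conversational questions are answered by Festival alone. The codes in `ARDUINO_CODES` and the reply in `SPOKEN_REPLIES` are placeholders, not an agreed protocol.

```python
from typing import Callable, Dict

# Hypothetical serial codes; the Arduino sketch would need to parse these lines.
ARDUINO_CODES: Dict[str, str] = {
    "go forward": "FWD",
    "turn right": "RIGHT",
    "stop": "STOP",
    "pick up a yellow ball": "PICK YELLOW BALL",
    "drop the ball": "DROP",
    "locate the blue block": "LOCATE BLUE BLOCK",
}

# Placeholder answer for a conversational question handled by voice only.
SPOKEN_REPLIES: Dict[str, str] = {
    "what is your name": "My name is CAESAR.",
}


def route(command: str,
          write: Callable[[bytes], object],
          speak: Callable[[str], None]) -> str:
    """Send a matched command to the Arduino or answer it by voice.

    Returns "arduino", "voice" or "unknown" so the caller can log the outcome.
    """
    if command in ARDUINO_CODES:
        # One ASCII line per command keeps parsing simple on the microcontroller.
        write((ARDUINO_CODES[command] + "\n").encode("ascii"))
        return "arduino"
    if command in SPOKEN_REPLIES:
        speak(SPOKEN_REPLIES[command])
        return "voice"
    return "unknown"
```

With pySerial, `write` could be the `write` method of a port opened as `serial.Serial("/dev/ttyACM0", 9600)`. The port name and baud rate there are assumptions that depend on the actual setup.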

Additionally, certain keywords could be used to shut down the program. This can be done by writing the Pocketsphinx code so that, when it hears a specific command, it stops listening and brings the robot to a stop (if it is moving). Light signals could provide an additional indication that the robot has stopped listening. At this point, Festival can synthesize an acknowledgment of the last command.

Ideally, voice commands could also be used to “wake up” the robot or attract its attention. Again, specific keywords should be used (e.g. "CAESAR wake up"). As in the shutdown sequence, the robot should indicate that it can hear you and acknowledge this through the Festival synthesizer, saying, for example, "I am listening, sir", "I am ready", or "I am waiting for your commands, my friend."
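The wake-up and shutdown behavior amounts to a two-state gate placed in front of the command matcher. The sketch below uses "CAESAR wake up" as the wake phrase, taken from the text. The shutdown phrase "CAESAR go to sleep" is hypothetical. `send_stop` is a hypothetical callback standing in for whatever stops the motors and switches on the indicator light.

```python
from typing import Callable

WAKE_PHRASE = "caesar wake up"        # example keyword from the text
SLEEP_PHRASE = "caesar go to sleep"   # hypothetical shutdown keyword


class ListeningGate:
    """Two-state filter: commands pass through only while the robot is awake."""

    def __init__(self, speak: Callable[[str], None], send_stop: Callable[[], None]):
        self.listening = False
        self.speak = speak
        self.send_stop = send_stop  # hypothetical: stop motors, switch on indicator light

    def feed(self, hypothesis: str) -> bool:
        """Update the state; return True if the phrase should be treated as a command."""
        phrase = " ".join(hypothesis.lower().split())
        if not self.listening:
            if phrase == WAKE_PHRASE:
                self.listening = True
                self.speak("I am listening, sir.")
            return False  # everything except the wake phrase is ignored while asleep
        if phrase == SLEEP_PHRASE:
            self.send_stop()  # bring the robot to a stop before it stops listening
            self.listening = False
            self.speak("Stopping. I will wait until you wake me up.")
            return False
        return True
```

Only phrases for which `feed` returns True would be passed on to the matcher. This keeps background speech from moving the robot while it is asleep.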

As a first step, we should establish communication from Pocketsphinx to the Arduino unit. Once this works, tuning the program will improve the robot's accuracy in recognizing and executing voice commands. The second stage will be communication from the Arduino to Festival and back to Pocketsphinx, so that the robot can respond vocally and interact with humans continuously. The last stage will be fine-tuning the individual parts, expanding the vocabulary and the library of commands that match it, and extending the range of tasks the robot can perform.